Mastering Legal and Ethical AI Compliance: Europe and the World

Executive Summary

This webinar covers a range of topics related to AI development and legal compliance, including ethical considerations, the impact of AI on society and law, and the European Union's AI Act. Arnoud Engelfriet discusses the need for Data Governance and Management in AI systems, human oversight in AI deployment, and the challenges that large language models pose for managing personal data. The webinar highlights the significance of accountability, compliance, and the EU's approach to trustworthy AI, as well as the risk-based approach of the AI Act and the controversies surrounding data use in AI systems and social networks.

Webinar Details:

Title: Mastering Legal and Ethical AI Compliance: Europe and the World

Date: 21 October 2024

Presenter: Arnoud Engelfriet

Meetup Group: DAMA SA User Group

Write-up Author: Howard Diesel

Contents

Executive Summary

Webinar Details

Ethical Considerations and Legal Compliance in AI Development with Arnoud Engelfriet

Ethical Implications and Risks of Artificial Intelligence Development

The AI Act and Legal Liability: Autonomous Car Accidents

Issues of False Positives and Negatives: Case Study Smart Cameras in Netherlands

The Impact of Artificial Intelligence on Law and Society

Controversies of Data Use in AI Systems and Social Networks

Artificial Intelligence Legislation and Policy in South Africa

The European Union AI Act

The European Union’s AI Act on Innovation

Understanding the Risk-Based Approach of the AI Act

The AI Act and Its Implications

EU Conformity and AI Regulations

AI Technology: Concerns of a Social Credit System

Compliance and Consequences of High-Risk AI Deployment in the EU

Human Oversight in AI Deployment

Data Governance and the AI Impact Assessment

Accountability and Compliance in the Utilization of AI Systems

Ethical Challenges and Accountability in AI Adoption

The EU's Approach to Trustworthy AI

Challenges of Personal Data Management Systems

Ethical Considerations and Legal Compliance in AI Development with Arnoud Engelfriet

Arnoud Engelfriet, a Dutch IT lawyer and Chief Knowledge Officer, opens the webinar. He discusses his book "AI and Algorithms: Mastering Legal and Ethical Compliance" and his role in developing a course for Certified AI Compliance Officers. He emphasises the importance of understanding both the legal and the ethical considerations when working with AI, especially in the context of the complex European Union AI Act.

Figure 1 Introduction Cover Slide

Figure 2 About the Speaker

Ethical Implications and Risks of Artificial Intelligence Development

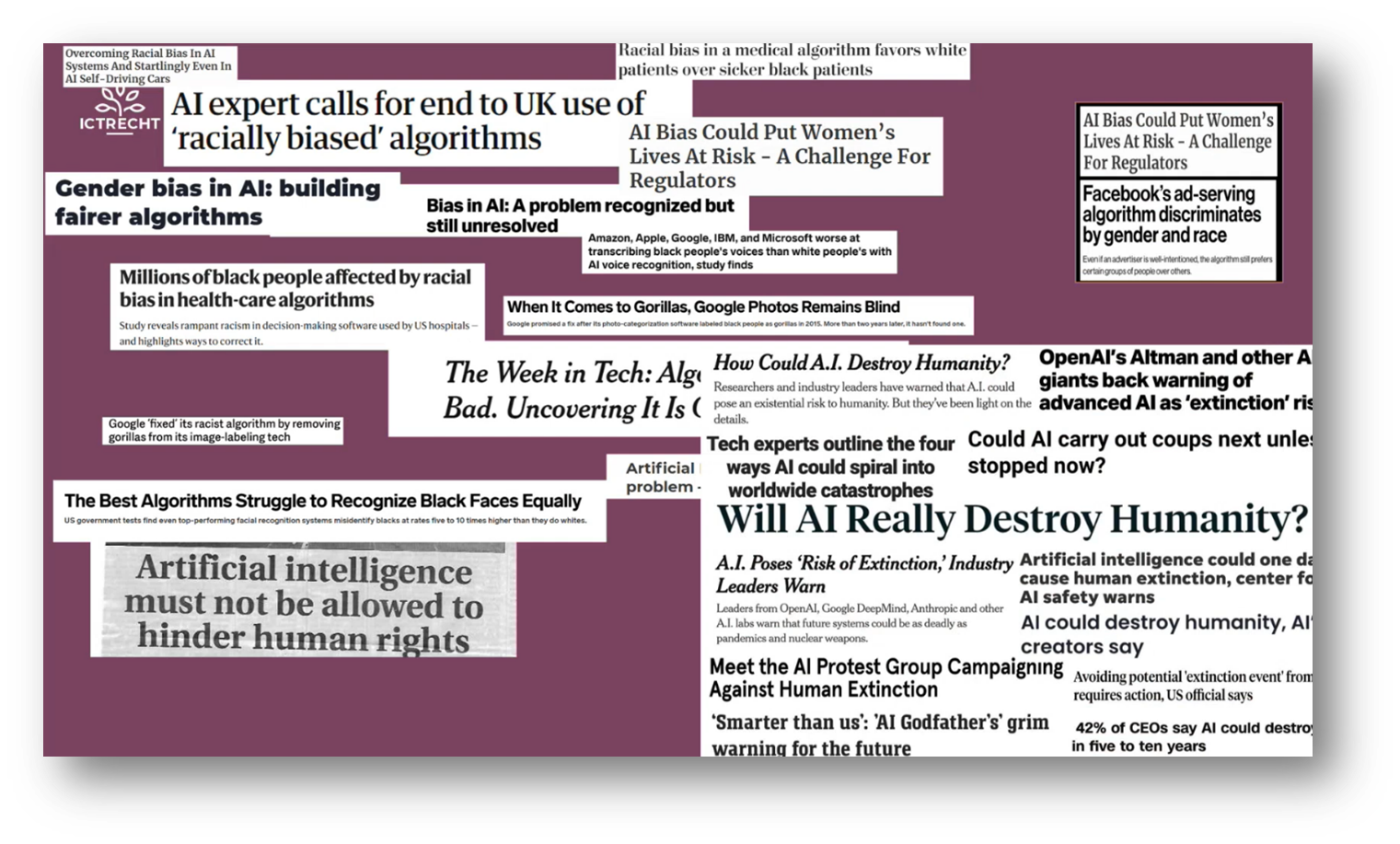

Arnoud and the attendees discuss the implications of AI. Concerns are raised about biases in AI systems based on ethnic background, skin colour, and gender, as well as the potential for AI to benefit certain regions disproportionately. Worries about AI taking over the world or posing a threat to humanity are acknowledged but deemed unlikely. Asked about real-world AI applications such as self-driving cars and the ethical dilemmas they present, Arnoud highlights the need to address the lower-level, practical issues in AI development.

Figure 3 Controversies Surrounding AI

Figure 4 Self-driving Car View

The AI Act and Legal Liability: Autonomous Car Accidents

For self-driving cars in Europe, the AI Act's product safety legislative framework holds the producer, the company, and the seller liable for any accident caused by the autonomous vehicle. An alternative approach proposed in the US is a fund to which every producer of autonomous cars contributes and which pays out when accidents occur. Acting as mandatory insurance, such a fund avoids lawsuits and punitive damages and so encourages innovation. This mirrors the approach used for medication, where it encourages the development of new drugs without the fear of being sued out of existence.

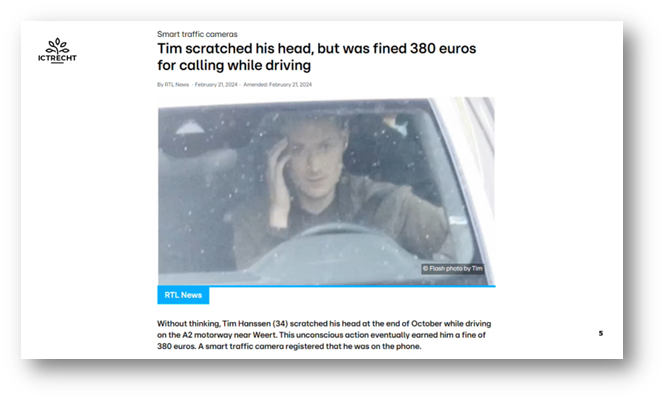

Issues of False Positives and Negatives: Case Study Smart Cameras in Netherlands

In the Netherlands, a smart camera system used by the police to catch illegal phone use while driving falsely accused a man named Tim Hanssen of holding a phone. The system incorrectly identified Tim's hand, which was scratching his head, as holding a phone. It was later discovered that the open-source software used by the police had a bug causing false positives, where the system detects something that isn't actually there. This incident highlights the importance of addressing false positives in automated systems.

Figure 5 News Snippet

Figure 6 Tim Hanssen Demonstrating False Positives

The Impact of Artificial Intelligence on Law and Society

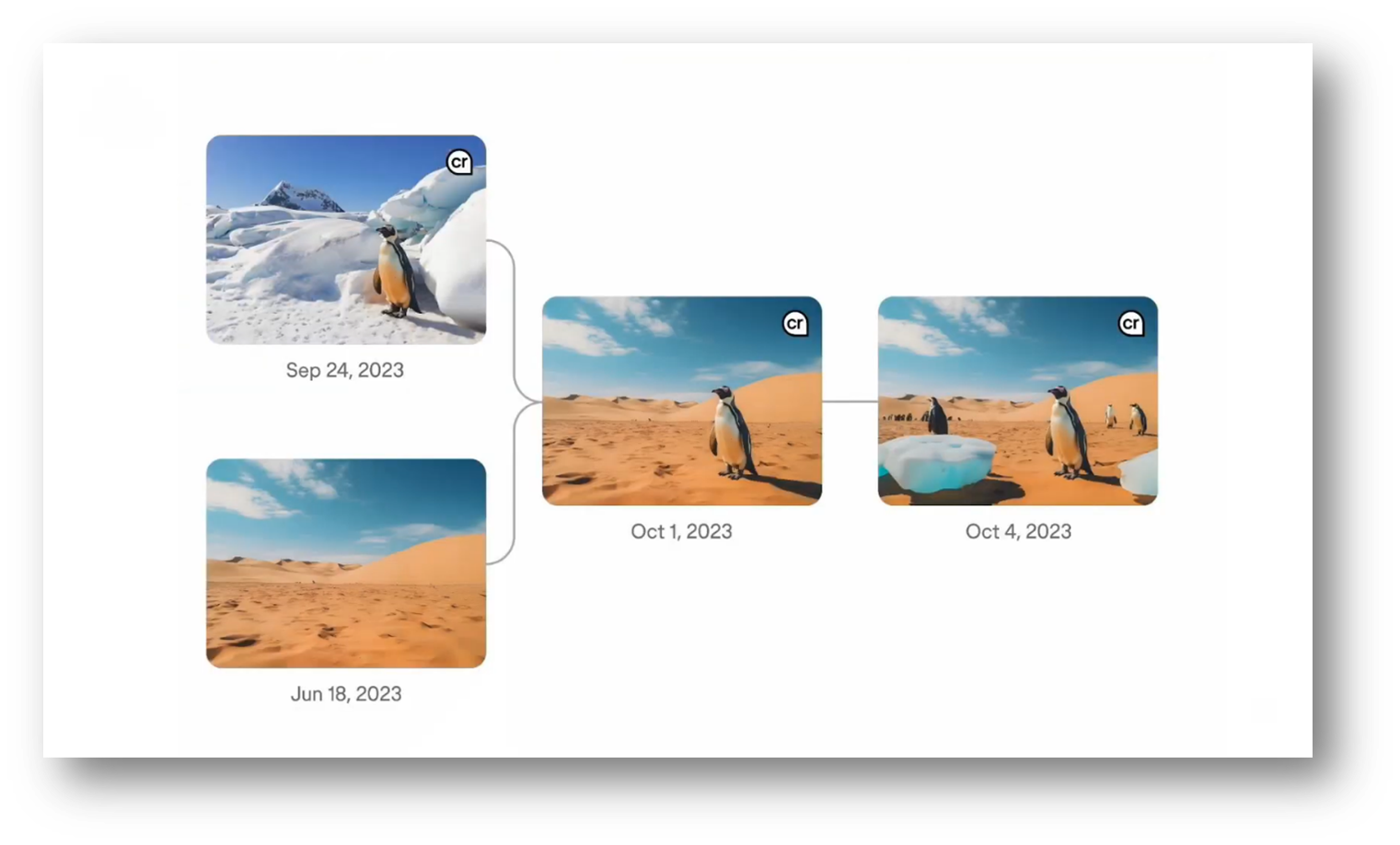

The use of artificial intelligence in image processing raises questions about the reliability of evidence and of recordings of the real world. One example is a police officer confirming AI-detected violations: because the officer only reviews what the system has flagged, this check can at best weed out false positives, may be biased towards confirming them, and never reveals the violations the system missed. Furthermore, AI's ability to manipulate and generate images raises concerns about trust and authenticity. Efforts such as Adobe's Content Credentials proposal aim to address these issues by embedding metadata in edited photos. However, the fundamental challenge of showing that a recording captures the original moment remains, affecting how evidence and recordings are perceived. The use of AI to generate legal documents has likewise raised concerns about their authenticity.
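To make the false positive versus false negative distinction concrete, here is a minimal Python sketch using invented counts for a hypothetical camera system (they are not figures from the Dutch case). It illustrates why an officer who reviews only flagged images can check precision (how many flags are correct) but learns nothing about recall (how many real violations were caught).

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged cases that are genuine violations."""
    return true_positives / (true_positives + false_positives)


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of genuine violations that the system actually flagged."""
    return true_positives / (true_positives + false_negatives)


# Hypothetical counts for one day of camera output (illustrative only).
tp = 90  # flagged, and the driver really was holding a phone
fp = 10  # flagged, but e.g. a hand scratching a head
fn = 30  # real phone use that the camera never flagged

# An officer who reviews only the 100 flagged images can spot the 10 false
# positives, but the 30 missed violations never reach the officer at all.
print(f"precision: {precision(tp, fp):.2f}")  # precision: 0.90
print(f"recall:    {recall(tp, fn):.2f}")     # recall:    0.75
```

Human confirmation of flagged cases improves precision, but measuring recall requires deliberately sampling unflagged footage as well.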

AI systems can present factually incorrect information, which is problematic when we rely on them. A notable incident occurred in South Africa in July 2023, when a lawyer relied on ChatGPT, which produced references to non-existent cases, leading to a judicial rebuke. The problem arises partly because the term “artificial intelligence” leads us to regard AI as human-like, attributing human traits and reasoning to these systems. However, AI does not reason or evaluate facts as humans do; it uses statistical methods to predict likely outputs. This shift from human reasoning to statistical prediction could significantly alter legal arguments, case decisions, and interpersonal interactions, a substantial departure from current practice.

Figure 7 AI Manipulation of Image

Figure 8 'Washington Judge bans use of AI-enhanced video as trial evidence'

Figure 9 Metadata of AI-enhanced Image

Figure 10 Images Spliced to Create AI-enhanced Image

Figure 11 Case Study of AI Misuse

Figure 12 “South African Lawyer Fined for ChatGPT” Article Snippets

Controversies of Data Use in AI Systems and Social Networks

There is currently a significant debate about the use of data, particularly for training large AI systems. Major social networks are keen to use the vast amounts of user data they hold, including chats on WhatsApp and discussions on platforms such as Facebook, Instagram, and LinkedIn. This has led to legal action, such as a lawsuit alleging that LinkedIn did not obtain proper consent from users before updating its terms of use. The lawsuit is ongoing, and its outcome remains to be seen.

Figure 13 South Africa and AI Regulation

Artificial Intelligence Legislation and Policy in South Africa

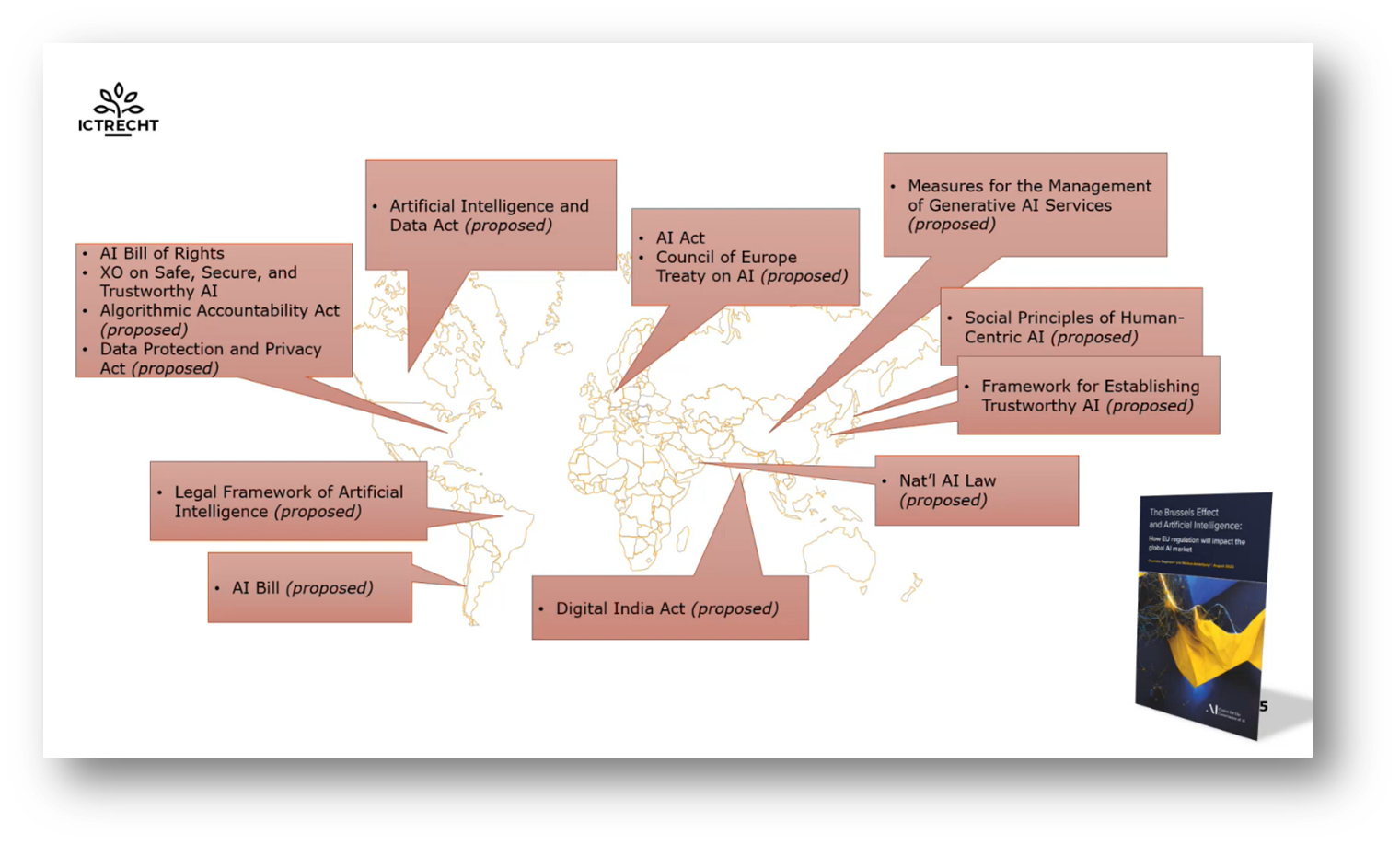

Countries worldwide are at different stages of developing legislation and frameworks to regulate Artificial Intelligence (AI). South Africa has proposed a national AI policy framework, while the United States and other countries are working on guidelines and legislation to address AI-related issues such as discriminatory hiring and data protection. The European Union has enacted the AI Act, and an international treaty on AI aims to set a baseline for responsible AI. Countries including India, South Korea, Brazil, and China are also developing AI-related legislation. The law firm White & Case maintains a global AI regulatory tracker with updates on the latest developments in AI regulation worldwide.

Figure 14 Global AI Legislations

The European Union AI Act

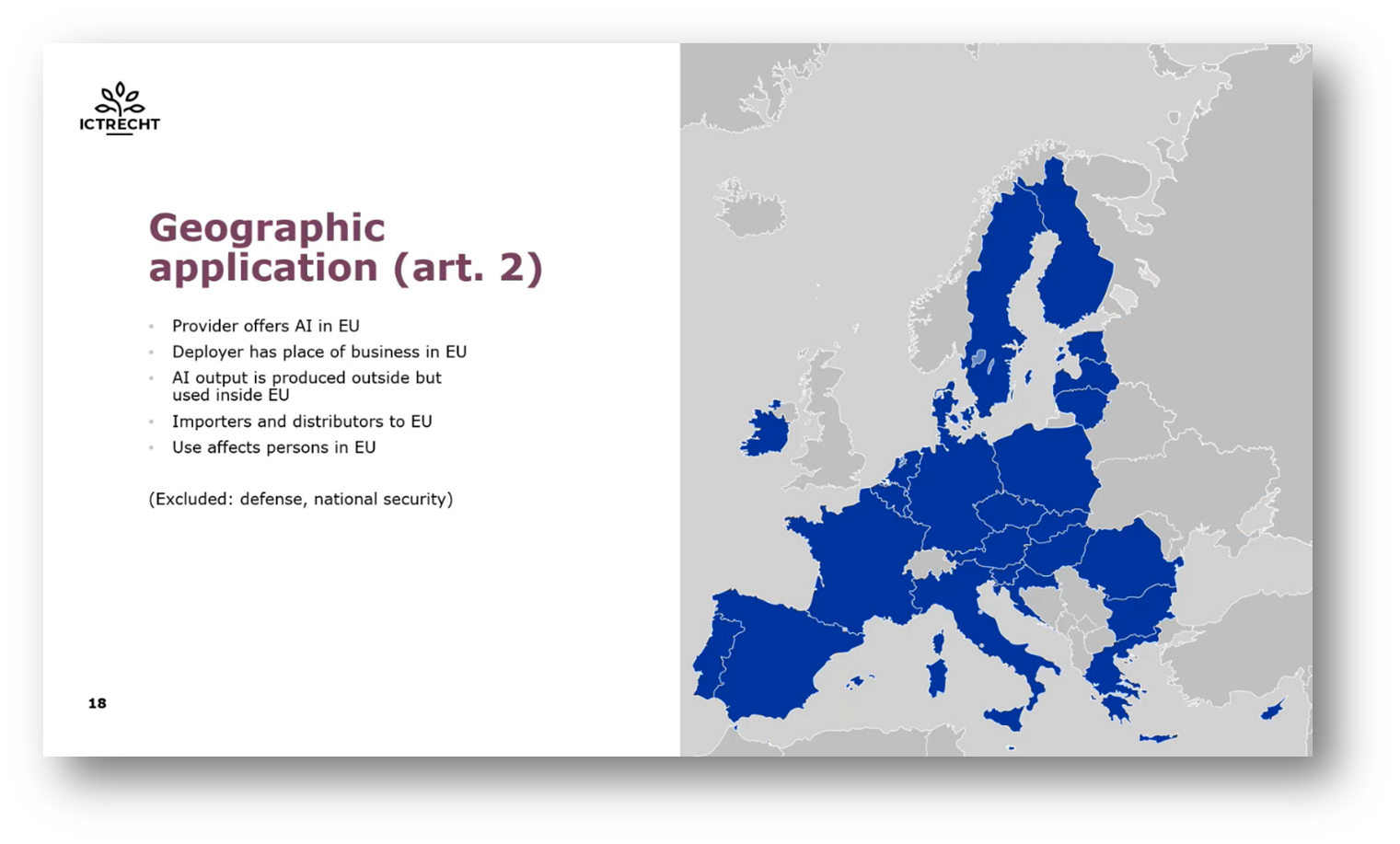

In Europe, the AI Act is a regulation that applies in all EU countries and even extends to some entities outside the EU. Its main goal is to regulate AI that affects European citizens, businesses, and society. If you offer AI as a product or service in the EU, you are covered by the AI Act whether you are based inside or outside the EU. The Act also applies if you have a place of business in the EU that operates AI, or if you import or distribute AI into the EU. Using AI in a way that directly affects people in the EU also triggers the Act. Even producing AI output outside the EU and bringing it into the EU makes the producer or operator subject to the AI Act.
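The territorial triggers listed above can be read as a simple "any one is enough" check. The following Python sketch encodes them as a first-pass screen; the `AIActivity` class and its field names are hypothetical labels for the triggers described in the webinar, not terms defined in the AI Act.

```python
from dataclasses import dataclass, fields


@dataclass
class AIActivity:
    """Hypothetical checklist of how an organisation deals with an AI system.

    Each flag mirrors one trigger described in the webinar; the names are
    illustrative and are not terms defined in the AI Act itself.
    """
    offers_ai_product_or_service_in_eu: bool = False
    operates_ai_from_eu_establishment: bool = False
    imports_or_distributes_ai_into_eu: bool = False
    use_directly_affects_people_in_eu: bool = False
    output_produced_abroad_used_in_eu: bool = False


def ai_act_may_apply(activity: AIActivity) -> bool:
    """First-pass screen: any single trigger is enough to warrant a closer look."""
    return any(getattr(activity, f.name) for f in fields(activity))


# A provider based outside the EU whose AI output ends up being used in the EU.
overseas_provider = AIActivity(output_produced_abroad_used_in_eu=True)
print(ai_act_may_apply(overseas_provider))  # True
print(ai_act_may_apply(AIActivity()))       # False
```

A real applicability analysis depends on the Act's definitions and exclusions, so a `True` here only signals that legal review is needed.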

Figure 15 European Artificial Intelligence Act Article

Figure 16 European Union AI Act Geographic Application

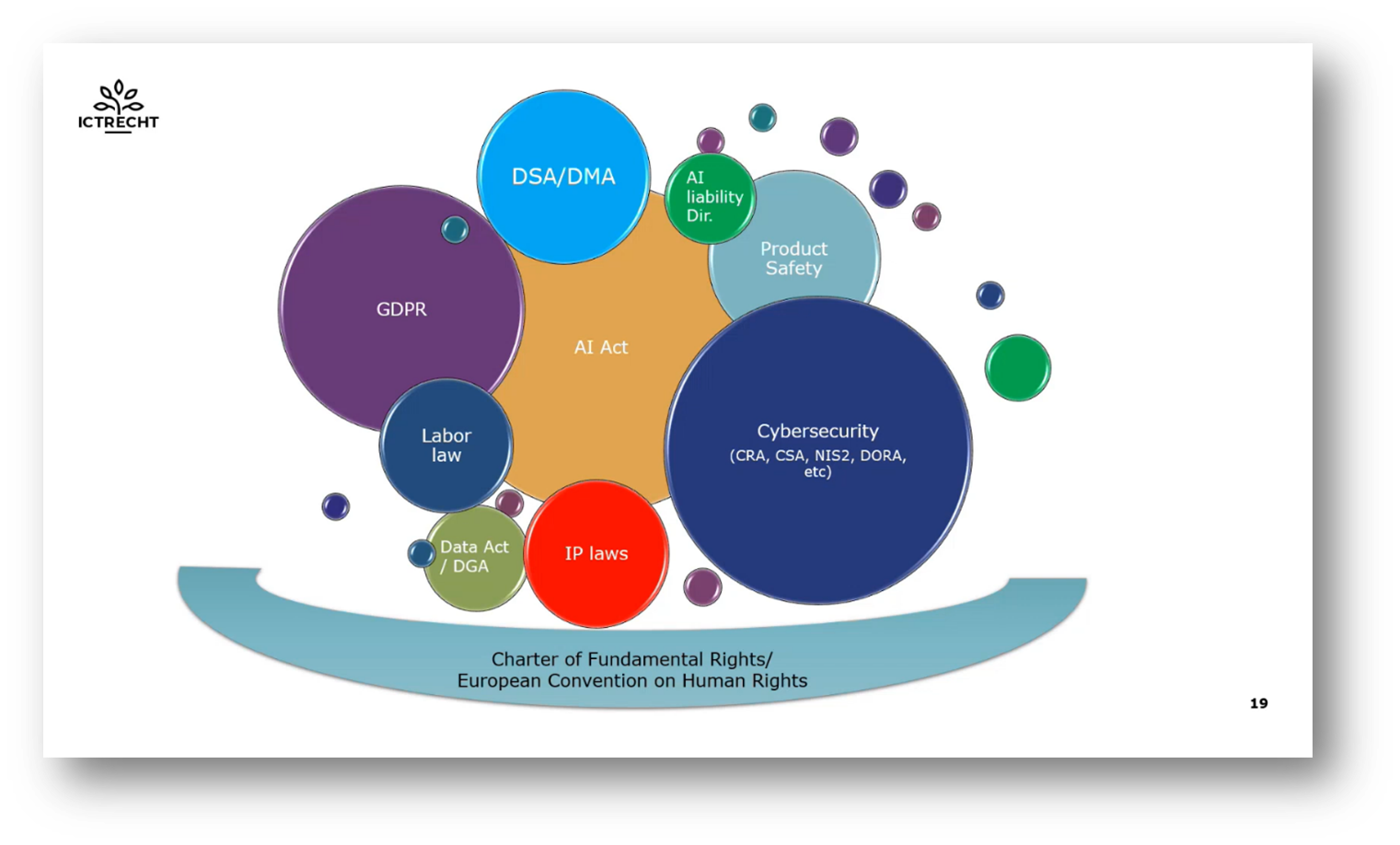

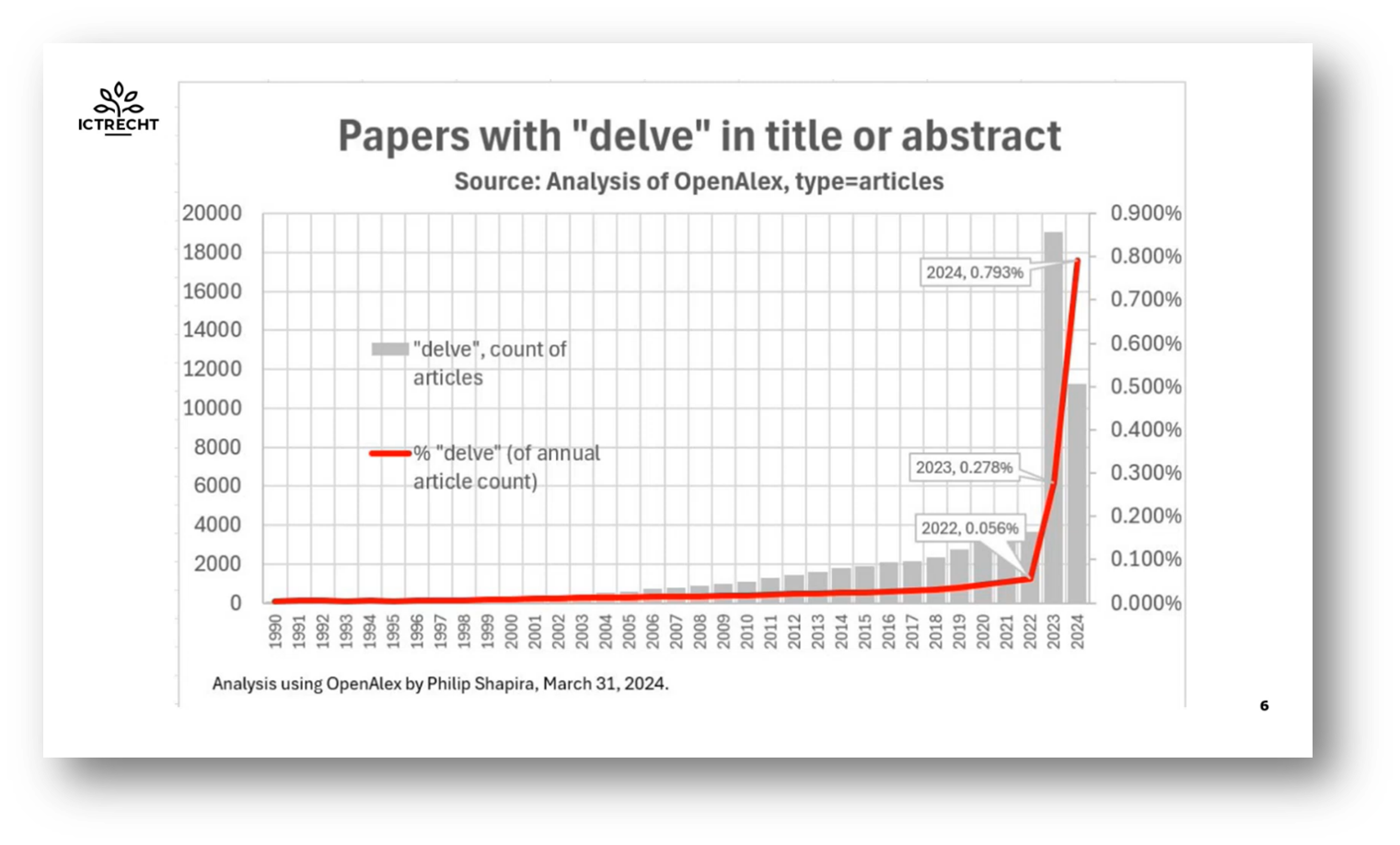

The European Union’s AI Act on Innovation

The European Union's AI Act has already had a noticeable impact since it entered into force. Although media reports suggested that Apple delayed launching AI features in Europe because of the AI Act's strict rules, the company's primary concern stems from a legal battle over opening up its App Store to third-party app stores. Besides the AI Act, the EU has adopted or is developing a range of other digital regulations, including the Digital Markets Act and laws on data, cybersecurity, intellectual property, and product safety. Complying with all of these laws is a complex challenge for legal professionals. As an aside, use of the word "delve" has increased notably in recent years, partly because AI models such as ChatGPT use it so frequently.

Figure 17 'Apple delays launch of AI-powered features in Europe, blaming EU rules'

Figure 18 Charter of Fundamental Rights / European Convention on Human Rights

Figure 19 Regulation (EU) 2024/1689 of the European Parliament and of the Council

Figure 20 AI's influence on use of word: "Delve"

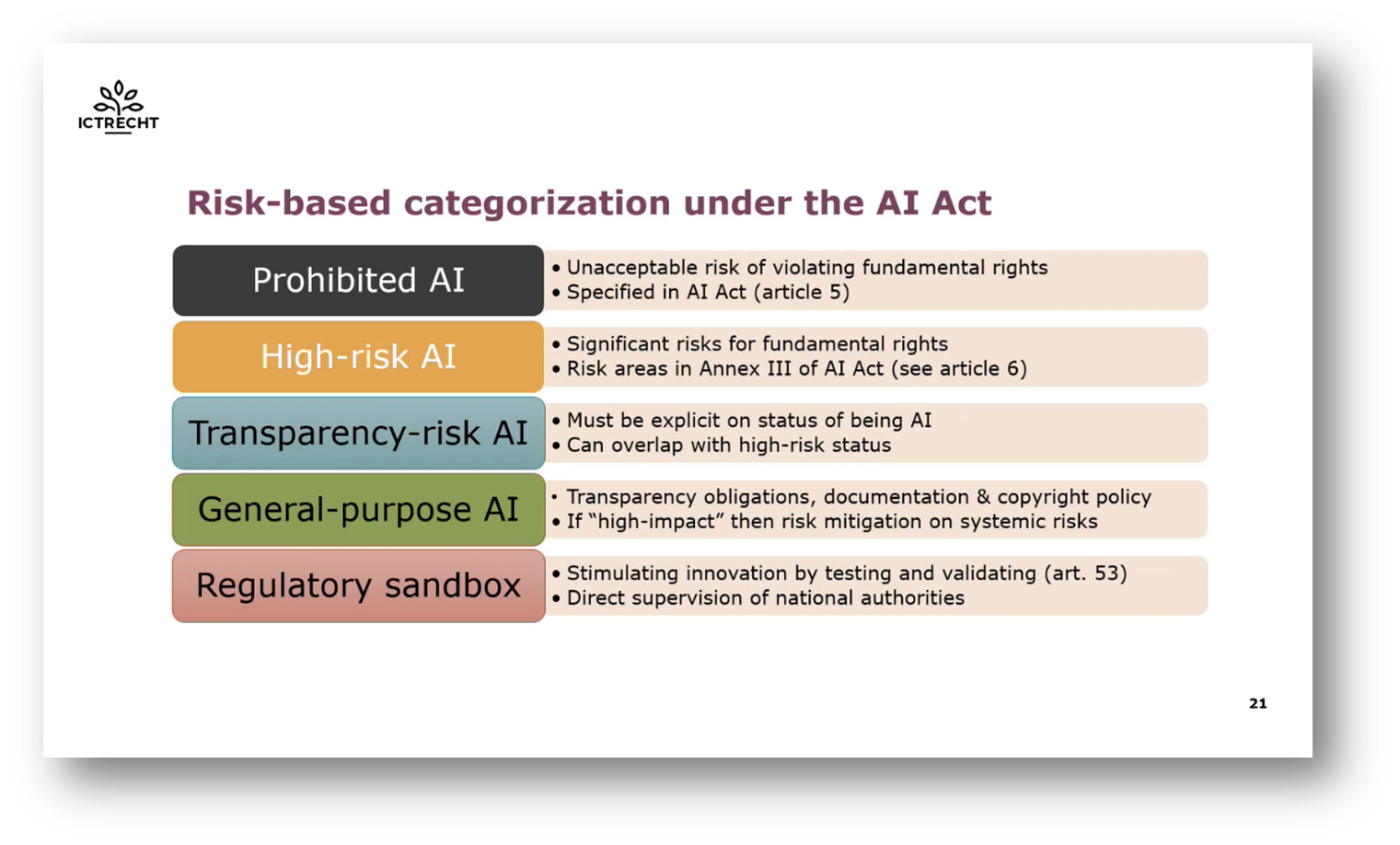

Understanding the Risk-Based Approach of the AI Act

The AI Act introduces a risk-based approach to regulating AI in the EU. It sorts AI into three main groups: prohibited AI, high-risk AI, and AI with transparency risk. Prohibited AI practices are banned outright, while high-risk AI is subject to extensive regulation. The Act aims to provide legal clarity by explicitly listing prohibited practices, in contrast to the more open-ended approach of the GDPR. It also addresses transparency risk by requiring AI systems to identify themselves as AI to users where necessary.
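The three groups are checked from most to least restrictive, which can be sketched as an ordered classification. In this Python sketch the three boolean inputs are hypothetical simplifications: in practice each one stands for a detailed legal test, and "interacts with people" is only one of several transparency triggers. The fallback tier covers systems outside the three groups described above.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"                 # banned outright
    HIGH_RISK = "high-risk"                   # extensive obligations
    TRANSPARENCY = "transparency risk"        # must disclose that AI is involved
    OTHER = "no specific AI Act obligations"  # outside the three groups


def classify(uses_prohibited_practice: bool,
             is_high_risk_use: bool,
             interacts_with_people: bool) -> RiskTier:
    """Return the most restrictive tier that applies.

    The checks run from strictest to least strict, so a system that uses a
    prohibited practice is reported as prohibited whatever else it does.
    """
    if uses_prohibited_practice:
        return RiskTier.PROHIBITED
    if is_high_risk_use:
        return RiskTier.HIGH_RISK
    if interacts_with_people:
        return RiskTier.TRANSPARENCY
    return RiskTier.OTHER


# A hypothetical chatbot used to screen job applicants.
print(classify(uses_prohibited_practice=False,
               is_high_risk_use=True,
               interacts_with_people=True).value)  # high-risk
```

Returning a single tier is a simplification: transparency obligations can apply on top of high-risk obligations, so a fuller model would return a set of applicable obligations.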

Figure 21 Risk-based Categorization under the AI Act

Figure 22 Prohibited Practices

The AI Act and Its Implications

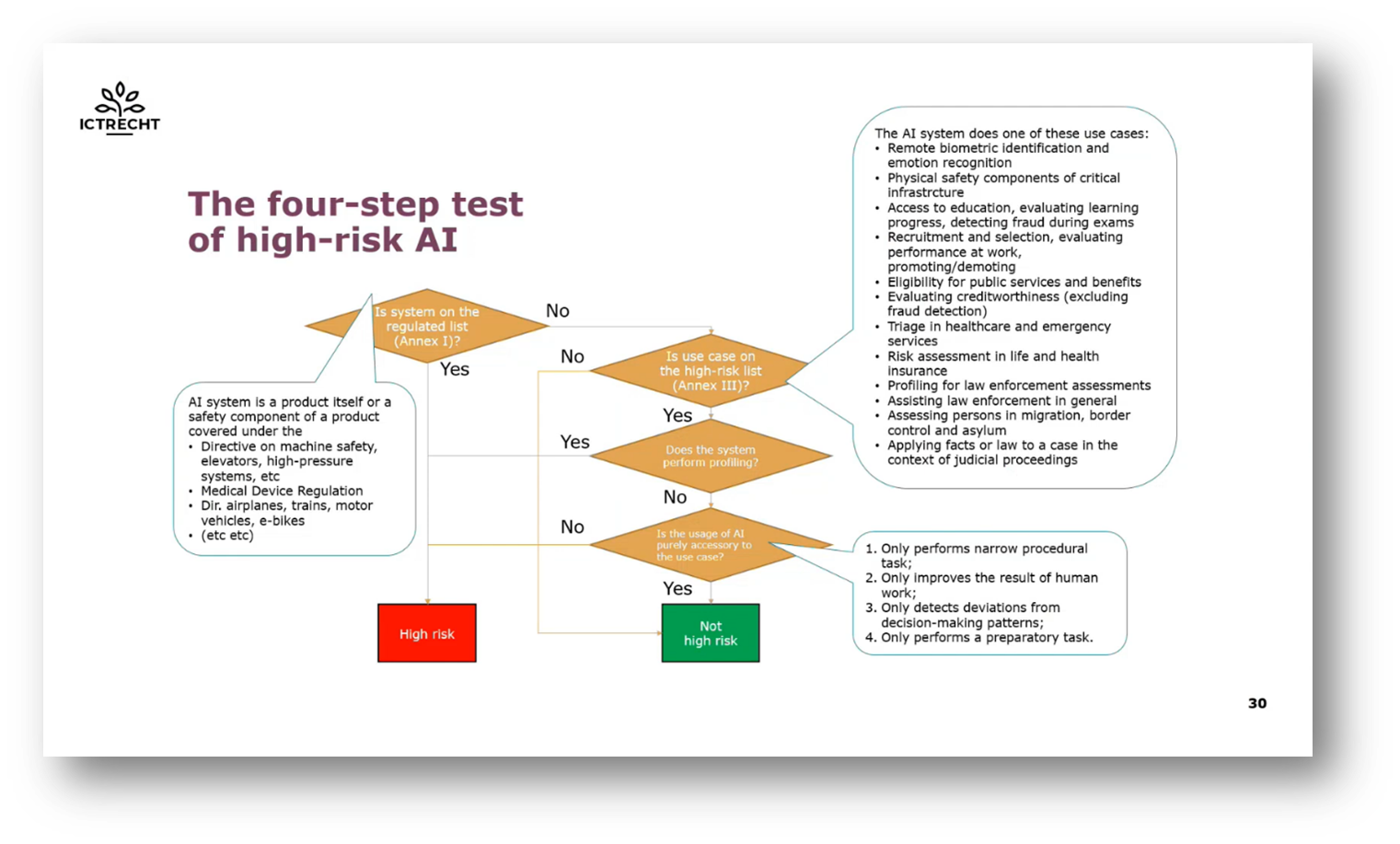

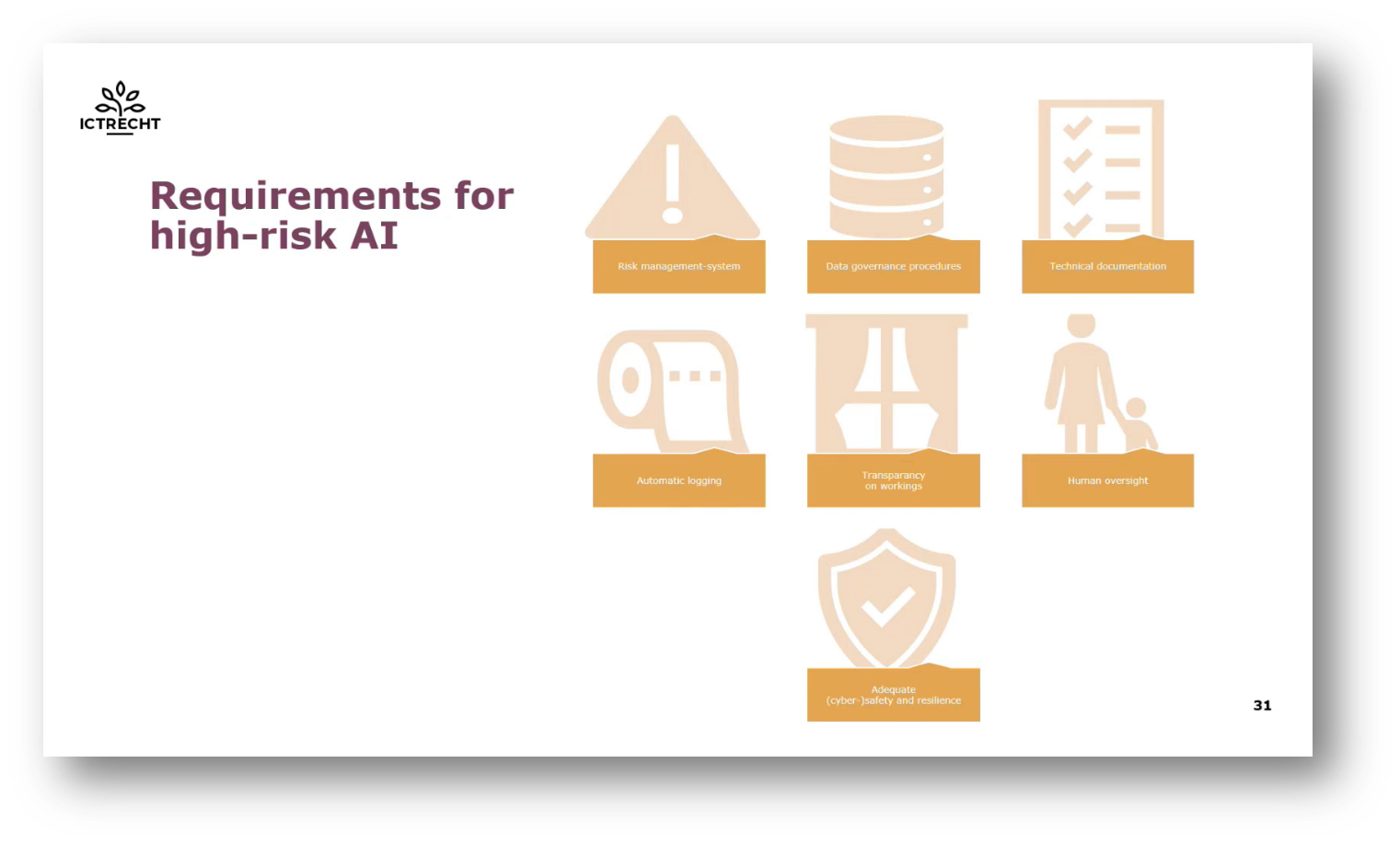

The AI Act lists eight prohibited practices, including manipulation or exploitation of individuals and emotion recognition in certain settings. The Act also covers biometric categorisation, profiling, and real-time remote biometric identification of people in public spaces, with specific considerations for high-risk AI systems used in regulated industries. If an AI system falls into the high-risk category, it must meet extensive documentation and monitoring requirements to demonstrate compliance with the Act's standards, which can be challenging because AI technology is so new.

Figure 23 The Four-step Test of High-risk AI

Figure 24 Requirements for High-risk AI

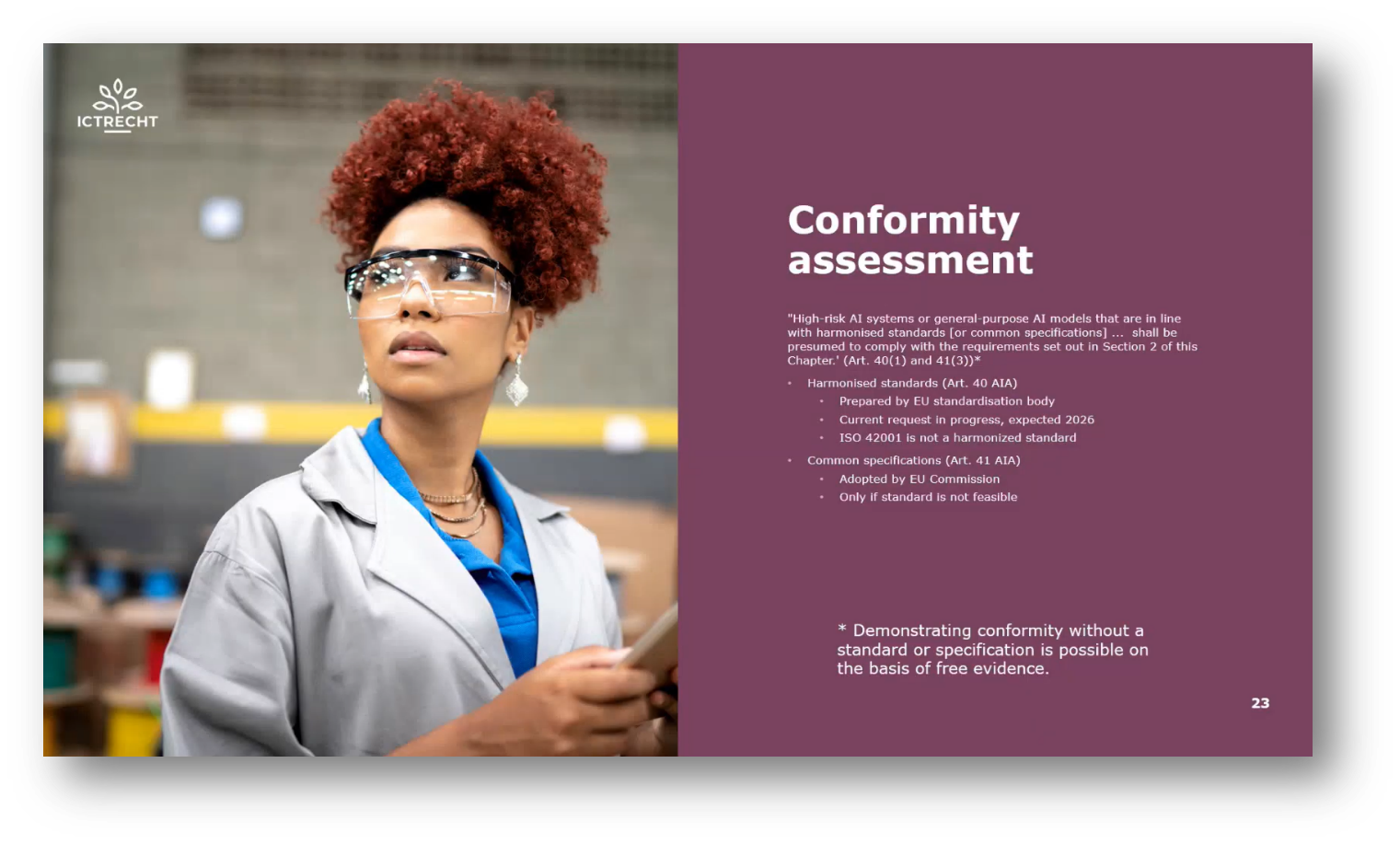

EU Conformity and AI Regulations

In Europe, products that comply with EU regulations bear the CE (Conformité Européenne) marking, indicating that they meet the relevant safety requirements. The marking can be found on plugs, batteries, and other products in the European Union, signalling that they are supposed to be safe. The EU has established harmonised standards for product safety, and a CE marking means the product's conformity with these standards has been assessed. The discussion also touches on the prohibition of AI-based emotion recognition in the workplace, given its potential impact and unproven nature.

Figure 25 Conformité Européenne

Figure 26 Conformity Assessment

Figure 27 "Serious Incident"

AI Technology: Concerns of a Social Credit System

Social credit systems, which have been banned in some jurisdictions, raise concerns about using AI to assign merits and demerits for various behaviours. Such a system could restrict people's access to transportation, education, and employment based on their social credit scores. Although the prohibition is quite narrow, any long-term merit or demerit system must still be fair and transparent, especially when used for employment evaluations and bonuses.

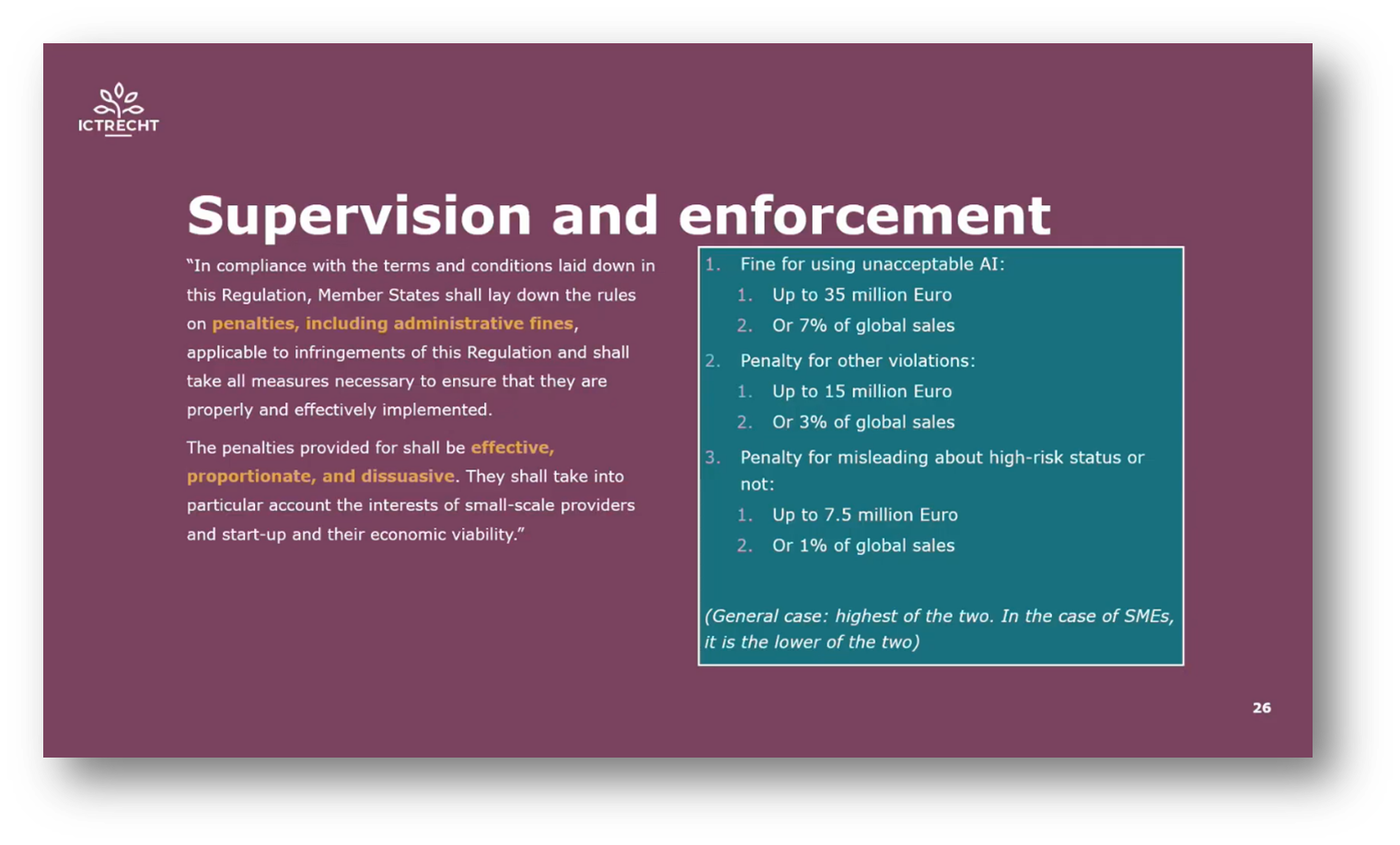

Compliance and Consequences of High-Risk AI Deployment in the EU

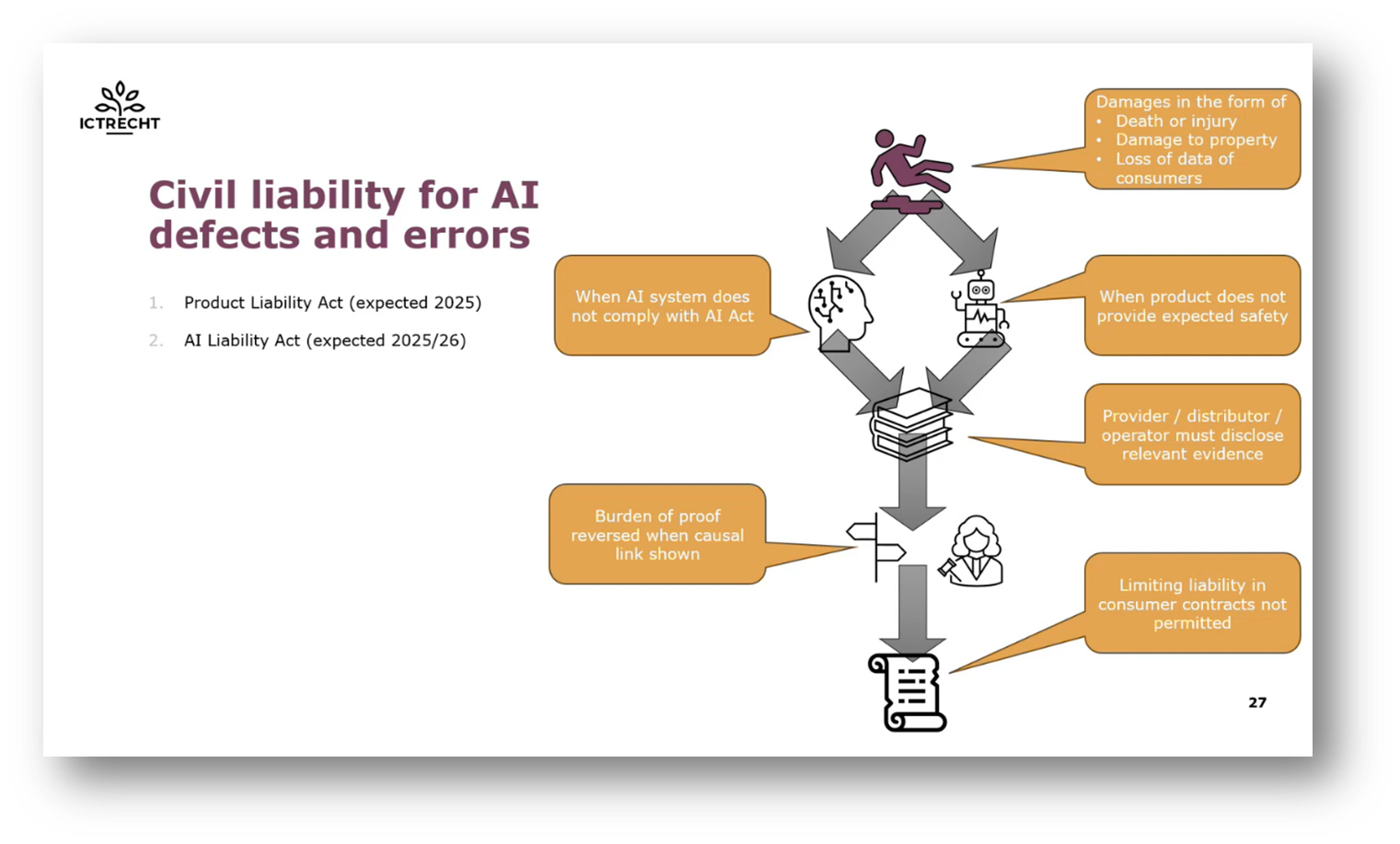

Placing high-risk AI on the EU market requires ensuring compliance with the applicable standards. Providers must also actively monitor the market for problems, intervene immediately when issues arise, and report them to the authorities. Failure to comply can result in fines of up to €35 million for prohibited AI, as well as civil liability, under which affected parties can sue for damages. Companies are advised to assess carefully whether their AI falls into the high-risk category and to take the necessary steps to avoid or manage this classification.
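The €35 million figure is a fixed ceiling, but it is not the whole rule. As we read Article 99 of Regulation (EU) 2024/1689, the cap for prohibited practices is €35 million or 7% of total worldwide annual turnover for the preceding financial year, whichever is higher, while for SMEs and start-ups it is whichever is lower. The Python sketch below computes that upper bound under those assumptions; the turnover figures are hypothetical, and actual fines are set case by case by the authorities.

```python
FIXED_CAP_EUR = 35_000_000  # fixed ceiling for prohibited AI practices
TURNOVER_SHARE_PERCENT = 7  # share of total worldwide annual turnover


def max_fine_prohibited_ai(annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of a fine for a prohibited AI practice, in whole euros.

    Larger undertakings face whichever cap is higher; SMEs and start-ups
    face whichever is lower. Integer arithmetic avoids rounding surprises.
    """
    turnover_cap = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    if is_sme:
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


print(max_fine_prohibited_ai(2_000_000_000))            # 140000000
print(max_fine_prohibited_ai(100_000_000))              # 35000000
print(max_fine_prohibited_ai(10_000_000, is_sme=True))  # 700000
```

For a large company the turnover-based cap quickly exceeds the fixed amount, which is why the percentage matters more than the headline figure.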

Figure 28 Supervision and Enforcement

Figure 29 Civil Liability for AI Defects and Errors

Figure 30 Human Oversight

Human Oversight in AI Deployment

When deploying AI, it is crucial to ensure proper human oversight, as the law requires. The specific oversight requirements vary with the circumstances, but some general guidelines apply. Oversight personnel must be well trained and able to override the AI system, and they may need to operate individually or as a group. Organisations must decide how humans are involved in decision-making and control: whether they intervene only when necessary or retain ultimate control. Evaluating the specifics of each situation helps determine the appropriate form of human intervention.

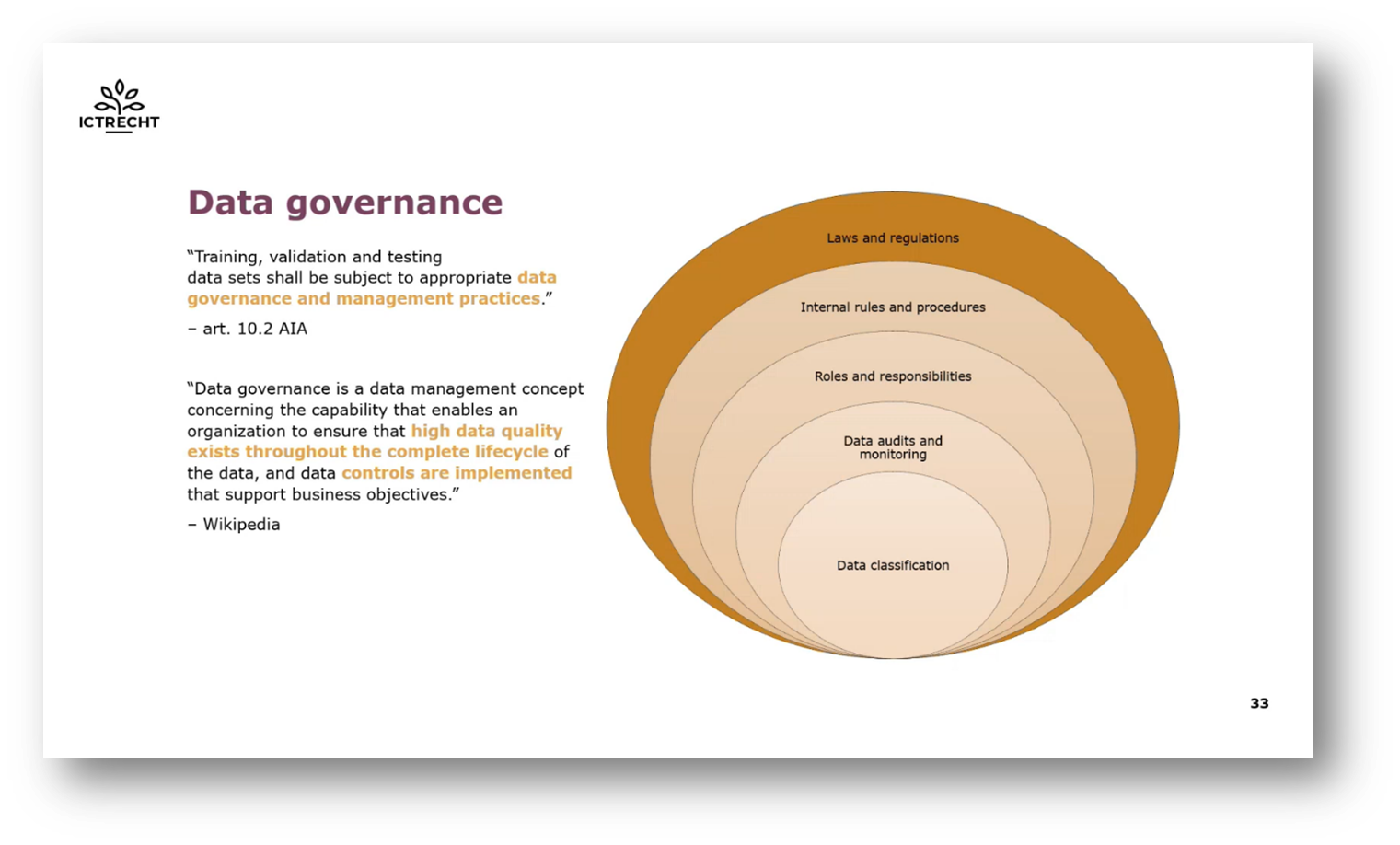

Figure 31 Data Governance

Data Governance and the AI Impact Assessment

Data Governance is crucial for AI systems because it ensures high data quality throughout the data lifecycle, with proper controls to maintain it. It encompasses the policies, procedures, and processing methods that support diverse, complete, and tested data sets. While ethical impact assessments are not mandatory for private entities, the AI Act requires public-sector deployers of high-risk AI to conduct Fundamental Rights Impact Assessments to mitigate potential harm to citizens. Proper Data Governance can help identify and address unintended issues in AI systems, such as a background removal algorithm that caused unintended consequences. In the European Union, deploying AI responsibly and effectively requires a strong focus on Data Governance.

Figure 32 Instance of Failure

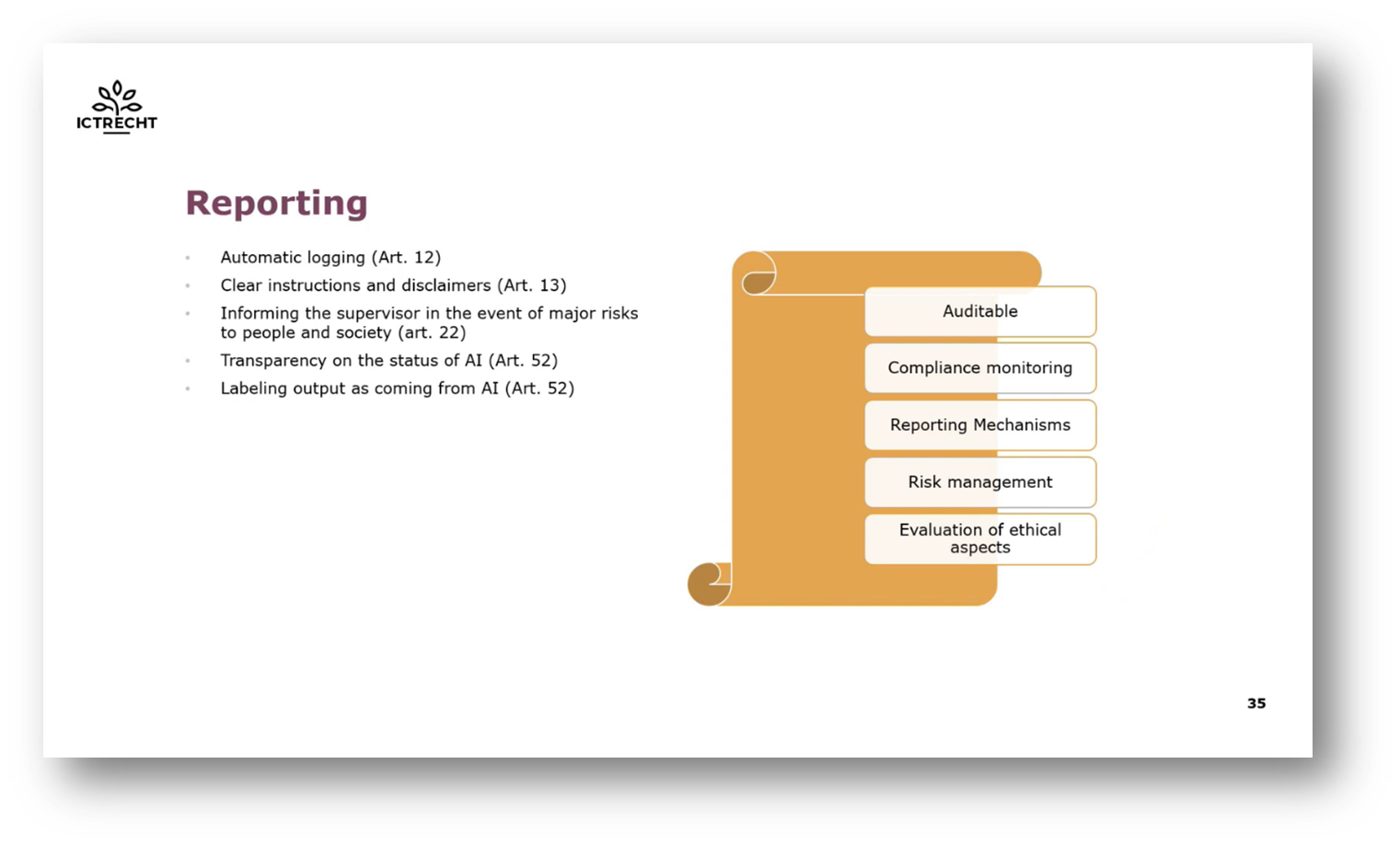

Figure 33 Reporting

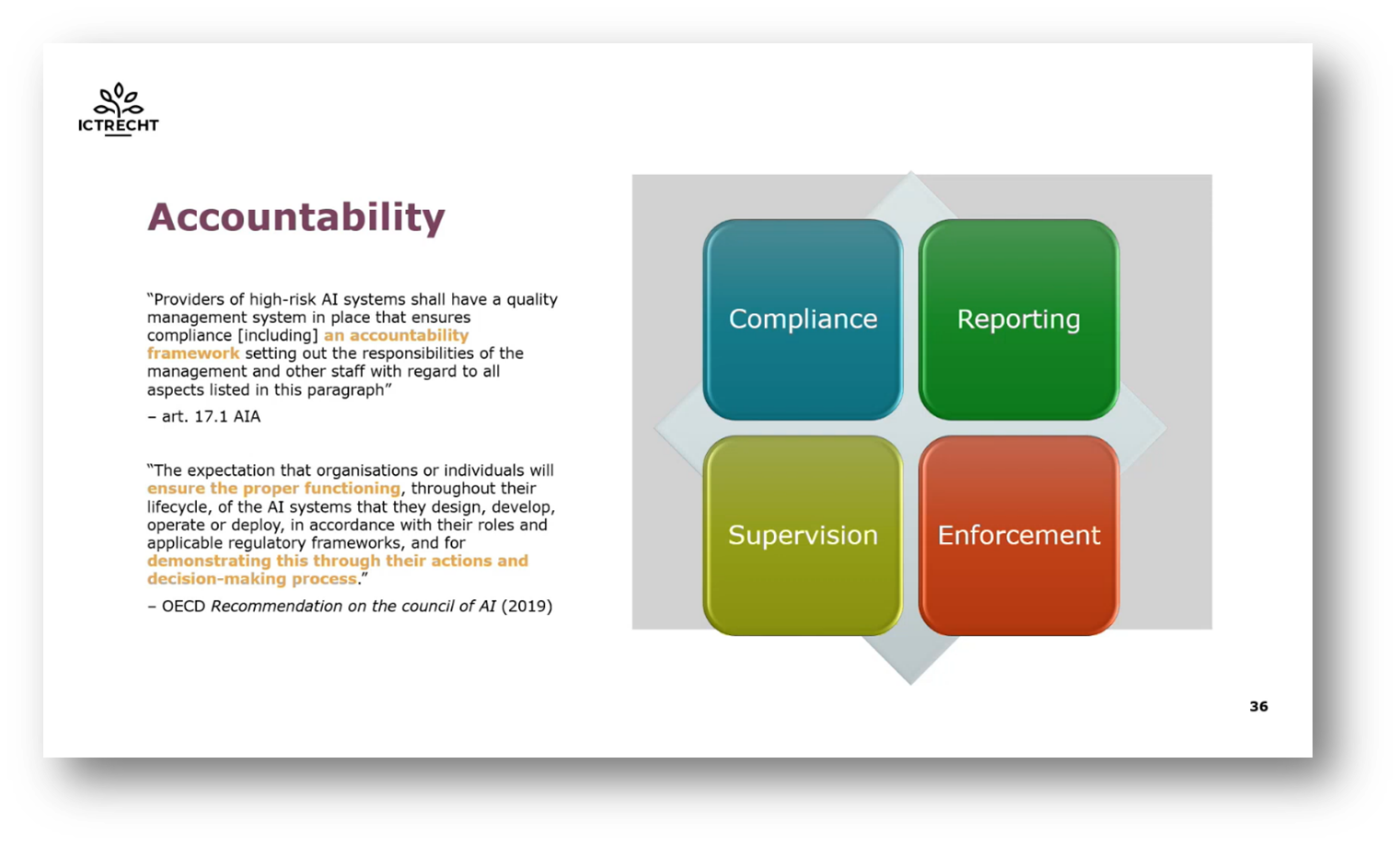

Accountability and Compliance in the Utilization of AI Systems

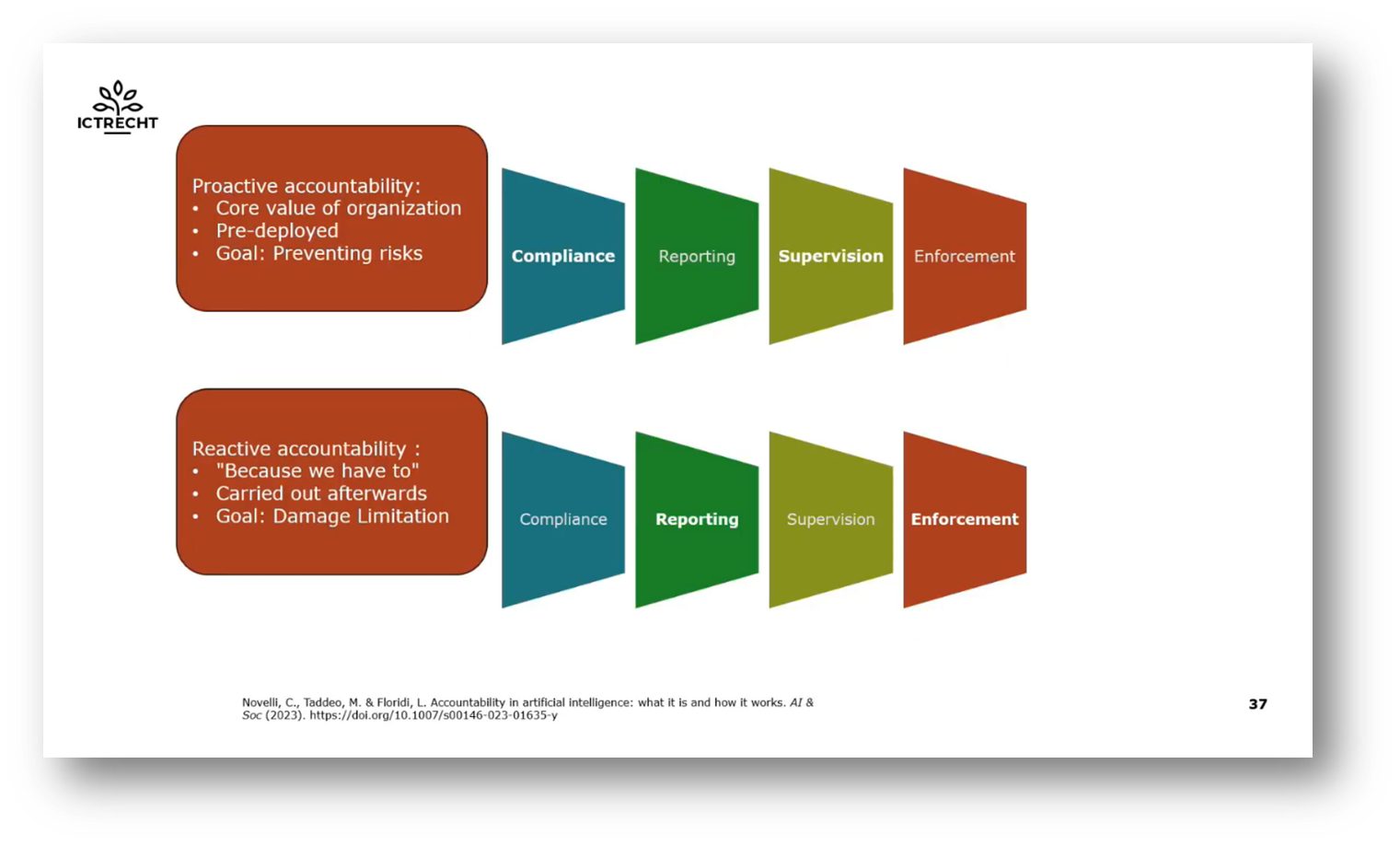

Companies need to ensure that their AI systems are accountable. This means being able to report on what the system does and to reconstruct the events that led to issues or risks. Accountability goes beyond legal compliance: it means taking responsibility for the system's actions, even where these fall outside what the law requires. Ideally, accountability is proactive, held as a core value of the organisation, with the accountability framework aligned to the company's other core values. This can be challenging, but establishing proactive accountability is crucial for ethical and responsible AI use.
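Being able to reconstruct events presupposes that each decision is recorded when it happens. The following Python sketch shows one way to do that with an append-only event log; the `DecisionEvent` schema, the `DecisionLog` class and the example values are hypothetical, and a production system would need durable, tamper-evident storage rather than an in-memory list.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionEvent:
    """One step in the life of a case handled by an AI system (hypothetical schema)."""
    case_id: str
    model_version: str
    inputs: dict
    output: str
    reviewer: Optional[str] = None  # person who confirmed or overrode the output
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


class DecisionLog:
    """Append-only, in-memory event log; production systems need durable storage."""

    def __init__(self) -> None:
        self._events: list[DecisionEvent] = []

    def record(self, event: DecisionEvent) -> None:
        # Events are only ever appended; there is no update or delete method.
        self._events.append(event)

    def reconstruct(self, case_id: str) -> list[dict]:
        """Return every event for one case, in the order it was recorded."""
        return [asdict(e) for e in self._events if e.case_id == case_id]


log = DecisionLog()
log.record(DecisionEvent("case-42", "v1.3", {"applicant_score": 0.41}, "reject"))
log.record(DecisionEvent("case-42", "v1.3", {"applicant_score": 0.41}, "approve",
                         reviewer="j.doe"))
print(json.dumps(log.reconstruct("case-42"), indent=2))
```

Recording the model version and the human reviewer alongside each output is what lets an organisation later explain who or what made a decision, and on which version of the system.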

Figure 34 Accountability

Figure 35 Proactive Accountability and Reactive Accountability

Figure 36 Accountability and Transparency

Ethical Challenges and Accountability in AI Adoption

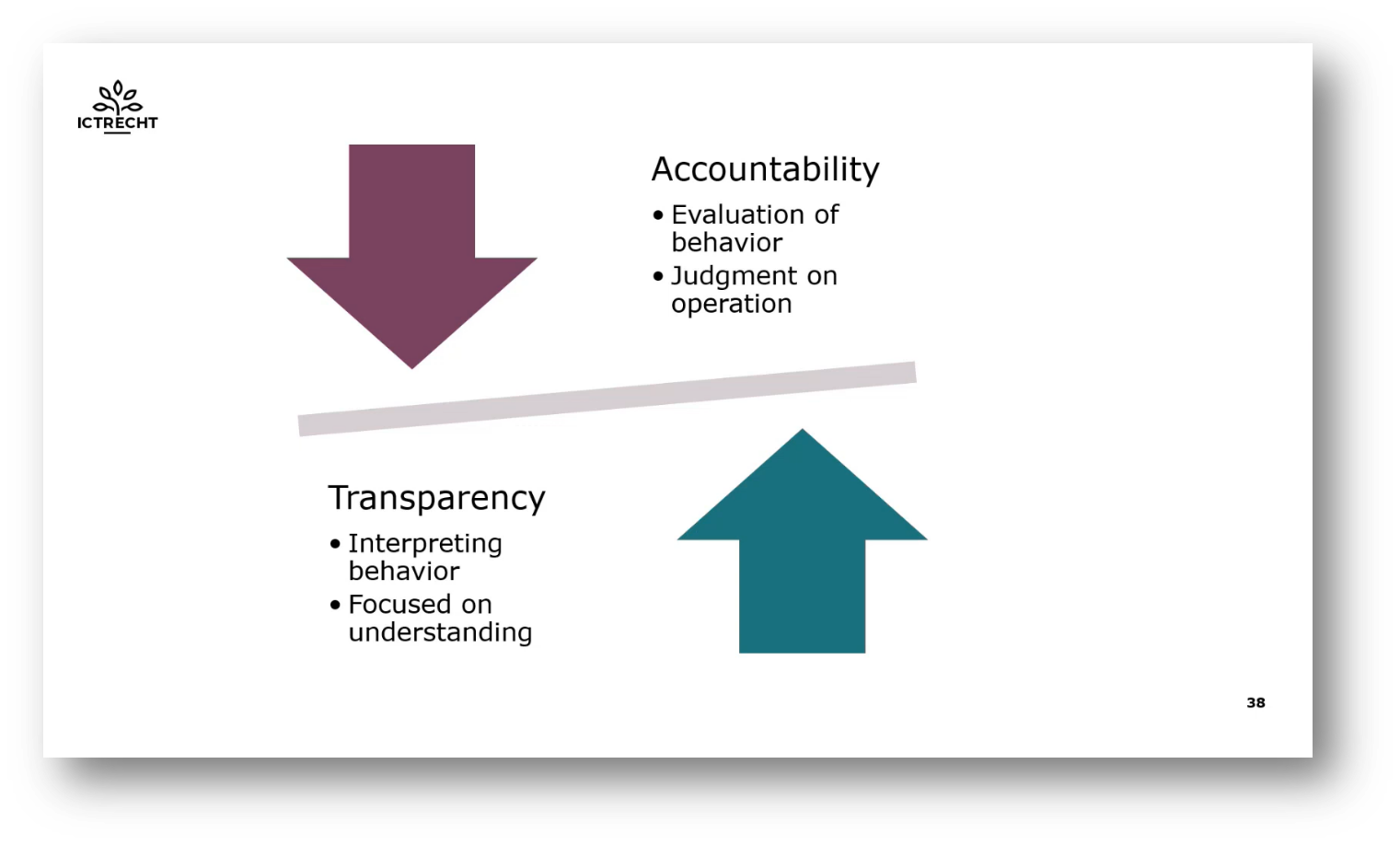

Microsoft reportedly dismissed its entire ethics team in March 2023, raising concerns about the ethical implications of its collaboration with OpenAI and the subsequent integration of Copilot into its products. The move reflects a broader trend, with other tech companies such as Meta and Google also facing scrutiny for similar actions. The challenge lies in balancing business goals, legal requirements, and ethical considerations, which highlights the need for trained AI compliance officers to ensure accountability and transparency in decision-making. Accountability goes beyond mere transparency: it involves justifying decisions, taking responsibility for outcomes, and continuously improving AI systems so that they operate ethically.

Figure 37 Legislation, Organization Goals, Ethical Guidelines and AI Policy

Figure 38 "What is an AI Compliance Officer?"

Figure 39 EU Framework for Trustworthy AI

The EU's Approach to Trustworthy AI

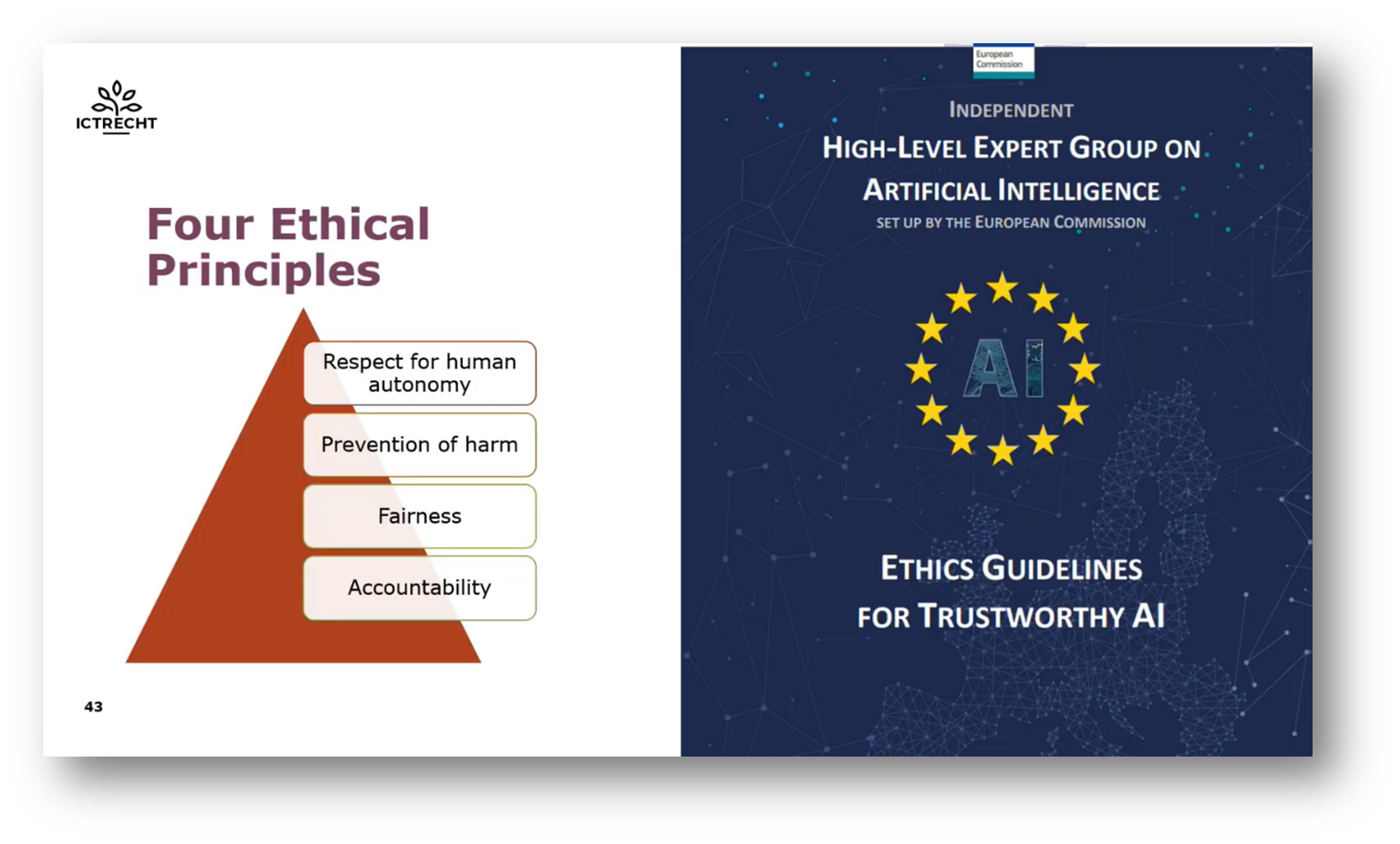

The European Union has established a framework for trustworthy AI built on three key elements: legality, ethics, and technical robustness. The EU's guidelines for Trustworthy AI, published in 2019, set out four ethical principles (respect for human autonomy, prevention of harm, fairness, and explicability) and seven requirements. These requirements have been translated into a self-assessment tool, the Assessment List for Trustworthy Artificial Intelligence (ALTAI), designed to help organisations integrate legal and ethical considerations into their AI projects. ALTAI supports an initial assessment, updates throughout a project, and a final evaluation, helping to ensure ethical compliance and track progress.
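Repeated ALTAI assessments lend themselves to simple progress tracking across the seven requirements of the 2019 guidelines. In the Python sketch below, the 0 to 5 scoring scale, the `progress` and `not_improved` helpers, and the example scores are all assumptions for illustration, not ALTAI's own format.

```python
REQUIREMENTS = (
    "Human agency and oversight",
    "Technical robustness and safety",
    "Privacy and data governance",
    "Transparency",
    "Diversity, non-discrimination and fairness",
    "Societal and environmental well-being",
    "Accountability",
)


def progress(earlier: dict[str, int], later: dict[str, int]) -> dict[str, int]:
    """Change in self-assessed score per requirement between two assessment rounds."""
    return {req: later[req] - earlier[req] for req in REQUIREMENTS}


def not_improved(earlier: dict[str, int], later: dict[str, int]) -> list[str]:
    """Requirements whose score stayed the same or dropped."""
    return [req for req, delta in progress(earlier, later).items() if delta <= 0]


# Hypothetical scores on an assumed 0-5 scale for an initial and an updated assessment.
initial = dict.fromkeys(REQUIREMENTS, 2)
update = {**initial, "Transparency": 4, "Accountability": 3,
          "Privacy and data governance": 1}
print(not_improved(initial, update))
```

Listing the requirements that have stalled or regressed gives the project team a concrete agenda for the next assessment round.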

Figure 40 Four Ethical Principles

Figure 41 Seven Requirements for Trustworthy AI

Figure 42 'AI and Algorithms: Mastering Legal and Ethical Compliance'

Figure 43 Closing Slide

Challenges of Personal Data Management Systems

Arnoud and the attendees discuss the challenges that large language models (LLMs) pose for managing data subjects' information and ensuring compliance with regulations such as the GDPR. LLMs do not store data as records but generate content based on statistical patterns, which leads to inconsistencies and inaccuracies when they refer to individuals. One way to address these issues is to implement guardrails that prevent the generation of potentially incorrect information, though this may limit the system's capabilities. Arnoud also notes that AI systems take contrasting approaches, with some providing extensive information and others prioritising minimal disclosure. The webinar closed with comments on the application of guardrails, particularly in relation to public figures.

If you would like to receive the recording, please contact Debbie (social@modelwaresystems.com).

Don’t forget to join our LinkedIn and Meetup data communities so you don’t miss out!