Data Quality Framework & Methodologies – Data Citizen

Executive Summary

The article discusses the various aspects of data quality management and implementation in a professional setting:

Topics covered include data governance, data quality strategy, and implementation framework

Comparison of data quality analysis and improvement areas

Importance of the implementation cycle, data quality assessment, and documented data quality expectations

Exploration of the DMAIC process for risk assessment and remediation

Definition and analysis of quality, measurement, and control

Discussion of quality control and process improvement techniques

Analysis of deliverables and frameworks in project evaluation

Discussion on data analysis and strategy implementation

Steps to ensure data quality and implementation, including data quality assessment process and root cause analysis and documentation process

Emphasis on the importance of data quality in company culture and achieving appropriate data accuracy for business value.

Webinar details

Title: Data Quality Framework & Methodologies – Data Citizen

Date: 13th July 2023

Presenter: Howard Diesel

Meetup Group: 3. Data Citizen

Write-up Author: Howard Diesel

Contents

Executive Summary

Webinar details

Data quality management and implementation cycle in a professional setting

Data Governance and Data Quality Strategy

Data Governance and Implementation Framework

Areas to Compare in Data Quality Analysis and Improvement

Notes on Data-Driven and Process-Driven Approaches

Quality Dimensions, Frameworks, and Information Systems

Implementation Cycle and Data Quality Assessment

Importance and Documentation of Data Quality Expectations

Implementing the DMAIC process for risk assessment and remediation

Defining and Analysing Quality, Validating Measurement, and Control

Quality Control and Process Improvement Techniques

Analysis of Deliverables and Frameworks in Project Evaluation

Data Analysis and Strategy Implementation

Steps in Ensuring Data Quality and Implementation

Data Quality Assessment Process

Root Cause Analysis and Documentation Process

Data Analysis Techniques and Tools

Discussion on Outliers and Data Analysis

Prioritising Data Management Approaches

Discussion on decision-making and decision inventory

The Importance of Well-Defined Outputs, Data Quality, and Cost Metrics in Data Governance

Error Rates and Quality Maintenance in Data Analysis

Achieving 100% Data Accuracy and its Value to Business

Importance of Data Quality in Company Culture

Data quality management and implementation cycle in a professional setting

Paul Bolton and Howard Diesel discuss the importance of understanding the various frameworks and methodologies covered in the data quality specialist exam, including DAMA, DMAIC, and the Juran Trilogy. Howard challenges the audience to choose or combine these frameworks and to explain their choices. They also cover data citizens, the implementation cycle, critical data elements, impact assessment, and data quality expectations and rules. Finally, they discuss defining a process and architecture for data quality, as well as executives and business cases, with examples from the Canadian Institute for Health Information (CIHI). Howard offers to review previous sections if needed.

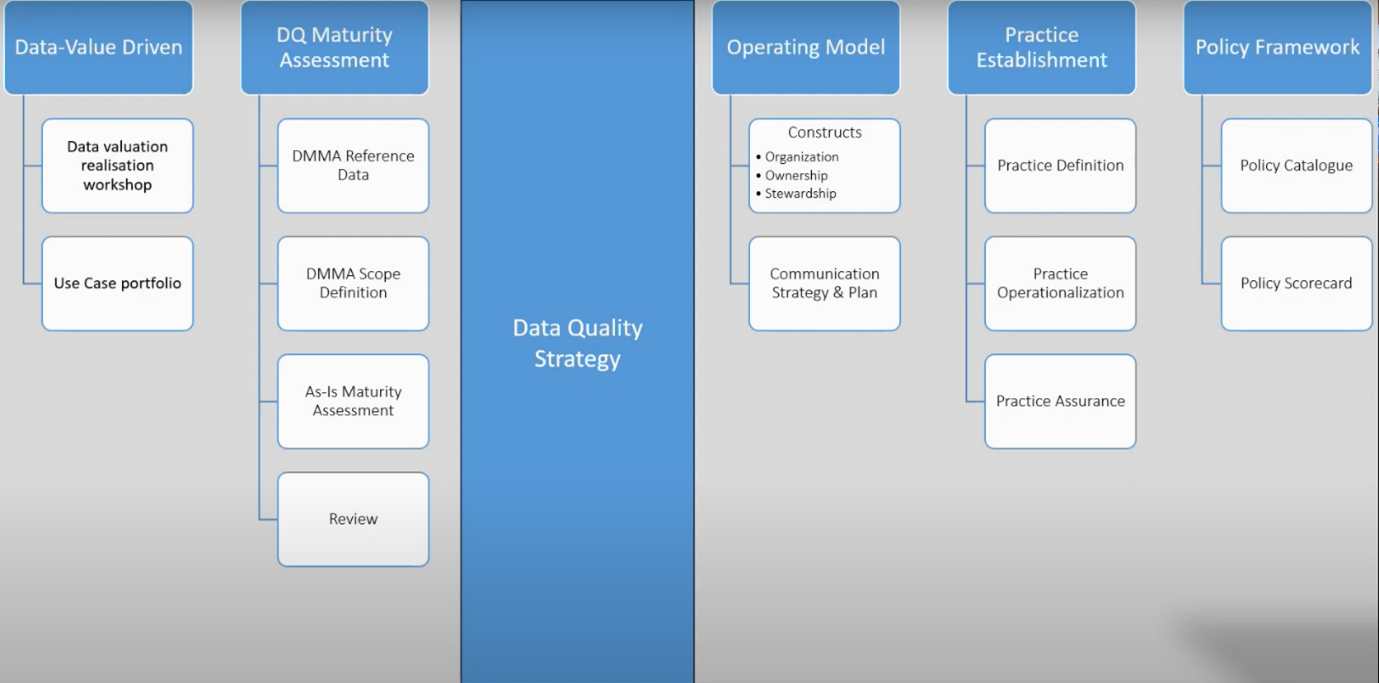

Data Governance and Data Quality Strategy

Karen requests a quick recap of establishing a data quality practice in data governance. Howard explains the hierarchy of business strategy, data strategy, and data management strategy, emphasising the alignment and derivation of data strategy from business strategy. He discusses the role of data quality in implementing the data strategy and defining the data quality domain and operating model, as well as the importance of defining principles, policies, procedures, and reference architecture in data governance.

Data Governance and Implementation Framework

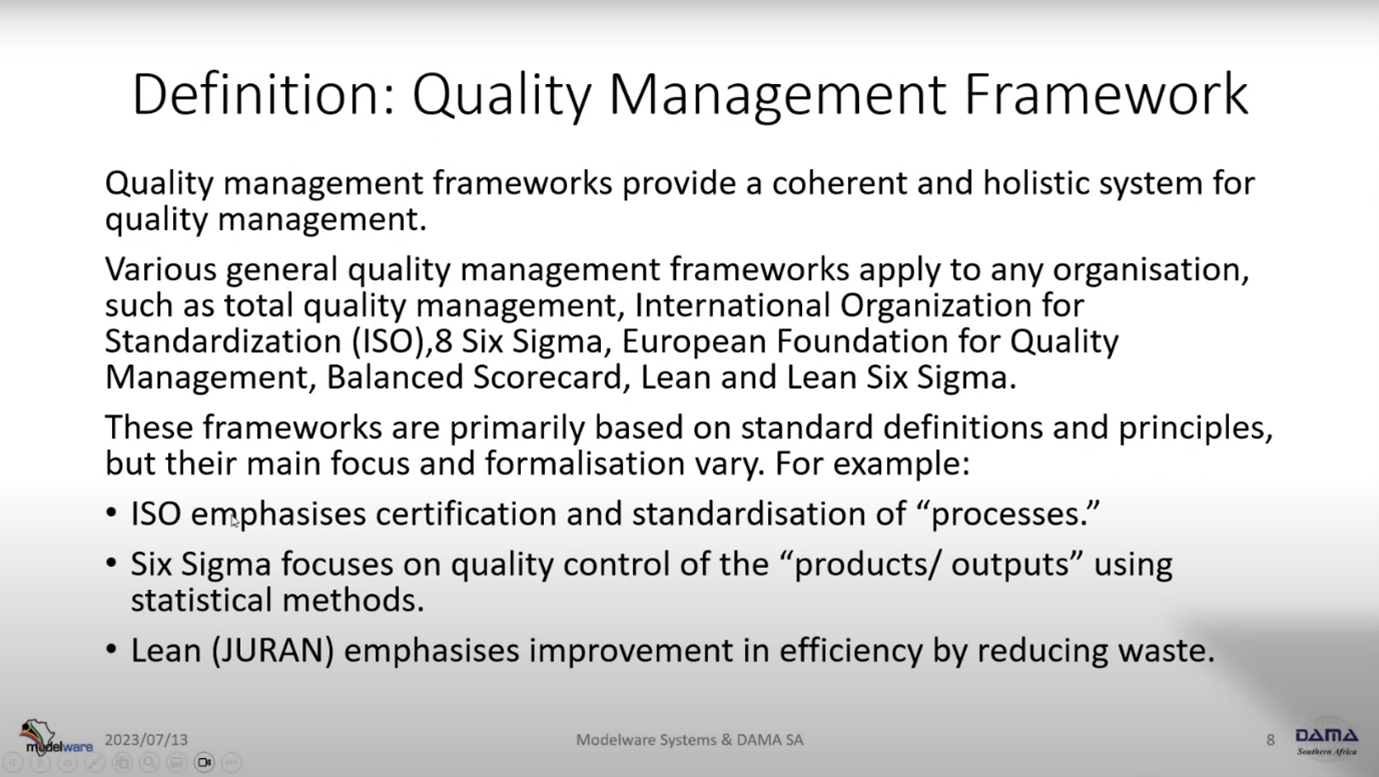

Effective policy implementation requires standardised procedures, training, and continuous review. DAMA promotes standardisation across domains and business units, while assurance ensures progress through optimised data governance. Various implementation frameworks, including ISO 8000 (notably ISO 8000-61), Six Sigma, and Juran, offer different approaches to certification, quality control, and waste reduction. The NDMO regulation standardises procedures across ministries in Saudi Arabia, and comparative perspectives provide context for the other frameworks.

Figure 1 Data Quality Game Plan

Areas to Compare in Data Quality Analysis and Improvement

Frameworks for improving data quality need to be assessed and compared on their techniques for dimensions, concepts, and cost reduction. Data quality should be evaluated and improved continuously, which means identifying the different data types, their compatibility, and the services available for analysis and improvement.

Figure 2 Definition of Quality Management Framework

Notes on Data-Driven and Process-Driven Approaches

Data-driven processes involve fixing data and changing data models, which can affect application performance. It is crucial to understand the processes that impact data quality. Dimension definitions may differ across frameworks, but using conformed dimensions like those recommended by Dan Myers is ideal. The cost of a DQ program includes reading materials on different data types and developing rules. Rule building and requirements analysis vary among frameworks, with CDQ being a comprehensive approach. Improvement strategies depend on the area or system being worked on.

Figure 3 Improvement Strategies & Techniques

Quality Dimensions, Frameworks, and Information Systems

This section compares the Quality Management (QM) and Loshin models, and the Quality, Cost, and Delivery (QCD) and Total Information Quality Management (TIQM) frameworks, in terms of quality dimensions, improvement assessments, and costs. Monolithic systems include Enterprise Resource Planning (ERP), while distributed systems include data management, fabric, and warehousing. Cooperative systems are used across different government organisations, and DaQuinCIS is used both within and outside an organisation. The framework review analyses how the other frameworks converge, and TIQM was also discussed. The Canadian Institute for Health Information (CIHI) differentiates between data quality and information quality in its use case. DQA uses both subjective and objective metrics to measure quality.

Figure 4 English & Loshin Comparison

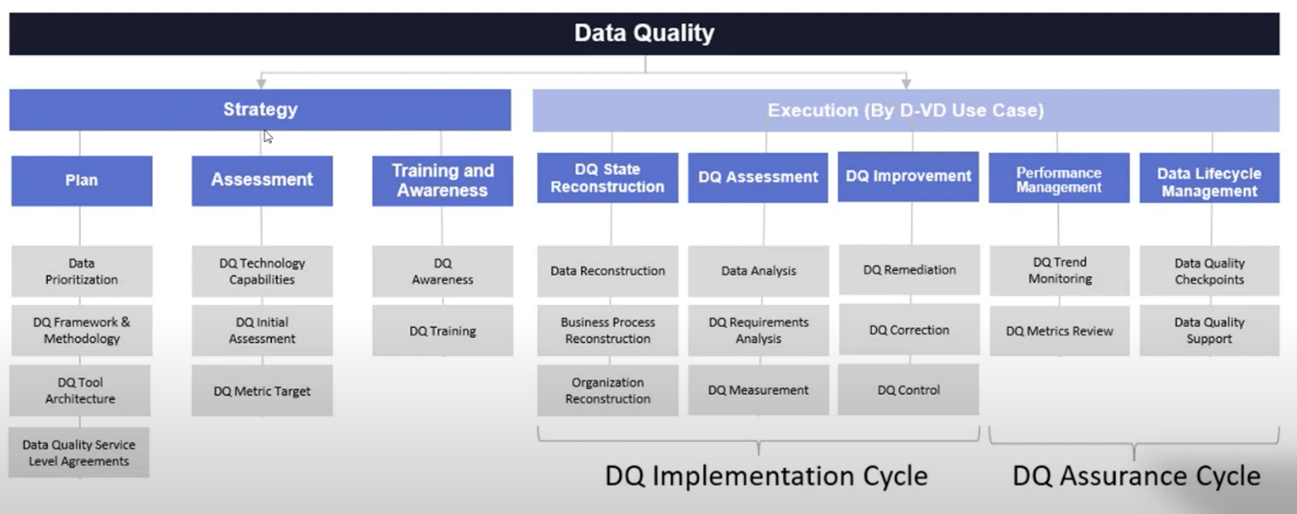

Implementation Cycle and Data Quality Assessment

Howard suggests harmonising different frameworks for implementing and assessing data quality. He emphasises the importance of understanding data quality issues and building metadata, lineage, and organisational structure before assessing data. Howard advises against relying solely on predefined business models and highlights the need for reconstructing states and assessing critical data. The assessment includes profiling and creating data quality requirements analysis. Finally, the data is evaluated based on expectations and data quality rules.

Figure 5 DQ Implementation Cycle

Importance and Documentation of Data Quality Expectations

The DMBOK lacks an interesting deliverable for data quality expectations. A combination of business rules, perspectives, and user requirements determines expectations. Data quality is measured, and issues are reported with root cause analysis using the Pareto principle. Expectations are not the measure itself but the level at which it should pass.
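
The distinction between a measure and an expectation can be illustrated with a minimal sketch. The rule, the sample values, and the 95% threshold below are hypothetical and were not given in the webinar; the point is only that the measured pass rate and the level it is expected to reach are recorded separately.

```python
from dataclasses import dataclass


@dataclass
class Expectation:
    """A documented expectation: a rule name plus the level it should pass at."""
    name: str
    threshold: float  # minimum acceptable pass rate, between 0 and 1 (assumed scale)


def pass_rate(values, rule):
    """The measure: the share of values that satisfy the rule."""
    values = list(values)
    if not values:
        return 0.0
    return sum(1 for v in values if rule(v)) / len(values)


def meets_expectation(measured, expectation):
    """The expectation is the level the measure must reach, not the measure itself."""
    return measured >= expectation.threshold


# Hypothetical data: email must be populated in at least 95% of records.
emails = ["a@example.com", "", "b@example.com", "c@example.com", None]
measured = pass_rate(emails, lambda v: bool(v))
expectation = Expectation(name="email_populated", threshold=0.95)
print(f"measured={measured:.2f}, passes={meets_expectation(measured, expectation)}")
```

Keeping the threshold in its own record makes it possible to revise the target later without changing how the measure is calculated.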

Figure 6 Definition & DQ Implementation Cycle BAU

Implementing the DMAIC process for risk assessment and remediation

Howard recommends involving internal audit in risk assessment and compliance, citing reliable sources and previous industry experience with segmentation. The implementation cycle includes planning, measurement, remediation, action, and review stages. Howard suggests revising targets instead of aiming for 100% compliance to avoid missed deadlines. The DMAIC process provides tools and techniques for each risk assessment and remediation stage.

Figure 7 DQ Expectation Template

Defining and Analysing Quality, Validating Measurement, and Control

Quality requirements are defined by listening to customer feedback. Achievable outputs and data collection methods are identified. The data is analysed using various methods, including the "five whys" technique. The importance of conducting a cost-benefit analysis for improvements is emphasised. Potential failures are assessed using failure mode and effects analysis (FMEA). The measurement system is validated, and rules are reset for accurate measurements. Control methods are shared once quality levels are reached. The meeting also covered the data quality specialist exam and the importance of excluding tasks that won't be pursued.
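
As an illustration of the failure mode analysis step, the sketch below ranks potential failures by a risk priority number (RPN). The RPN convention (severity x occurrence x detection, each scored 1 to 10) is a common FMEA practice rather than something specified in the webinar, and the failure modes and scores are hypothetical.

```python
def risk_priority_number(severity, occurrence, detection):
    """RPN = severity x occurrence x detection, each assumed to be scored 1-10."""
    for score in (severity, occurrence, detection):
        if not 1 <= score <= 10:
            raise ValueError("scores are expected on a 1-10 scale")
    return severity * occurrence * detection


# Hypothetical failure modes: (description, severity, occurrence, detection).
failure_modes = [
    ("Customer ID missing at capture", 8, 6, 3),
    ("Duplicate supplier records", 5, 7, 4),
    ("Wrong currency code on invoice", 9, 2, 7),
]

# Highest RPN first, so the riskiest failure mode is reviewed first.
ranked = sorted(
    failure_modes,
    key=lambda fm: risk_priority_number(*fm[1:]),
    reverse=True,
)

for description, *scores in ranked:
    print(description, risk_priority_number(*scores))
```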

Figure 8 Six Sigma DMAIC Process for DQ Projects

Quality Control and Process Improvement Techniques

This part of the session covered the Juran Trilogy, quality control zones, the Pareto principle, waste reduction, the PDCA cycle, Statistical Process Control, root cause analysis, and techniques for resolving quality issues under budget constraints.
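
Statistical Process Control can be sketched roughly as follows. This is a simplified illustration, assuming control limits at the baseline mean plus or minus three sample standard deviations (individuals charts in practice usually estimate the spread from moving ranges). The error-rate figures are hypothetical.

```python
import statistics


def control_limits(baseline, sigmas=3.0):
    """Return (lower, centre, upper) limits estimated from a stable baseline period."""
    centre = statistics.mean(baseline)
    spread = statistics.stdev(baseline)  # simplification: sample standard deviation
    return centre - sigmas * spread, centre, centre + sigmas * spread


def out_of_control(points, lower, upper):
    """Return (index, value) pairs that fall outside the control limits."""
    return [(i, p) for i, p in enumerate(points) if p < lower or p > upper]


# Hypothetical daily error rates (%) for a data feed during a stable period.
baseline = [2.1, 1.9, 2.0, 2.2, 1.8, 2.0, 2.1, 1.9]
lower, centre, upper = control_limits(baseline)

# New measurements are checked against the limits derived from the baseline.
new_points = [2.0, 2.3, 3.5, 1.9]
signals = out_of_control(new_points, lower, upper)
print(f"limits=({lower:.2f}, {upper:.2f}), signals={signals}")
```

Points inside the limits are treated as normal variation; points outside them are candidates for root cause analysis.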

Figure 9 Juran Trilogy

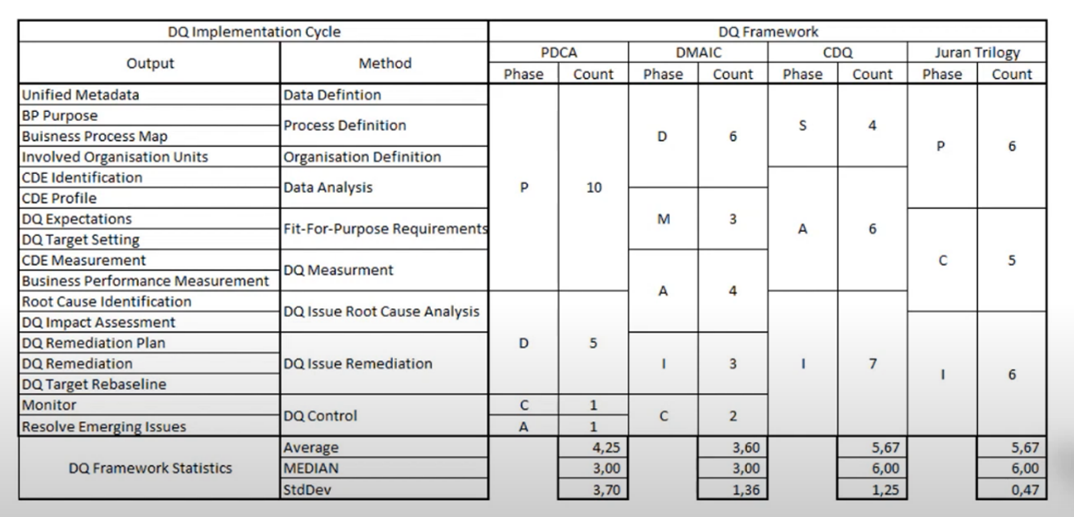

Analysis of Deliverables and Frameworks in Project Evaluation

Howard discusses the budget needed to fix problems and suggests analysing errors, causes, cost, and benefits. Different frameworks, including PDCA, DMAIC, CDQ, and Juran, are compared for their outputs. Howard highlights the importance of unified metadata, data lineage, data models, and business processes in project evaluation. Deliverables in PDCA are mainly concentrated in the planning and doing phases, with an average of 4.25 per segment of work. State reconstruction, assessment, and improvement show a better average number of deliverables compared to PDCA. The Juran Trilogy is one of the better frameworks, with planning, control, and improvement as its key areas. A participant seeks clarification on the meaning of PCI, PC, and PCD and learns that they represent the count of deliverables per phase.

Figure 10 Data Quality Framework Harmonisation & Analysis

Data Analysis and Strategy Implementation

Howard discusses the challenges of his current plan, including extensive upfront work and a small tail end. JG acknowledges the statistical outlier view of the Juran trilogy and the importance of aiming for the next set of outliers. Howard explores different frameworks for selecting a strategy and mapping it to standardised outputs. He also discusses working on a customer project, identifying critical areas, and setting targets. Data reconstruction is emphasised, including obtaining metadata, business glossary definitions, reference data, master data, and data models. Understanding business processes and data lineage is crucial, referencing a previous data flow analysis for data privacy. Organisation reconstruction and discussions on obtaining data from authoritative sources are mentioned.

Figure 11 Data Quality Practice Playbook

Steps in Ensuring Data Quality and Implementation

Howard discusses difficulties in fixing complex data, the importance of state reconstruction, and specific steps for data quality assurance involving data citizens and business data stewards.

Figure 12 DQ Practice Definition, Process Steps & Deliverables

Data Quality Assessment Process

The data stewards will engage in activities including reconstructing the data state, business processes, and organisation for each use case. They will distinguish between profiling data with statistical analysis and conducting data quality assessments. Data quality issues may arise during the profiling stage. The acceptability of the min-max range depends on the use case. Accurate identification of data issues requires high data quality expectations. Profiling can be automated using a SQL Server Agent job. Profiling results play a role in initiating data quality discussions and assessments. The assessment process includes raising and prioritising data quality issues. The 80/20 rule is used to prioritise data quality issues. Root cause analysis uses the fishbone technique, and the identified root causes are agreed upon.
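
The difference between profiling and assessment can be shown with a small sketch. It uses plain Python rather than the SQL Server Agent job mentioned above, and the column values and the 0 to 120 acceptable range are hypothetical. Profiling only describes the data; whether the min-max range is acceptable is decided per use case.

```python
def profile_column(values):
    """Profiling: basic descriptive statistics for one column, with no judgement applied."""
    non_null = [v for v in values if v is not None]
    return {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "min": min(non_null) if non_null else None,
        "max": max(non_null) if non_null else None,
    }


def outside_range(values, low, high):
    """Assessment: values that violate the range agreed for a specific use case."""
    return [v for v in values if v is not None and not low <= v <= high]


# Hypothetical "age" column.
ages = [34, 51, None, 29, 134, 51, -1]
profile = profile_column(ages)

# Assumed use-case rule: ages between 0 and 120 are acceptable.
suspect = outside_range(ages, low=0, high=120)
print(profile)
print(suspect)
```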

Figure 13 DQ Implementation Cycle (BAU)

Root Cause Analysis and Documentation Process

After conducting an assessment, ensure that all stakeholders are satisfied with the identified root causes, obtain agreement from all parties on the chosen course of action, and create a remediation plan based on the selected solution matrix (data-driven or process-driven). Conduct a cost-benefit analysis to determine the expenses and quality improvements of the proposed fix, and create failure mode, MoSCoW, progress, and optimal improvement plans. Document the revised DQ target and initiate the necessary fixes, striving to maintain the new level of performance. Utilise flow charts and techniques like critical data element prioritisation, cost taxonomy, and profiling tools to understand and resolve data-related problems. Finally, present findings using fishbone diagrams and check sheets to communicate issues and their origins effectively.
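
A cost-benefit comparison of remediation options might be sketched as below. All figures are hypothetical, and the model is deliberately simple (first-year benefit only, no discounting); it only illustrates weighing the cost of a fix against the expected reduction in the cost of the issue.

```python
def net_benefit(annual_cost_of_issue, expected_reduction, fix_cost):
    """First-year net benefit: the avoided cost of the issue minus the cost of the fix."""
    return annual_cost_of_issue * expected_reduction - fix_cost


# Hypothetical options for an issue assumed to cost 100,000 per year.
options = {
    "data-driven: cleanse existing records": net_benefit(100_000, 0.40, 15_000),
    "process-driven: validate at capture": net_benefit(100_000, 0.70, 40_000),
}

# Pick the option with the highest net benefit.
best = max(options, key=options.get)
for name, value in options.items():
    print(f"{name}: {value:,.0f}")
print("preferred:", best)
```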

Figure 14 Finding CDEs

Data Analysis Techniques and Tools

The check sheet helps identify and address data-related problems. Frequency distribution analyses data distribution and identifies areas of concern. Histograms visually present the frequency distribution of data. Power BI analyses data across segments and identifies issues. The Pareto chart prioritises fixing impactful issues. Scatter plots, flow charts, and box plots are additional data analysis tools. Identifying and quantifying outliers improves expectations and outcomes.
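
Two of these tools can be sketched in a few lines. The first function performs a Pareto-style selection of the issue categories that account for most logged issues; the second flags outliers using the 1.5 x IQR rule that box plots conventionally use. The issue log, the 80% cutoff, and the delay values are hypothetical.

```python
import statistics
from collections import Counter


def pareto(issue_log, cutoff=0.8):
    """Return the most frequent categories that together reach the cutoff share."""
    counts = Counter(issue_log).most_common()
    total = sum(n for _, n in counts)
    selected, running = [], 0
    for category, n in counts:
        selected.append(category)
        running += n
        if running / total >= cutoff:
            break
    return selected


def iqr_outliers(values, k=1.5):
    """Return values beyond k * IQR from the quartiles (the usual box-plot fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]


# Hypothetical check-sheet tallies expanded into an issue log.
issue_log = (
    ["missing value"] * 50
    + ["invalid format"] * 35
    + ["duplicate"] * 10
    + ["stale"] * 5
)
priority = pareto(issue_log)

# Hypothetical arrival delays in minutes, with one extreme value.
delays = [5, 7, 6, 8, 7, 6, 9, 60]
outliers = iqr_outliers(delays)
print(priority, outliers)
```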

Figure 15 Data Frequency Distribution Measurement

Discussion on Outliers and Data Analysis

Paul Bolton and Howard Diesel investigate an outlier in the data causing arrival delays and consider other out-of-control factors. They conclude that the data cannot support forecasting to 2050 but discuss its usefulness for analytics and other applications, including massive CDs.

Prioritising Data Management Approaches

Paul Bolton recommends using a use-case approach to prioritise and target specific areas for improvement in data management. Howard Diesel agrees and stresses the importance of not trying to tackle too many aspects at once. They propose starting with data warehouse reports or dashboards as recognisable issues that data citizens can trust. Howard emphasises the significance of critical data elements and suggests starting with reports if a use-case approach is not feasible. Bolton acknowledges the effectiveness of starting with reports and expresses gratitude for the insights. Howard warns against starting at the application level, as it can take longer to reach the desired outcome.

Figure 16 Display the shape of CDEs

Discussion on decision-making and decision inventory

Howard stresses the importance of critical data elements in supporting decision-making. He suggests factoring in the cost of a wrong decision when analysing a report. Paul agrees and finds it easy to quantify the cost of a wrong decision. Howard mentions the challenge of defining all decisions and the need for scenario planning. He refers to a decision inventory that links to the enterprise system involved in making decisions. Howard offers to chat further after the meeting. He also mentions his review work and experience with different frameworks.

The Importance of Well-Defined Outputs, Data Quality, and Cost Metrics in Data Governance

Having clearly defined outputs facilitates comparing different cycles and frameworks. Due to business alignment, it is easier to address data quality than data governance. The business already recognises and accepts the need to improve data quality. Cost metrics can justify the expenses of data quality programs when issues are quantified. Automation and appropriate technology tools are essential for efficiently fixing data quality issues. Linking data quality to risk management is crucial for addressing broader risks. Some health professionals may underestimate data quality's importance.

Error Rates and Quality Maintenance in Data Analysis

Hesham is concerned about high error rates in data analysis and the need for improvement. Howard Diesel acknowledges the issue and questions the tolerance for errors and the decision-making process. Hesham and Howard discuss the difficulty in reaching and maintaining 100% data quality. Howard compares the quest for bug-free programs to attaining error-free data, highlighting the challenges. Hesham appreciates the discussion and plans to provide feedback on LinkedIn. Hesham requests the complete recording and slides, and Howard assures him they are available. Howard brings up the dimension of accuracy in data analysis as a further discussion point.

(Editor's note: if you would like to watch the recording, please get in touch with us at social@modelwaresystems.com)

Achieving 100% Data Accuracy and its Value to Business

Hesham questions the possibility of achieving 100% accuracy and its relevance to the real world. Howard argues that aiming for perfection and overspending may not lead to 100% accuracy. Hesham wonders if 95% accuracy is sufficient and asks about the value of the remaining 5% to the business. They agree that 100% accuracy may not be necessary for all data sources, citing the example of tables with optional columns. Mayela shares her experience, stating that achieving 100% data accuracy is challenging, and the target can vary over time. Discussing the importance of data culture in a company is a separate topic.

Importance of Data Quality in Company Culture

Howard stresses the importance of data quality in company culture. He agrees with the previous discussion on data quality's role in planning and controlling practices. Howard mentions the significance of subjective and objective measures in understanding data quality. He acknowledges the need for a change in perspective and for an environment that builds awareness, desire, and knowledge.

If you have enjoyed this blog and would like to watch the recording for more information, please get in touch with us on LinkedIn or at social@modelwaresystems.com.

Remember to RSVP on Meetup and join a community of fellow data professionals to be a part of the discussion. We are excited for you to join us!

Don’t forget to join our LinkedIn and Meetup data communities so that you don’t miss out!