DMBOK Revised Edition – Episode 2

Executive Summary

‘DMBOK Revised Edition – Episode 2’ covers CDMP examination procedures and a range of data management topics. Howard Diesel covers the transition to the new exam platform, D2L, and provides online quiz procedures and navigation information. Additionally, he discusses the evaluation and exam procedures for D2L, exam feedback, and technical issues. The webinar discusses the changes to data quality dimensions, data integration, data lineage, and metadata. It emphasises the significance of understanding data quality and information security regulations, and the role of cardinality and optionality in data modelling relationship types. Lastly, Howard highlights the essential points for data science and ethics exam preparation, the importance of using a dictionary in data management, and data lineage tools.

Webinar Details:

Title: DMBOK V2 Revised Edition – Episode 2

Date: 15 April 2024

Presenter: Howard Diesel

Write-up Author: Howard Diesel

Contents

Executive Summary

Webinar Details

Transition to New Exam Platform

Canvas Platform and Practice Exam

Online Quiz Procedures and Navigation

D2L Evaluation and Exam Procedures

Exam Feedback and Technical Issues

Data Management Fundamentals Practice Exam Feedback

Test Taking Strategy

Exam Process Notes

CDMP Exam Preparation

Exam Preparation Notes

Data Quality Dimensions

Data Quality Dimensions and Beach Inspection

Data Element Validation

Data Integration and Interoperability

Data Lineage and Metadata

Data Lineage and Business Glossary

Understanding Data Quality and Information Security Regulations

Regulation and Data Modeling

Cardinality and Optionality in Relationship Types

Key Points for Data Science and Ethics Exam Prep

Data Lineage Tools

Data Lineage and Dependency in PowerBI

Importance of Using a Dictionary in Data Management

Transition to New Exam Platform

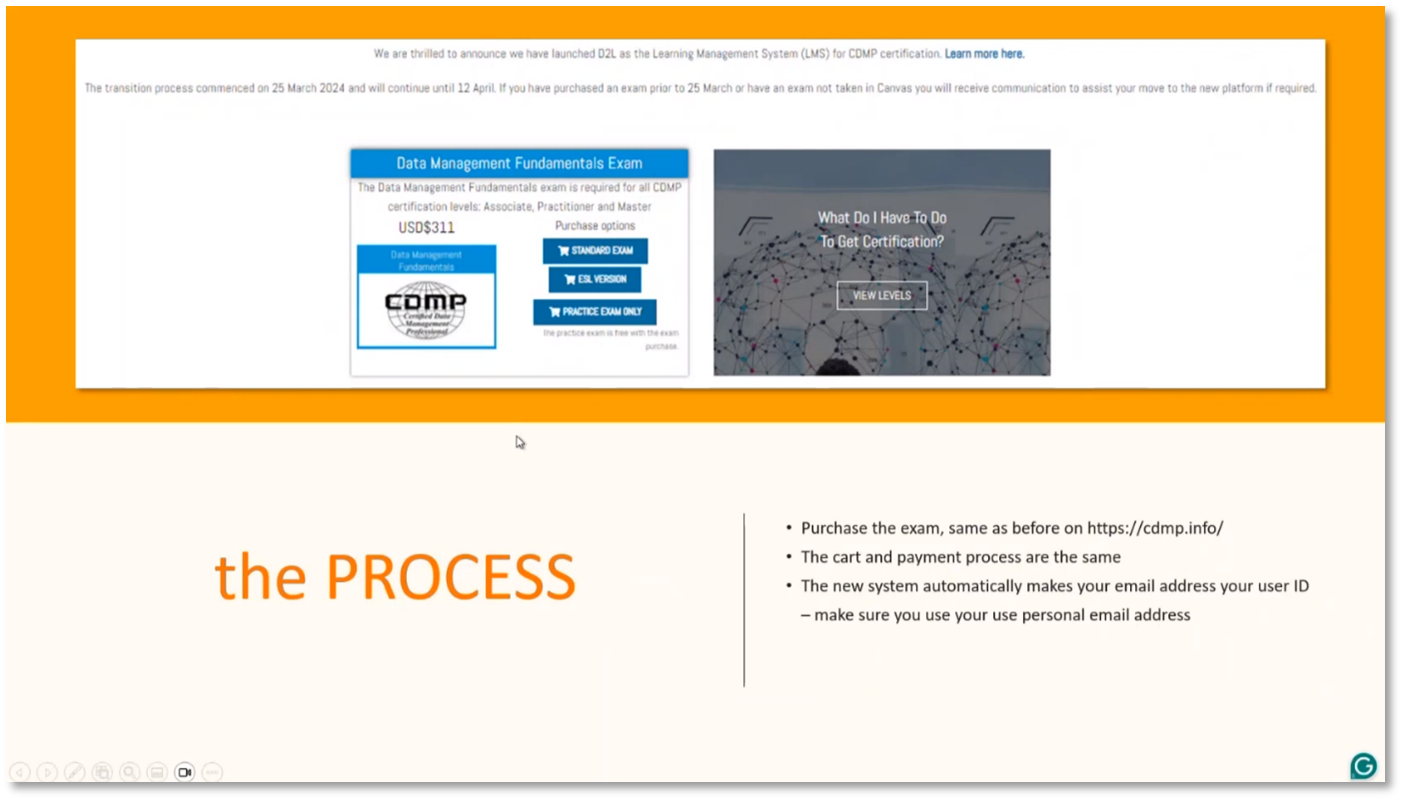

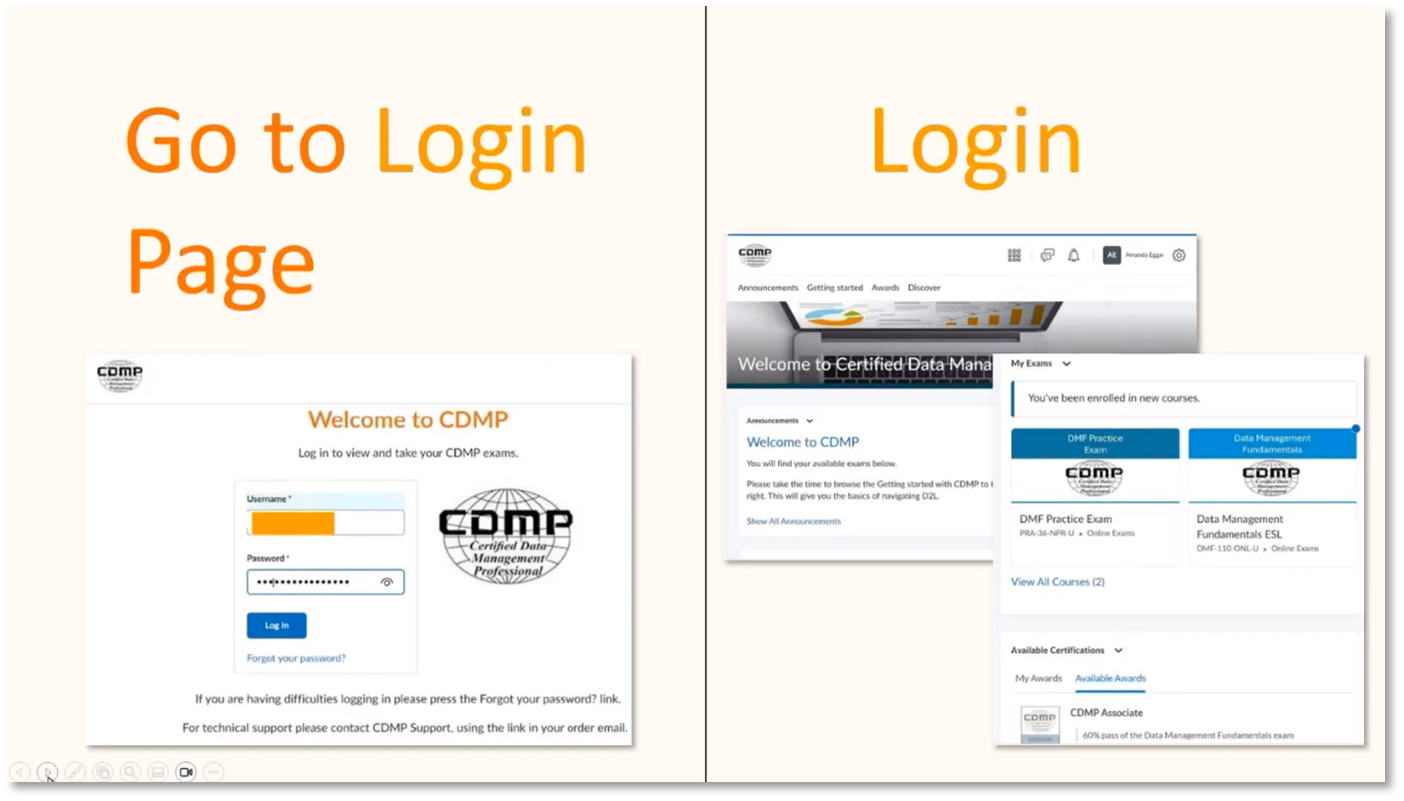

Howard Diesel opens by introducing important information regarding the CDMP certification program. He shares a 61-slide deck that compares knowledge areas. The new platform, D2L, will go live in three days and will affect exam registrations and administration during the transition period. Individuals who have already taken exams on Canvas will still need to set up an account on D2L. Tips and tricks for using D2L will be provided, along with a technique for answering Master Level questions. To take the exam, individuals must purchase it on cdmpdo.info and then register on the exam platform, which involves setting up a D2L account, logging in for the first time, and setting up a password and profile.

Figure 1 New platform D2L Demo

Figure 2 The D2L Process

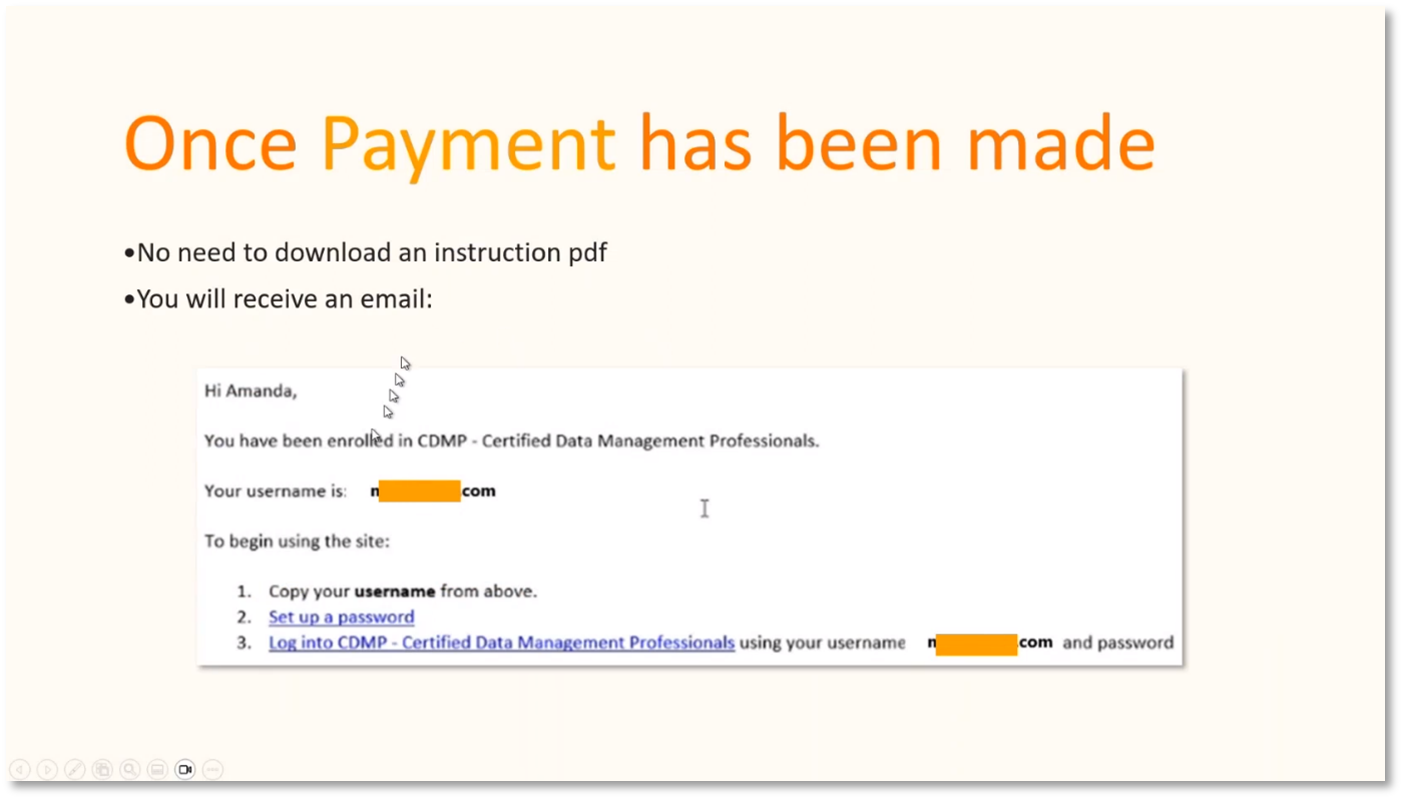

Figure 3 What to do Once the Payment has been made

Canvas Platform and Practice Exam

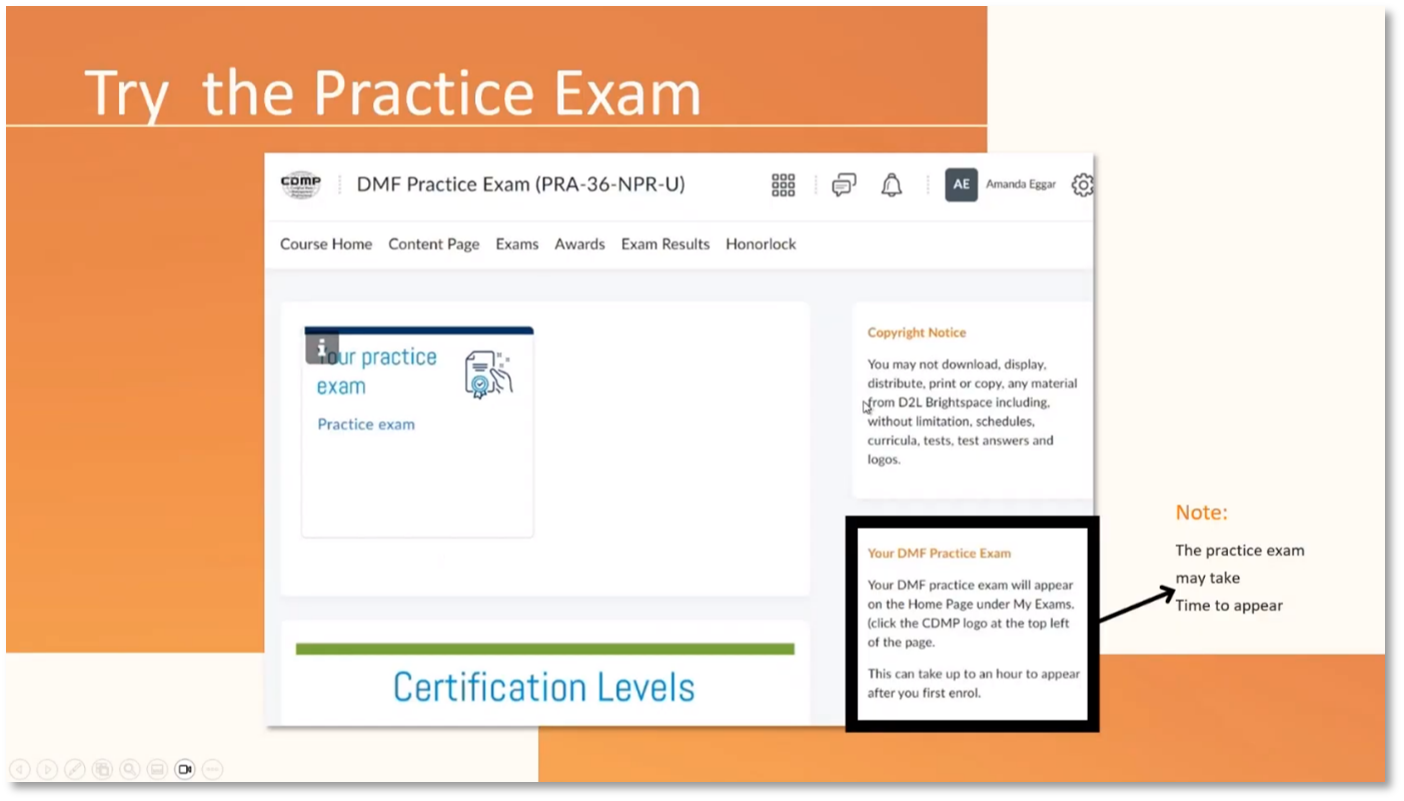

Users are advised to use a personal email address to avoid communication and access issues when logging into the platform. The platform includes a practice exam and data management fundamentals. English as a Second Language (ESL) users from Saudi Arabia, the Gulf area, and Africa are given an extra 20 minutes due to language differences. Users may experience a delay in accessing the practice exam, and they navigate to the exam tiles by clicking on the CDMP logo. It is recommended to take the practice exam even before specialist exams because the practice questions are helpful.

Figure 4 Set up your password

Figure 5 Go to the Login page and Login

Figure 6 Try the practice exam

Online Quiz Procedures and Navigation

The following are key points to remember regarding the DMF Practice Exam hyperlink, quiz setup, and Honorlock process. After clicking the hyperlink, the next screen displays the summary, description, and quiz details. The timer starts only after the setup process, which may include sorting out the Honorlock process, is completed. Honorlock must be set up in advance so that it launches for both the practice and main exams. The practice exam did not initially launch Honorlock, while subsequent attempts did, but the reason for this is unclear. Honorlock’s setup process includes taking a picture of your face, agreeing to terms and conditions, providing a photo ID and room scan, and granting permission to record your screen. The quiz navigation now displays the pages and the questions answered, but it does not provide a way to flag uncertain or guessed answers. It is recommended to revise and guess when uncertain but to continue moving forward during the quiz.

Figure 7 Accessing the practice exam (quiz)

Figure 8 Starting the practice exam (quiz)

Figure 9 The practice exam (quiz)

D2L Evaluation and Exam Procedures

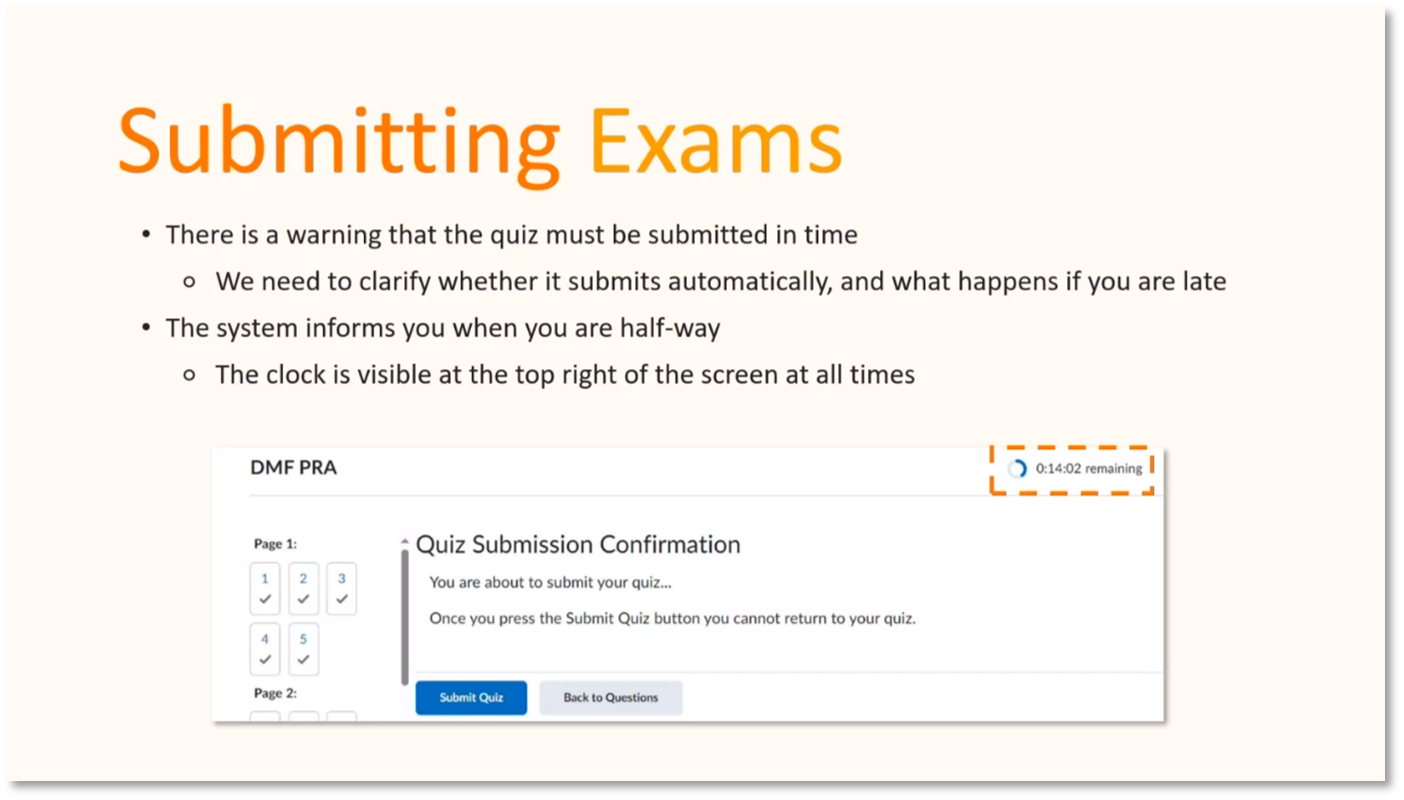

The D2L platform requires identification and is currently being evaluated, with plans to increase functionality in October. Navigation issues exist, such as the inability to flag questions for review. Quizzes will refer to the new version of the DMBoK V2 Revised Edition in October. Submitting exams on the platform is uncertain as there may be an auto-submission feature and potential penalties for late submissions. One user is seeking clarification on whether their April exam will be taken on the new platform and if there will be penalties for late submissions. Additionally, there is an inquiry about the fundamentals exams on Canvas and if this will be the first exam being taken.

Figure 10 Submitting the exam

Exam Feedback and Technical Issues

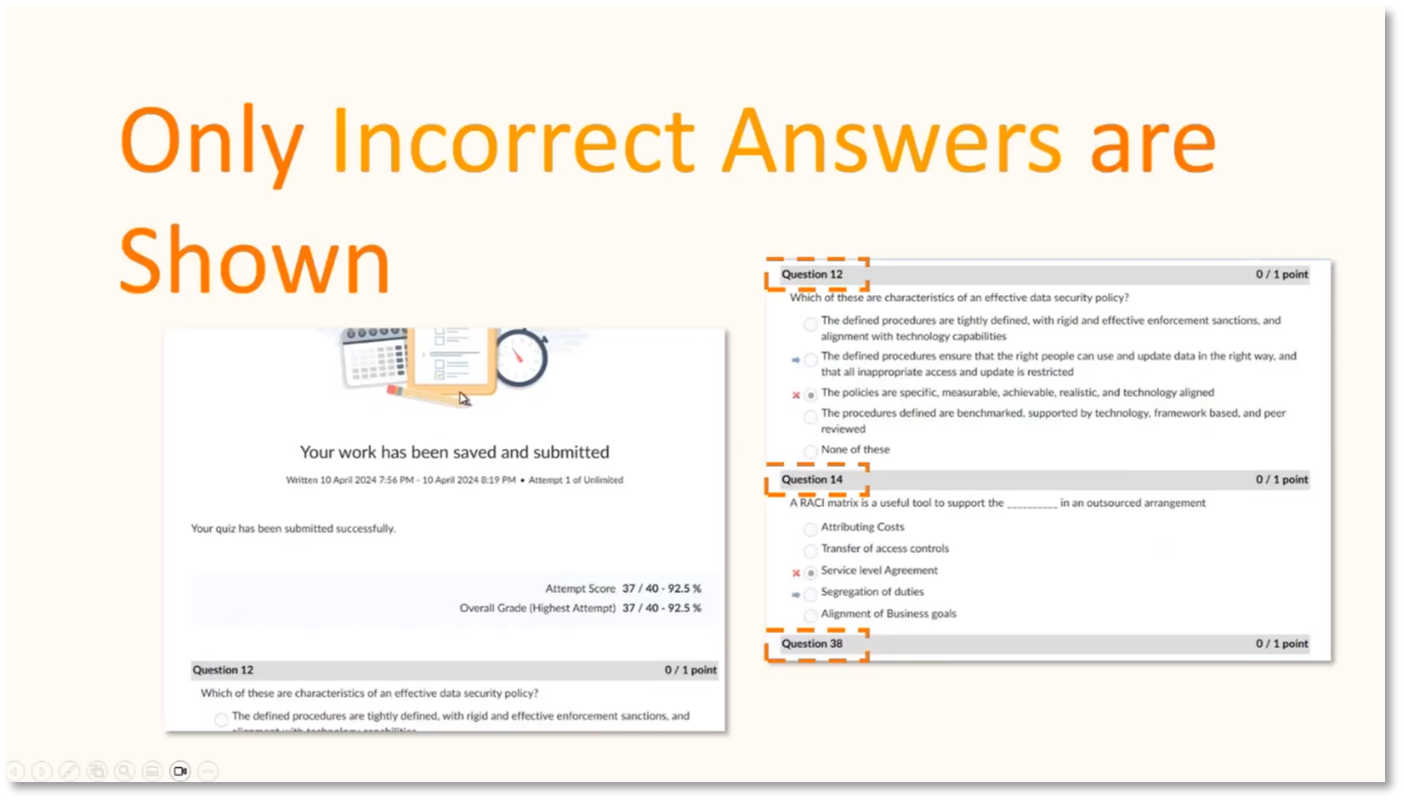

The practice exam on Canvas now displays only the incorrectly answered questions once it is completed, without revealing the correct answers, which makes the feedback easier to navigate. To launch the Honorlock platform, clear cookies and cache and use Chrome. Closing all other browsers and programs may help resolve technical issues. Additionally, the new format of the practice exam has replaced the previous one.

Figure 11 Only the incorrect answers are shown once completed

Figure 12 Make sure you test Honorlock

Data Management Fundamentals Practice Exam Feedback

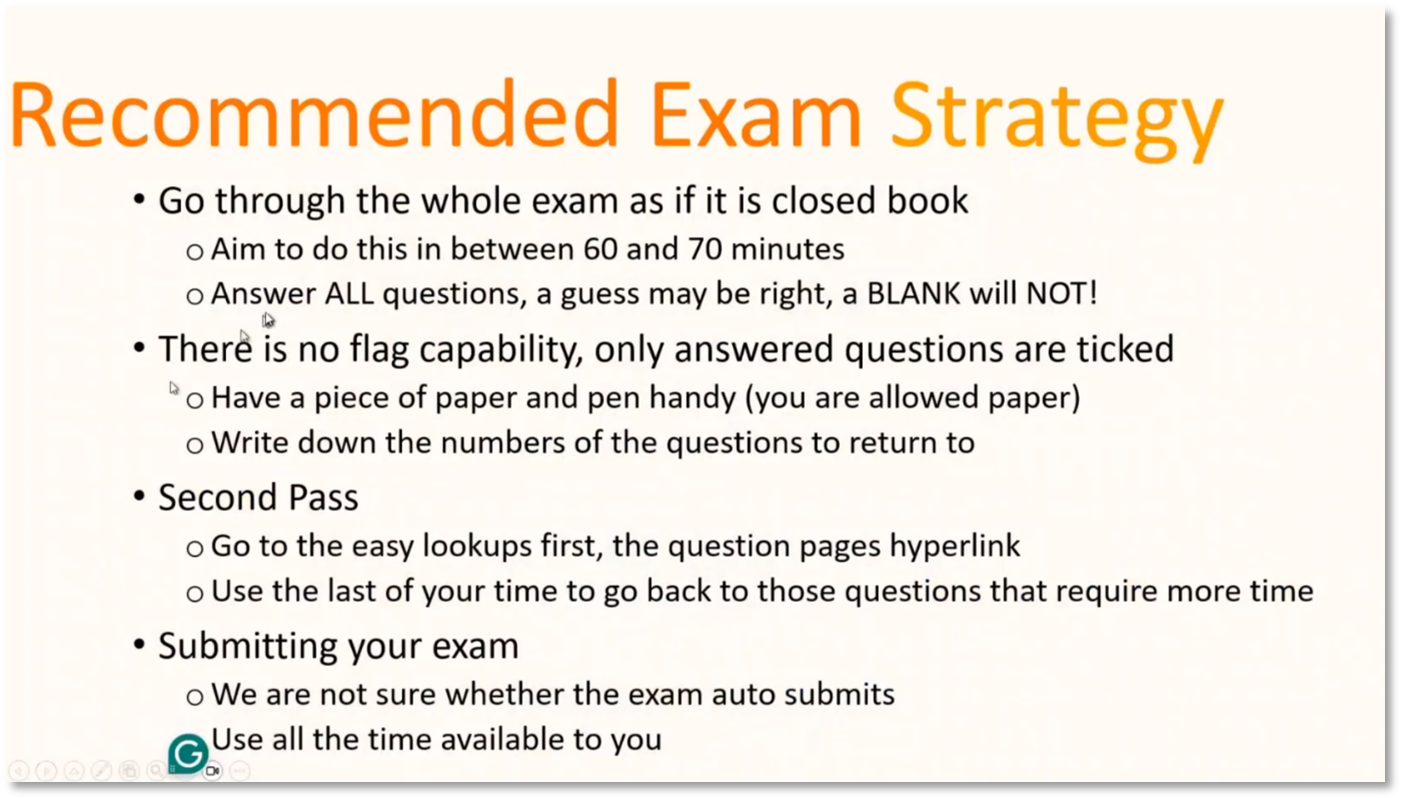

The practice exam timer now pauses if any issues are detected by the CDMP Supportive tool. Additionally, the hyperlink at the bottom of the screen has been moved to the same categories as all the other links. There is a platform update and questions on the new D2L platform. Exam techniques for answering Master Level questions and timing are also covered. ESL students get an extra 20 minutes to complete the exam, while others get 100 minutes for 100 questions. A timer is required for the exam, and phones are not allowed during the exam. Finally, there are suggestions for marking and moving on during the exam.

Figure 13 Veronica's Exam Technique

Figure 14 Recommended Exam Strategy

Test Taking Strategy

When taking a test, there are several tips to remember to perform well. Using a timer is crucial to manage time effectively. When unsure about a question, flag it and guess before moving on. Read questions carefully, particularly negative questions containing words like "not" or "false", and eliminate obviously incorrect options. Be cautious of absolute words such as "always" and "never". Initially, approach the exam as if it were a closed book and answer all questions in the first pass. Go back to the flagged questions after completing all the questions: use easy lookups first, such as metadata and technical terms, then spend more time on challenging questions in the second pass.
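As a rough illustration of the two-pass approach, the sketch below splits the exam time into a first pass and a review pass. The 100 minutes for 100 questions and the extra 20 minutes for ESL candidates come from the webinar; the 70% first-pass share and the function name `time_budget` are assumptions chosen only for illustration.

```python
def time_budget(questions: int, minutes: int, first_pass_percent: int = 70) -> dict:
    """Split total exam time into a first pass and a review pass.

    first_pass_percent is an assumed split, not an official recommendation.
    """
    total_seconds = minutes * 60
    first_pass_seconds = total_seconds * first_pass_percent // 100
    return {
        # Whole seconds available per question during the first pass.
        "first_pass_seconds_per_question": first_pass_seconds // questions,
        # Whole minutes left for returning to flagged questions.
        "review_minutes": (total_seconds - first_pass_seconds) // 60,
    }


standard = time_budget(questions=100, minutes=100)
esl = time_budget(questions=100, minutes=120)  # 100 minutes plus the extra 20

print(standard)
print(esl)
```

Under these assumptions, a standard candidate has well under a minute per question in the first pass, which is why guessing and moving on matters more than resolving each doubt immediately.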

Exam Process Notes

The Data Management Body of Knowledge (DMBoK) serves as the primary resource for 60% of questions in data governance, data quality, and data modelling exams. The remaining 40% of questions may cover cross-knowledge areas and DMBoK-related concepts not directly found in it. To prepare for the exam, it is recommended to use a practice platform with questions on these topics. There is a discussion of the benefits of taking the exam on the old platform versus the new platform, with companies being advised to register and transition to the new platform. It is confirmed that the exam must be submitted on the new platform.

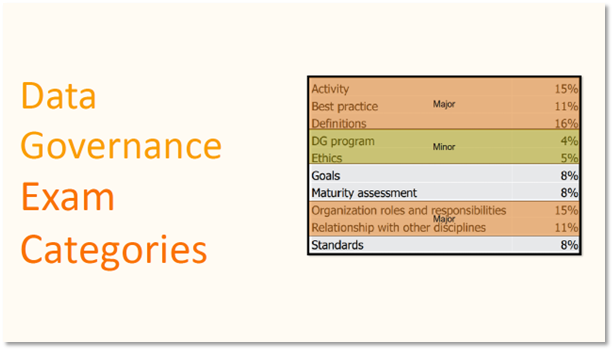

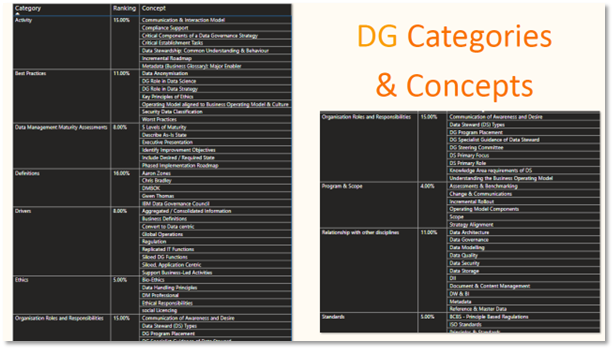

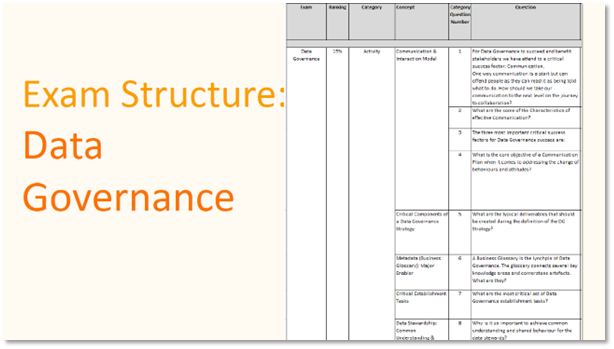

CDMP Exam Preparation

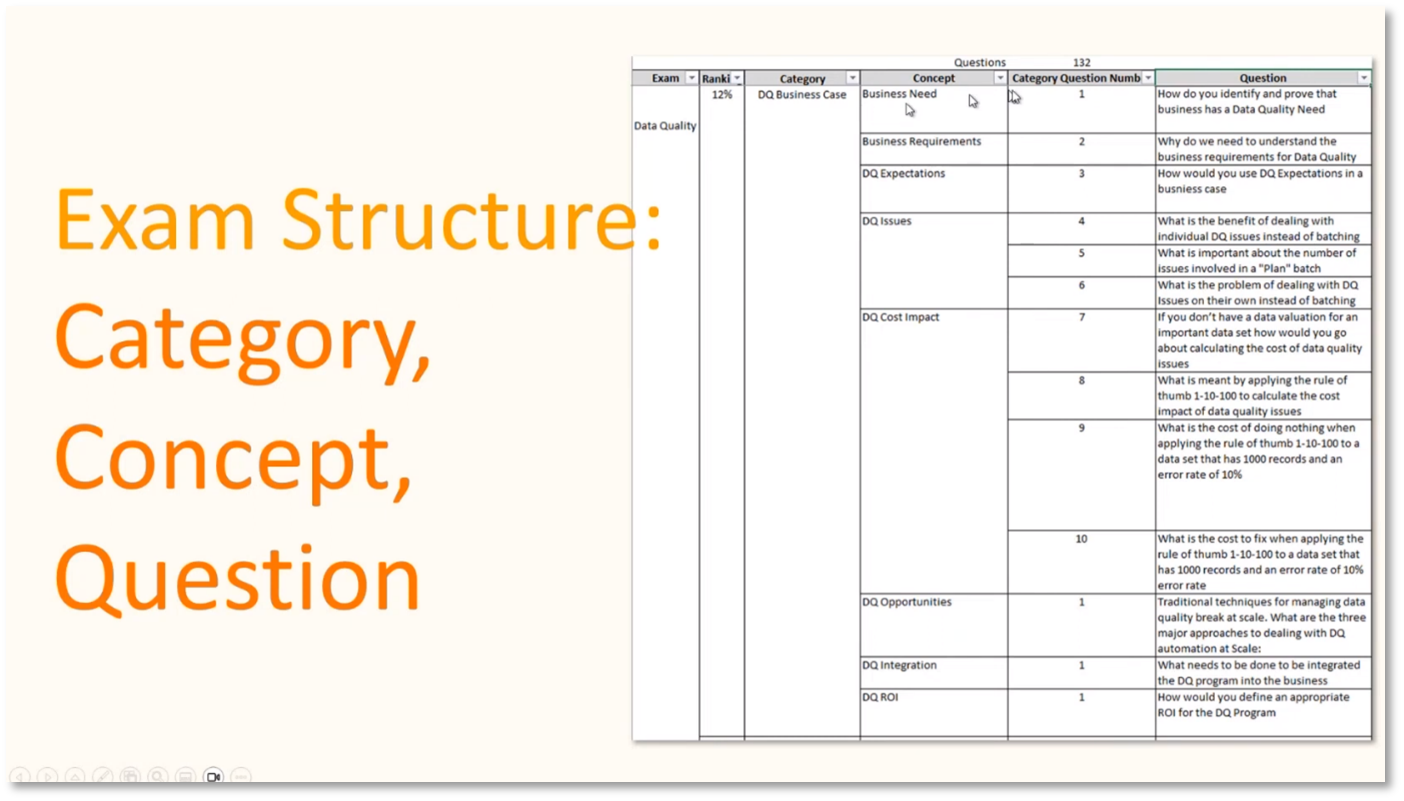

Due to recent updates to the content and questions, it is advised to complete the CDMP exam before October. The specialist exam is organised into categories, and questions are grouped by concept with distractors and correct answers. It is recommended not to use the revised book for the exam before October. Details about changes to recertification requirements have not yet been announced.

Figure 15 Specialist exams

Exam Preparation Notes

The DMBoK is a valuable resource for those taking the CDMP exam. While the revised edition is the only one available for purchase, version 2 can be obtained for free by applying before October. Candidates taking the foundation exam before October should prepare using the old DMBoK version 2, because the quizzes will only refer to the revised edition from October. The revised edition can be identified by its large yellow sticker. The exam technique for the data quality and specialist exams is discussed, and a sample question is provided. The exam includes master-level questions, and searching for specific terms may not be allowed. It's emphasised that a deeper understanding of the data quality dimensions is required for the exam.

Figure 16 Look out for the yellow badge on the DMBOK Revised Edition

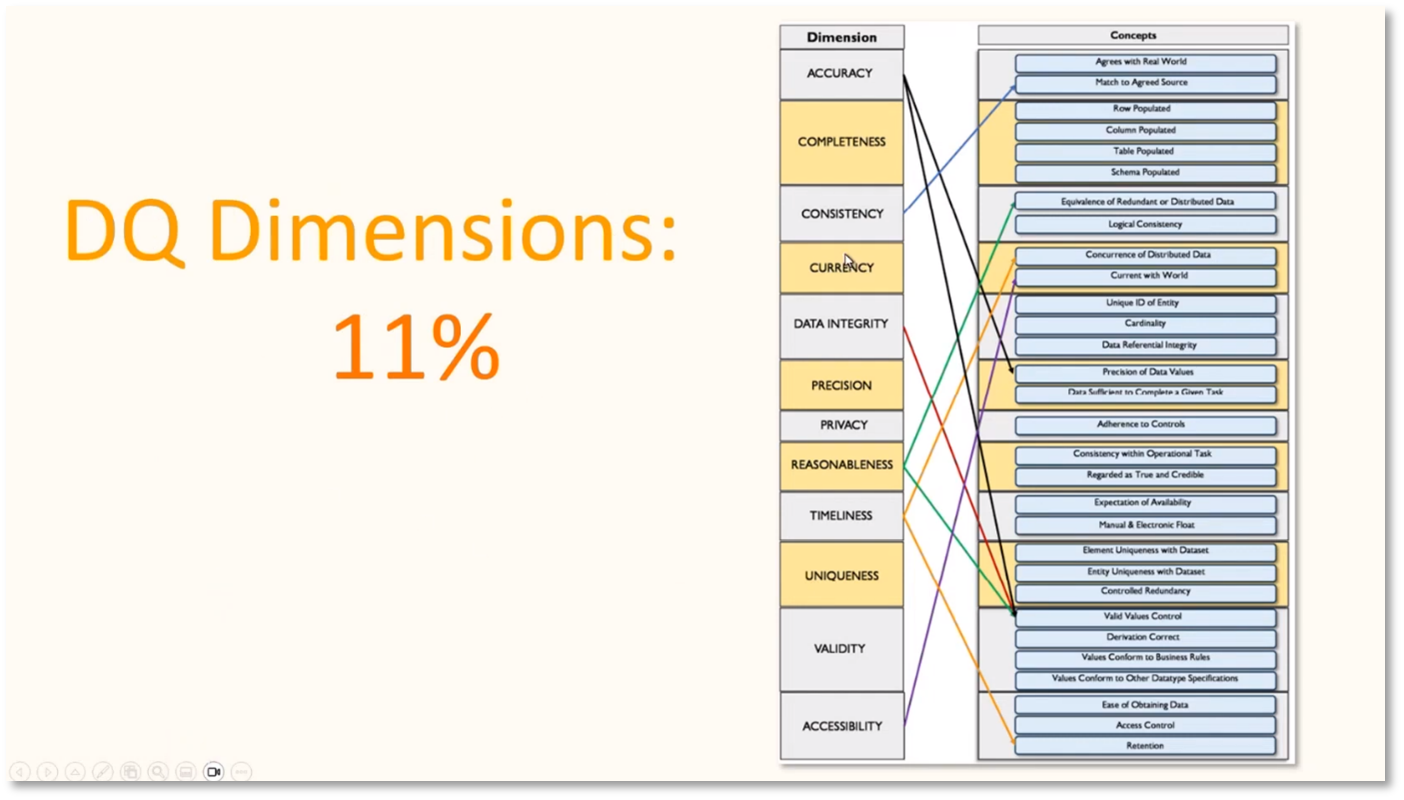

Data Quality Dimensions

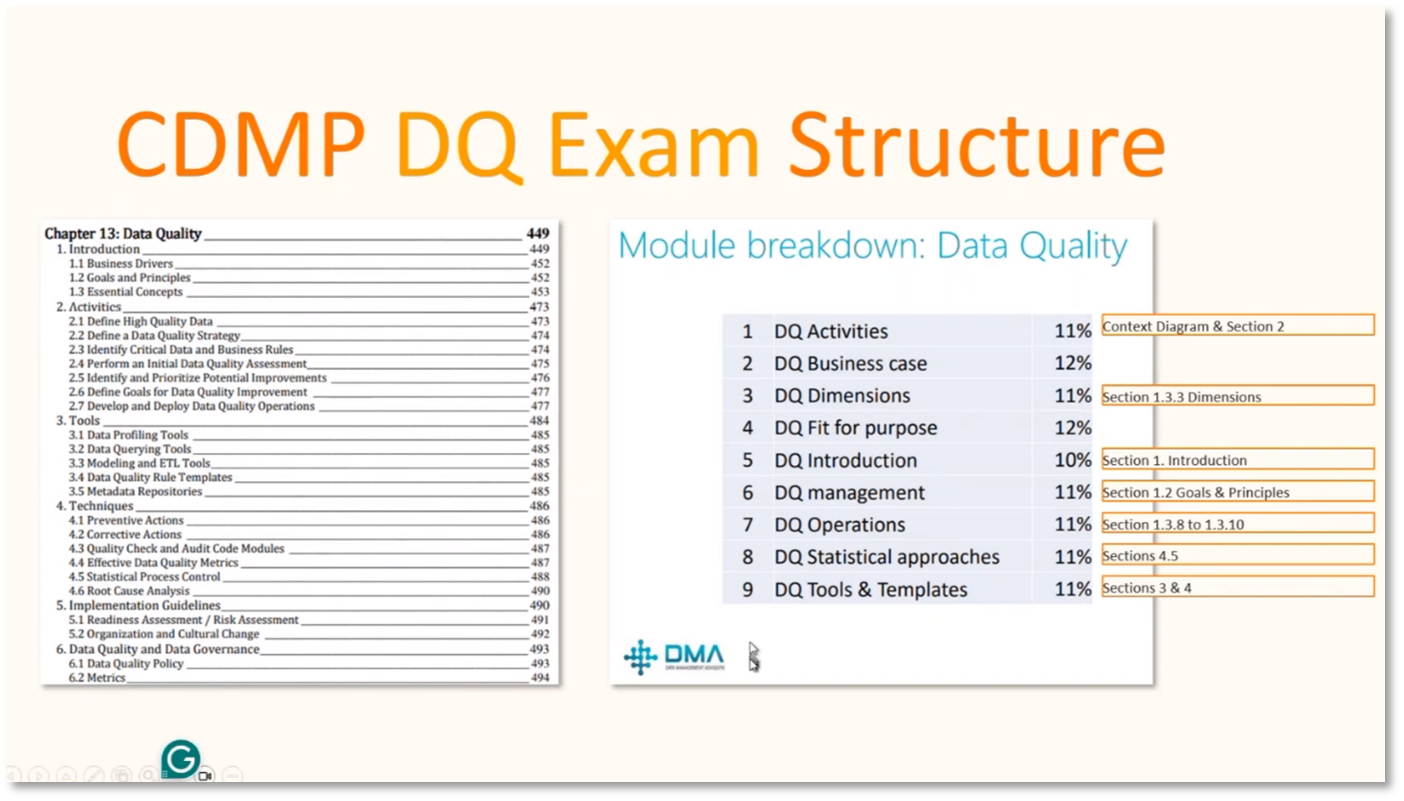

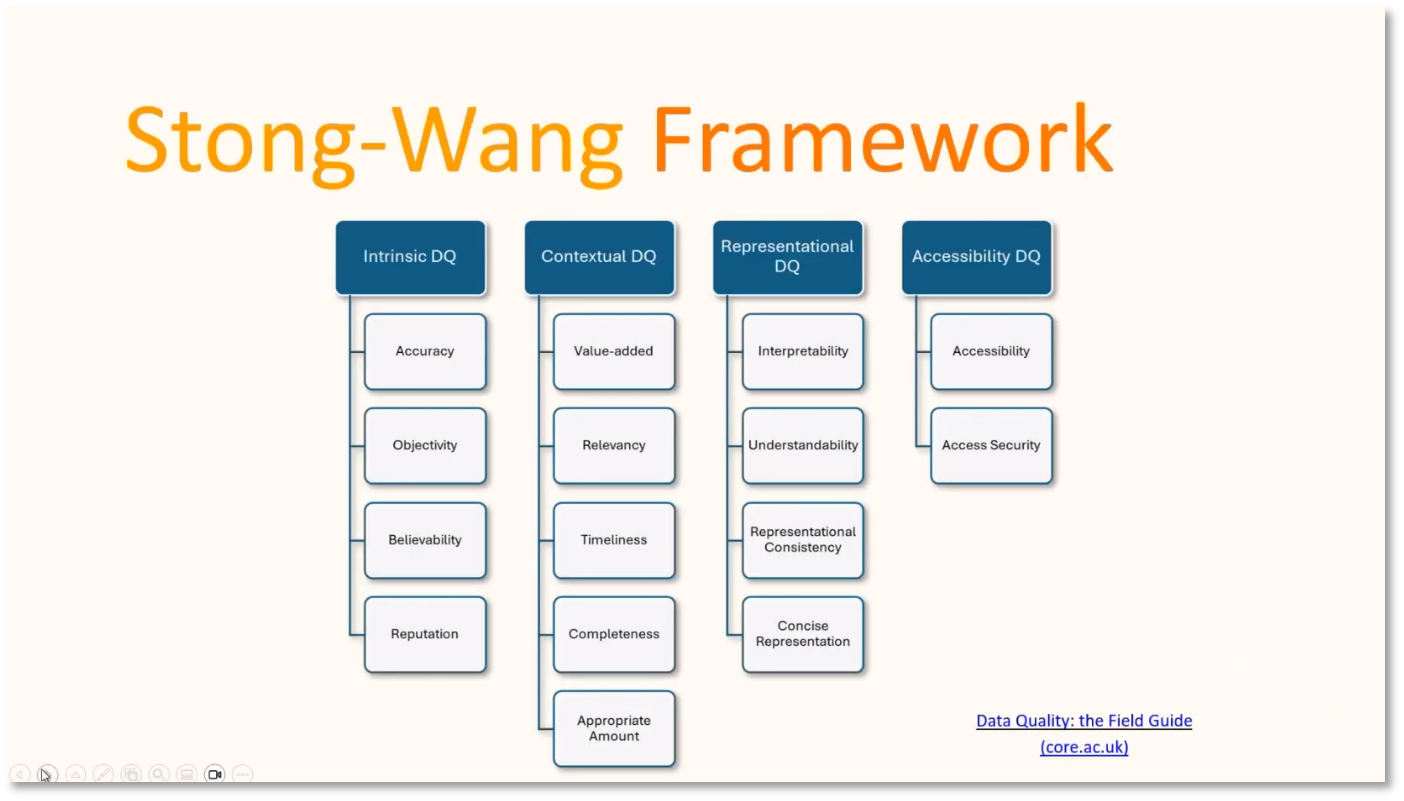

Howard discusses the categories of the data quality exam, which relate to data quality activities, the business case, and dimensions. He notes that the context diagram and section two answer several questions about these categories. One of the categories, DQ business case, refers to business drivers and challenges. The concepts listed under this category include business case, business need, business requirements, and DQ exceptions. The focus then moves to data quality dimensions and their concepts, such as validity, valid values, control, derivation, correct values, conformity to business rules, and data types. However, measuring quality at the dimension level, such as completeness or the population of rows and columns, can be difficult. The revised edition has removed the dimension slide, but exploring data quality at both the dimension and concept levels can still provide many benefits. Building a PowerBI report to show data governance drivers, aggregates, and sample questions also emphasises the relationship with other disciplines, such as architecture, governance, and modelling.

Figure 17 CDMP Data Quality Exam Structure

Figure 18 Exam Structure: Category, Concept and Question

Figure 19 Data Quality Dimensions

Figure 20 Strong-Wang Framework

Data Quality Dimensions and Beach Inspection

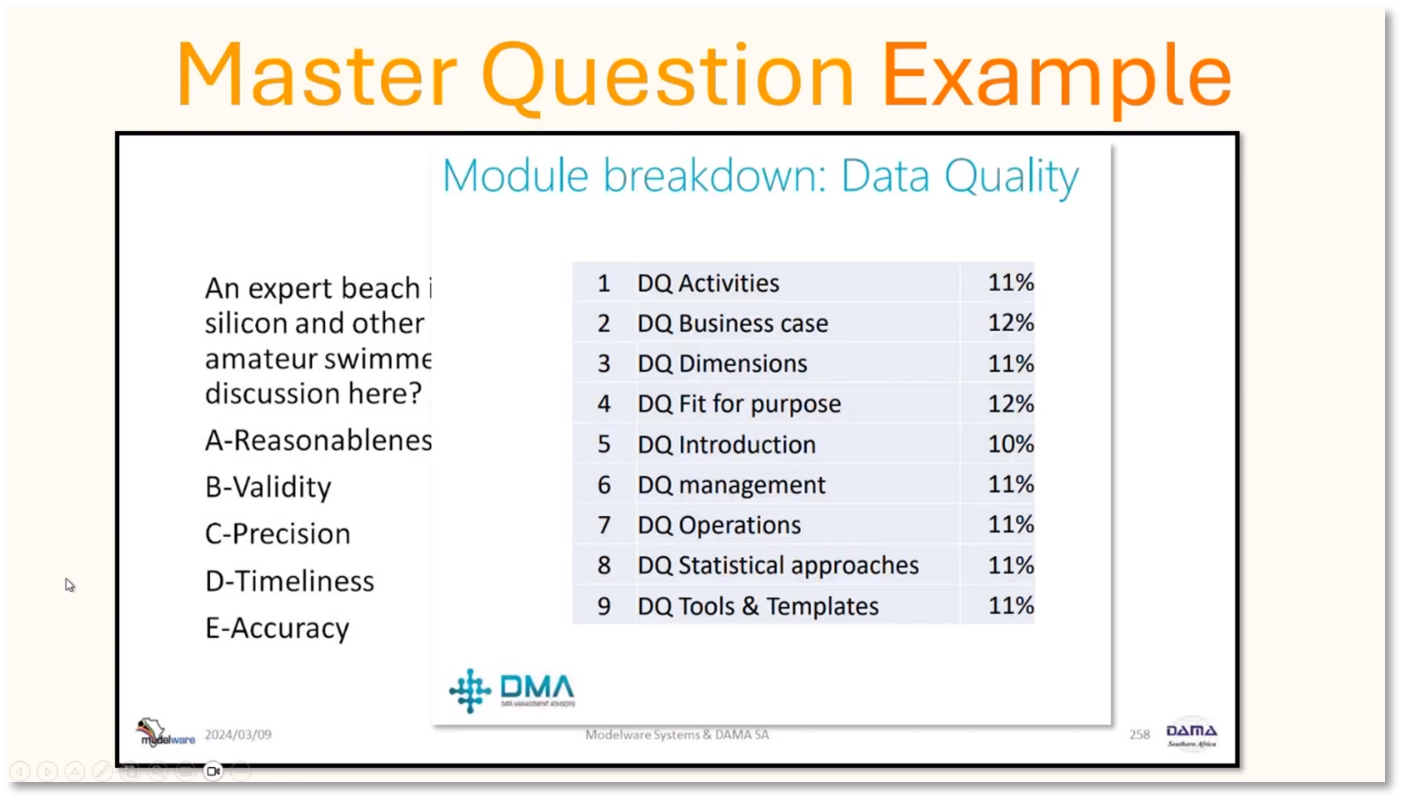

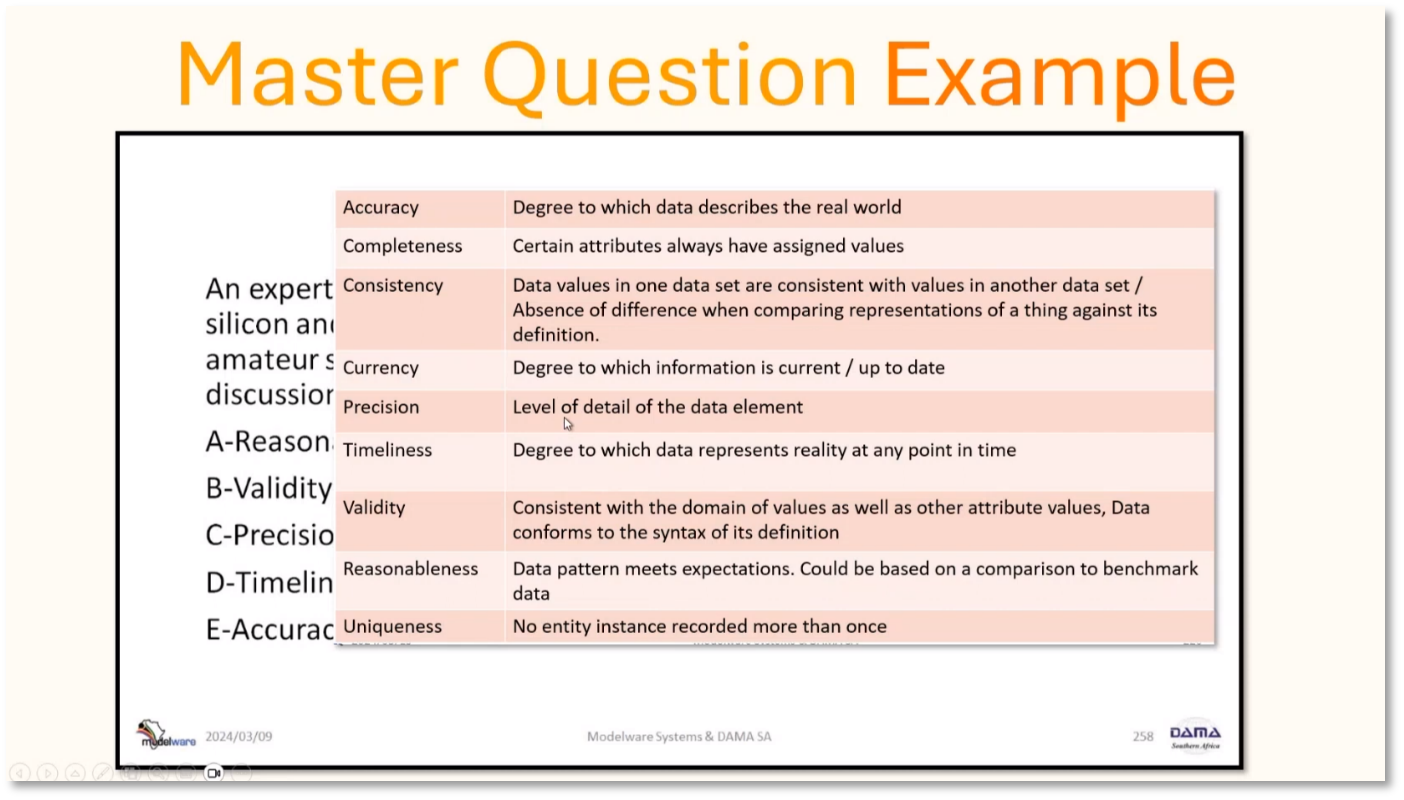

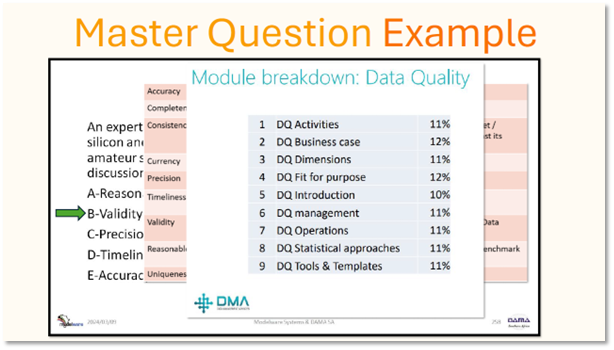

Data quality dimensions are critical aspects to consider when evaluating data quality. These dimensions include accuracy, precision, completeness, consistency, currency, timeliness, validity, reasonableness, and uniqueness. In different scenarios, it is important to consider the definitions of these dimensions and find one or two words that align with the question to determine the correct dimension being discussed. For instance, in the context of a beach inspector discussing different types of silicon and mineral substances, the data quality dimension discussed is completeness, which pertains to the completeness of attributes and values assigned to certain elements. It is emphasised that one should provide reasoning behind one's answer to ensure a deeper understanding and application of the data quality dimensions in different scenarios.
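Two of the dimensions listed above, completeness and uniqueness, lend themselves to simple measurement. The sketch below is only an illustration: the sample records, the column names, and the functions `completeness` and `uniqueness` are hypothetical and do not come from the DMBoK or the webinar.

```python
# Hypothetical sample records; None marks a missing value.
records = [
    {"id": 1, "email": "a@example.com", "country": "ZA"},
    {"id": 2, "email": None, "country": "ZA"},
    {"id": 3, "email": "c@example.com", "country": None},
    {"id": 3, "email": "c@example.com", "country": "SA"},
]


def completeness(rows, column):
    """Share of rows in which the column is populated."""
    populated = sum(1 for row in rows if row.get(column) not in (None, ""))
    return populated / len(rows)


def uniqueness(rows, column):
    """Share of populated values in the column that are distinct."""
    values = [row[column] for row in rows if row.get(column) is not None]
    return len(set(values)) / len(values)


print("email completeness:", completeness(records, "email"))
print("id uniqueness:", uniqueness(records, "id"))
```

In an exam scenario, the same habit applies in words rather than code: find the one or two terms in the question (missing, duplicate, out of range) that point to a single dimension.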

Figure 21 Data Quality Master Question Example

Figure 22 Data Quality Master Question Example Explanation

Figure 23 Data Quality Master Question Example Explanation continued

Figure 24 Data Quality Master Question Example Answer

Figure 25 Validity vs Precision

Data Element Validation

Validity and precision are two important concepts to consider when working with data. Validity refers to whether the values of a data element fall within its defined domain of permissible values, such as a range, a list, or a format. Precision, on the other hand, refers to the level of detail or granularity of a data element, such as the number of decimal places or significant figures used to represent it. While validity is concerned with whether a value is allowed at all, precision is concerned with the level of detail required for a particular analysis or application. Understanding the distinction between these two concepts can help ensure that data is used effectively and appropriately and can lead to better decision-making and problem-solving. Additional references are available for exploring the distinction between precision and validity further.
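The difference can be made concrete with a small sketch. It assumes a hypothetical price element whose permissible values lie between 0 and 10000 (validity) and which must be recorded to exactly two decimal places (precision); the rule, the constants, and the function names are illustrative assumptions, not taken from the webinar.

```python
from decimal import Decimal

# Hypothetical rules for a "price" data element.
VALID_RANGE = (Decimal("0"), Decimal("10000"))  # validity: permissible values
REQUIRED_DECIMALS = 2                            # precision: level of detail


def is_valid(value: Decimal) -> bool:
    """Validity: does the value fall inside the permitted domain?"""
    low, high = VALID_RANGE
    return low <= value <= high


def decimal_places(value: Decimal) -> int:
    """Number of digits recorded after the decimal point (finite values only)."""
    exponent = value.as_tuple().exponent
    return -exponent if exponent < 0 else 0


def has_required_precision(value: Decimal) -> bool:
    """Precision: is the value recorded at the required level of detail?"""
    return decimal_places(value) == REQUIRED_DECIMALS


for raw in ["19.99", "19.9", "-5.00", "12000.5"]:
    value = Decimal(raw)
    print(raw, "valid:", is_valid(value), "precise:", has_required_precision(value))
```

The two checks are independent: "19.9" is a permitted value recorded with too little detail, while "-5.00" has the right level of detail but is not a permitted value.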

Figure 26 Metadata Sample Question

Data Integration and Interoperability

In the context of data integration and interoperability, validity and precision are important concepts to consider. Validity refers to the permissible data values, while precision is not related to specialisation in data modelling. Mapping requirements and rules for data integration is crucial for addressing the knowledge area of DII, and the concept of ETL plays a significant role in this process. It is essential to focus on the correct knowledge area and concept, such as ETL, and scratch out options that do not align with it, such as backups and analysis, which are part of different areas.

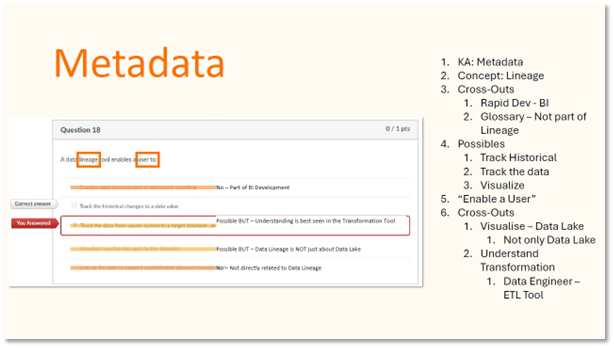

Data Lineage and Metadata

Howard explores the process of extracting, transforming, and loading data and emphasises the importance of accurately understanding and analysing each question before responding. The focus then shifts to the concept of data lineage and its relation to metadata, highlighting the role of data lineage tools in giving users knowledge about metadata. The key concept being discussed is the knowledge area of metadata and its role in data lineage. Finally, he explains the process of eliminating distractors and refining the understanding of the data lineage and metadata concept.

Figure 27 Metadata Sample Question 2

Data Lineage and Business Glossary

Data lineage is a process that tracks the movement of data from external systems to the data lake, staging, data warehouse, data mart, and reports. It enables users to view and understand the movement and changes in the data, thereby helping with compliance, data quality, and data governance. While it shows how data gets into the data lake and checks historical changes and data from source to target with transformations, it is not about understanding data transformations, which is better suited for transformation or ETL tools. Data lineage and business glossary are related, allowing navigation from glossary to dictionary to where the data set has been used. The concept is lineage in metadata, and the answer lies in historical changes to the data value.
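The source-to-target path described above can be pictured as a small graph. The following sketch is a simplified illustration, not how any particular lineage tool works: the data set names and the `trace_lineage` function are hypothetical, and only the order of stages (external systems, data lake, staging, data warehouse, data mart, report) comes from the text.

```python
# Each key is a data set; the value lists the data sets it is loaded from.
# All names are hypothetical.
UPSTREAM = {
    "sales_report": ["sales_mart"],
    "sales_mart": ["data_warehouse"],
    "data_warehouse": ["staging"],
    "staging": ["data_lake"],
    "data_lake": ["crm_system", "billing_system"],
    "crm_system": [],
    "billing_system": [],
}


def trace_lineage(dataset: str, upstream: dict[str, list[str]]) -> list[str]:
    """Return every upstream data set of `dataset`, nearest first (breadth-first)."""
    seen: list[str] = []
    queue = list(upstream.get(dataset, []))
    while queue:
        current = queue.pop(0)
        if current in seen:
            continue  # already visited via another path
        seen.append(current)
        queue.extend(upstream.get(current, []))
    return seen


print(trace_lineage("sales_report", UPSTREAM))
```

Walking the same graph in the other direction would answer the impact question: which marts and reports depend on a given source.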

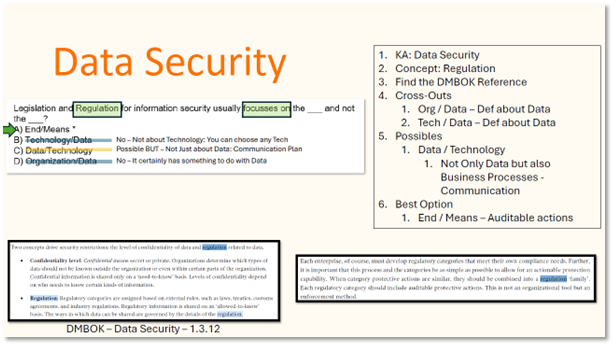

Understanding Data Quality and Information Security Regulations

Howard covers key aspects of data quality, visualisation, legislation, regulation, and security. Provenance, which concerns the entity that sends the data, is crucial in ensuring data quality and reliability. While visualising data lakes can be helpful, it does not provide a full understanding of data transformation and historical changes. Legislation and regulation for information security are focused on the means of security rather than the data itself. Therefore, the need to be aware of both data security and the regulatory framework governing information security is emphasised.

Figure 28 Data Security Sample Question

Regulation and Data Modeling

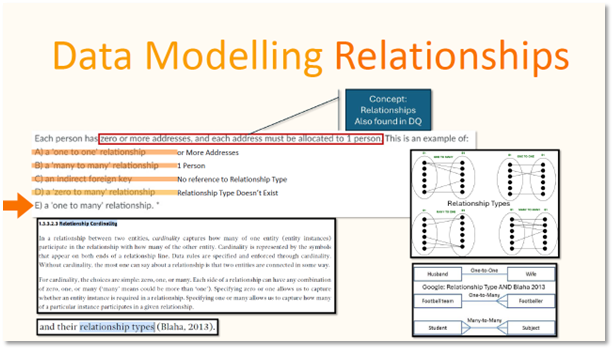

Regulation and data privacy law are critical aspects of data management. While technology plays a crucial role in this process, it is not the only factor to consider. Organisational structure and sound data management practices are equally important. Data privacy law mandates that organisations have a communication plan to deal with breaches. Selecting the best option in data management involves finding the reference, eliminating unsuitable choices, and choosing the most appropriate one. In data modelling, understanding relationships and cardinality is crucial. Cardinality refers to the options of zero, one, or many in relationships. The types of relationships in data modelling include one-to-one, one-to-many, many-to-one, and many-to-many.

Figure 29 Data Modelling Sample Question and Relationships

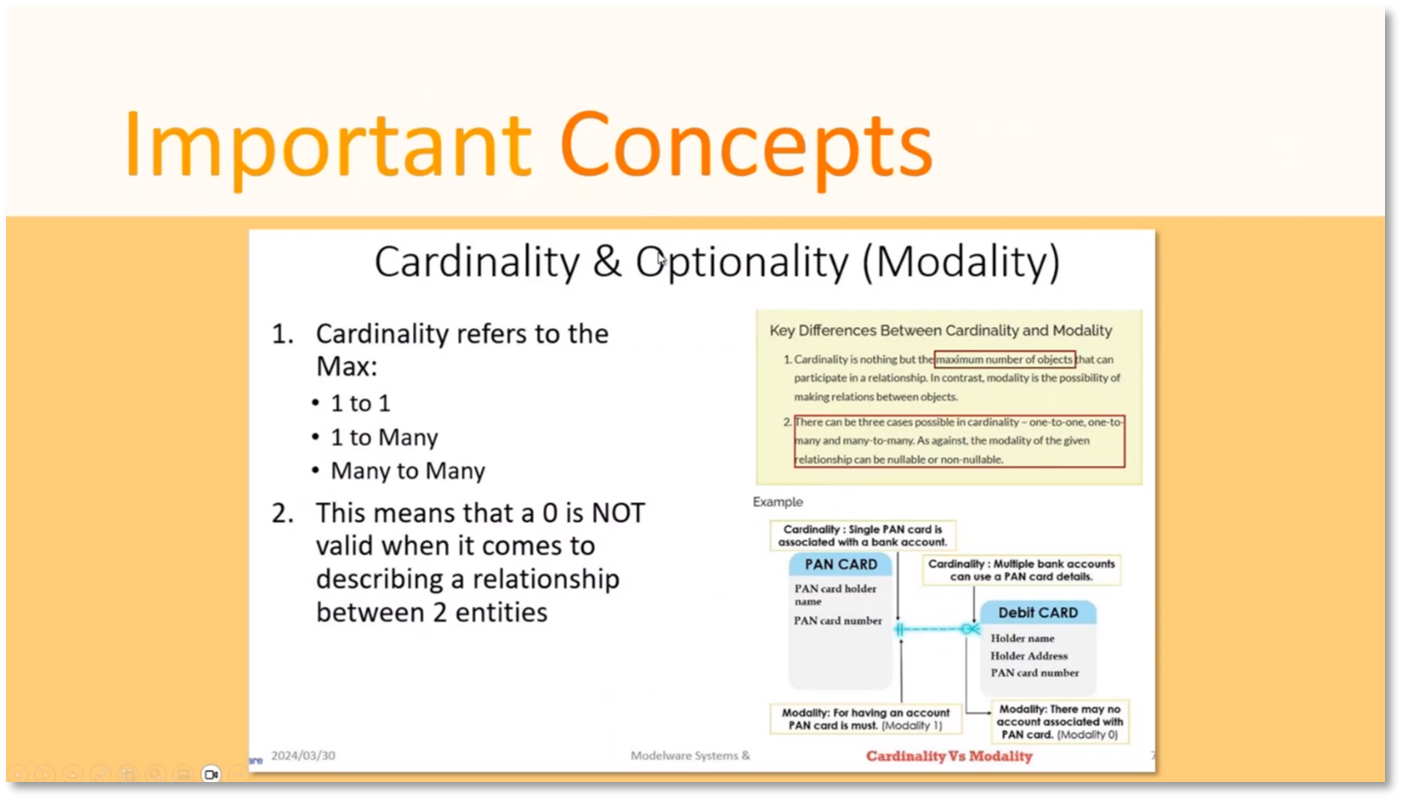

Cardinality and Optionality in Relationship Types

Relationship types play a crucial role in data modelling and can be classified as one-to-one, one-to-many, or many-to-many. It's important to eliminate options that don't fit the given criteria, such as zero-to-many. Understanding categories and the concepts within them is key for exam preparation. Cardinality refers to the maximum number of relationships on each side, while optionality refers to the minimum. The focus is on cardinality when considering the relationship between husband and wife.
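One way to keep the two ideas apart is to record the minimum (optionality) and maximum (cardinality) separately for each side of a relationship. The sketch below is a minimal illustration; the class names, the Customer and Order example, and the minimum values chosen are assumptions, not part of the sample question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RelationshipEnd:
    entity: str
    minimum: int   # optionality: 0 = optional, 1 = mandatory
    maximum: str   # cardinality: "one" or "many"


@dataclass(frozen=True)
class Relationship:
    left: RelationshipEnd
    right: RelationshipEnd

    def relationship_type(self) -> str:
        """The relationship type is named from the maximums only."""
        return f"{self.left.maximum}-to-{self.right.maximum}"


# Minimum values here are illustrative assumptions.
marriage = Relationship(
    RelationshipEnd("Husband", minimum=1, maximum="one"),
    RelationshipEnd("Wife", minimum=1, maximum="one"),
)
orders = Relationship(
    RelationshipEnd("Customer", minimum=1, maximum="one"),
    RelationshipEnd("Order", minimum=0, maximum="many"),
)

print(marriage.relationship_type())
print(orders.relationship_type())
```

Because the type name is built only from the maximums, changing a minimum from 1 to 0 changes the optionality but never the relationship type.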

Figure 30 Important Concepts

Key Points for Data Science and Ethics Exam Prep

To succeed in the exam, it is crucial to have a strong grasp of the categories and concepts within them. Howard notes the importance of understanding the best practices and data governance roles, key principles of ethics, and identifying important questions to choose the right answers. Practising with sample questions can help to improve techniques and speed. It's important to have a focused and distraction-free environment during the exam, and taking screenshots is not allowed. Those who struggle with Master Level questions are recommended to use a specific technique.

Figure 31 Data Governance Exam Categories

Figure 32 Data Governance Categories and Concepts

Figure 33 Exam Structure: Data Governance

Data Lineage Tools

Data lineage tools are instrumental in tracking the movement and changes of data values over time, aiding high-level executives in verifying the accuracy of transformations per business rules. They differ from audit trails, which record temporal changes in values. While data lineage tools elaborate on the source and target data, the transformation process is not involved. These tools are particularly crucial in banking and risk data, preventing unauthorised manipulation of data values.

Data Lineage and Dependency in PowerBI

PowerBI provides several features to help users understand the flow and dependency of data sets, such as data lineage and dependency diagrams. However, these features only show where the data comes from and its relationships with other data sets, without showing the actual data values or their transformations. While the online service in PowerBI allows users to view data set dependencies and flow, it doesn't show the transformation of values. Query dependencies in PowerBI are similar to the online dependency view, showing the flow of where data comes from. Still, a more sophisticated tool is required to see the transformation of data values. Therefore, to better analyse data, users should focus on eliminating distractors and practising techniques that allow them to understand and interpret data more effectively.

Importance of Using a Dictionary in Data Management

Reading the data management dictionary helps candidates understand the terminology that will come up in the exam. Access to an Excel version of the dictionary and to Quizlet can enhance learning, and the inclusion of data science terminology adds further depth.

If you want to receive the recording, kindly contact Debbie (social@modelwaresystems.com)
