Riskiest Risks - Data Protection for DM Professionals

Executive Summary

‘Riskiest Risks - Data Protection for DM Professionals’ outlines key considerations for effective data management, governance, and privacy in modern organisations. Caroline Mouton and Howard Diesel cover data understanding, security and risk assessment, data architecture, and data compliance. The webinar explores the importance of data classification and emphasises cataloguing and lineage, along with the utilisation of metadata to analyse data assets. Additionally, Caroline and Howard highlight the significance of data privacy and compliance in automated decision-making and the need for collaboration and accountability in data management. They present these strategies as essential for ensuring data integrity, security, and compliance while supporting effective decision-making.

Webinar Details:

Title: Riskiest Risks - Data Protection for DM Professionals

Date: 13 January 2021

Presenters: Caroline Mouton & Howard Diesel

Meetup Group: Data Privacy & Protection with Caroline Mouton

Write-up Author: Howard Diesel

Contents

Data Understanding and Privacy Risk Assessment

Data Security and Risk Assessment

Risky Processing for Type Three

Risks and Accountability of Cloud-Based Systems

Data Privacy Program and Data Classification

Data Catalogue and Building a Data Model

Data Architecture and Data Governance

Overview of Data Governance and the Importance of a Data Catalogue

Building a Data Lineage Approach

Data Asset Management and Analysis

Utilising Metadata to Query and Analyse Data Assets

Data Management and Reporting

Data Lake, Data Source, Data Catalogue, and Data Governance

Importance of Data Compliance and Privacy by Design in Automated Decision-Making

Data Understanding and Privacy Risk Assessment

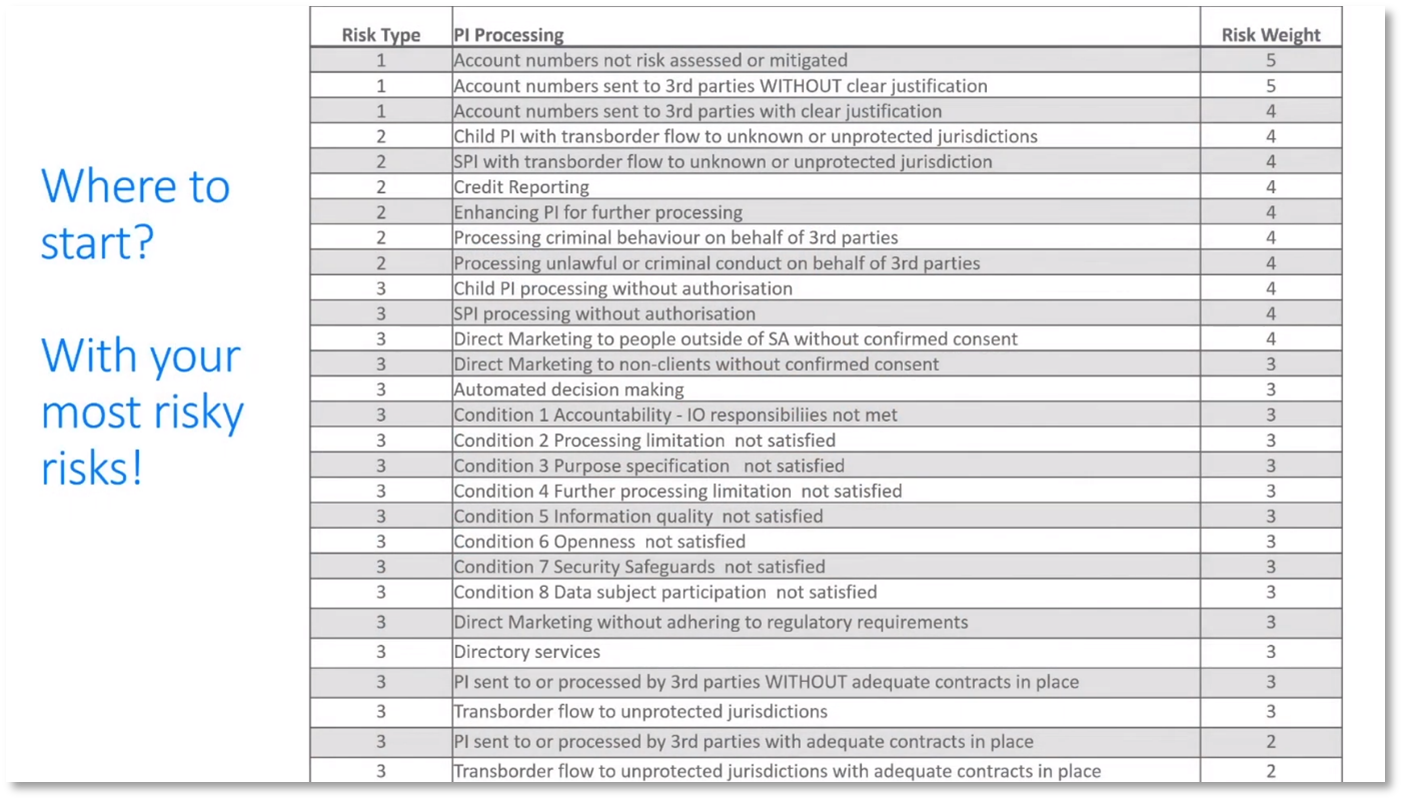

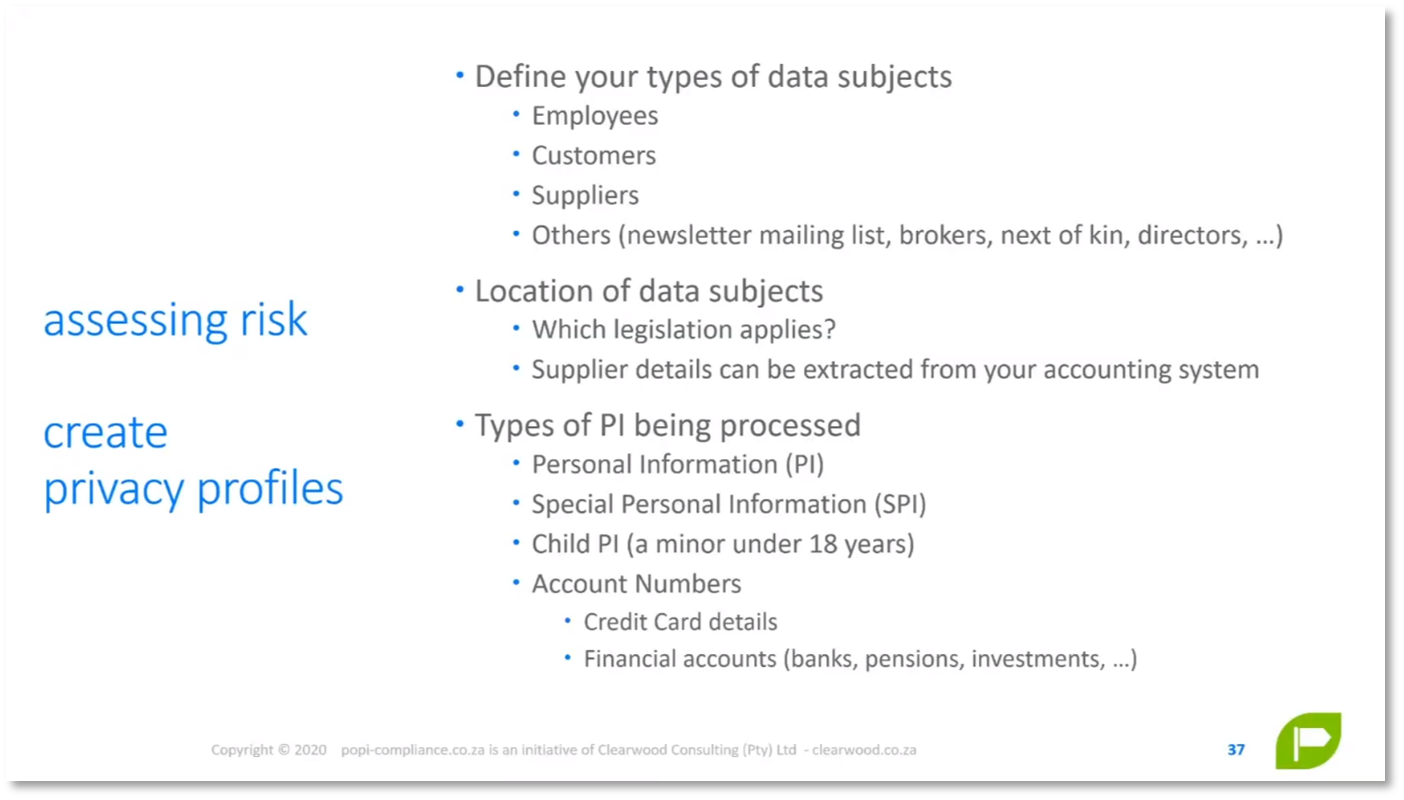

Howard Diesel explains that organisations can use a data catalogue or data dictionary to locate personally identifiable information (PII) in their databases, which helps them understand their data and identify potential privacy risks. Analysing data flows, business processes, and applications shows how the data is processed and where privacy risk may arise. To assess privacy risk, it is important to understand the privacy profile of data subjects and identify the applicable legislation, such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). Third-party suppliers should also be considered data subjects and assessed for risk. Managing that risk requires recording their location in the vendor register within the accounting system.
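As a rough illustration of locating personal information through a catalogue, the sketch below scans catalogue column names for patterns that hint at personal data. The patterns, table names, and column names are all hypothetical, and name matching is only a first pass; it is not the method shown in the webinar.

```python
import re

# Hypothetical name patterns that hint at personal information.
PII_PATTERNS = {
    "email": re.compile(r"e[-_]?mail", re.IGNORECASE),
    "phone": re.compile(r"phone|mobile|cell", re.IGNORECASE),
    "national_id": re.compile(r"id[-_]?number|passport|ssn", re.IGNORECASE),
    "name": re.compile(r"(first|last|sur|full)[-_]?name", re.IGNORECASE),
}


def flag_pii_columns(catalogue):
    """Return (table, column, pii_type) for each column whose name matches a pattern."""
    hits = []
    for entry in catalogue:
        for pii_type, pattern in PII_PATTERNS.items():
            if pattern.search(entry["column"]):
                hits.append((entry["table"], entry["column"], pii_type))
                break  # one label per column is enough for a first pass
    return hits


# Illustrative catalogue extract (assumed structure: one dict per column).
catalogue = [
    {"table": "customer", "column": "email_address"},
    {"table": "customer", "column": "loyalty_tier"},
    {"table": "employee", "column": "id_number"},
    {"table": "vendor", "column": "country"},
]

pii_hits = flag_pii_columns(catalogue)
for table, column, pii_type in pii_hits:
    print(f"{table}.{column}: possible {pii_type}")
```

Columns flagged this way would still need to be confirmed by the data owner, since a column name alone does not prove that the content is personal information.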

Figure 1 Data Understanding and Privacy Risk Assessment. Where to start?

Figure 2 Data Understanding and Privacy Risk Assessment: Assessing risk

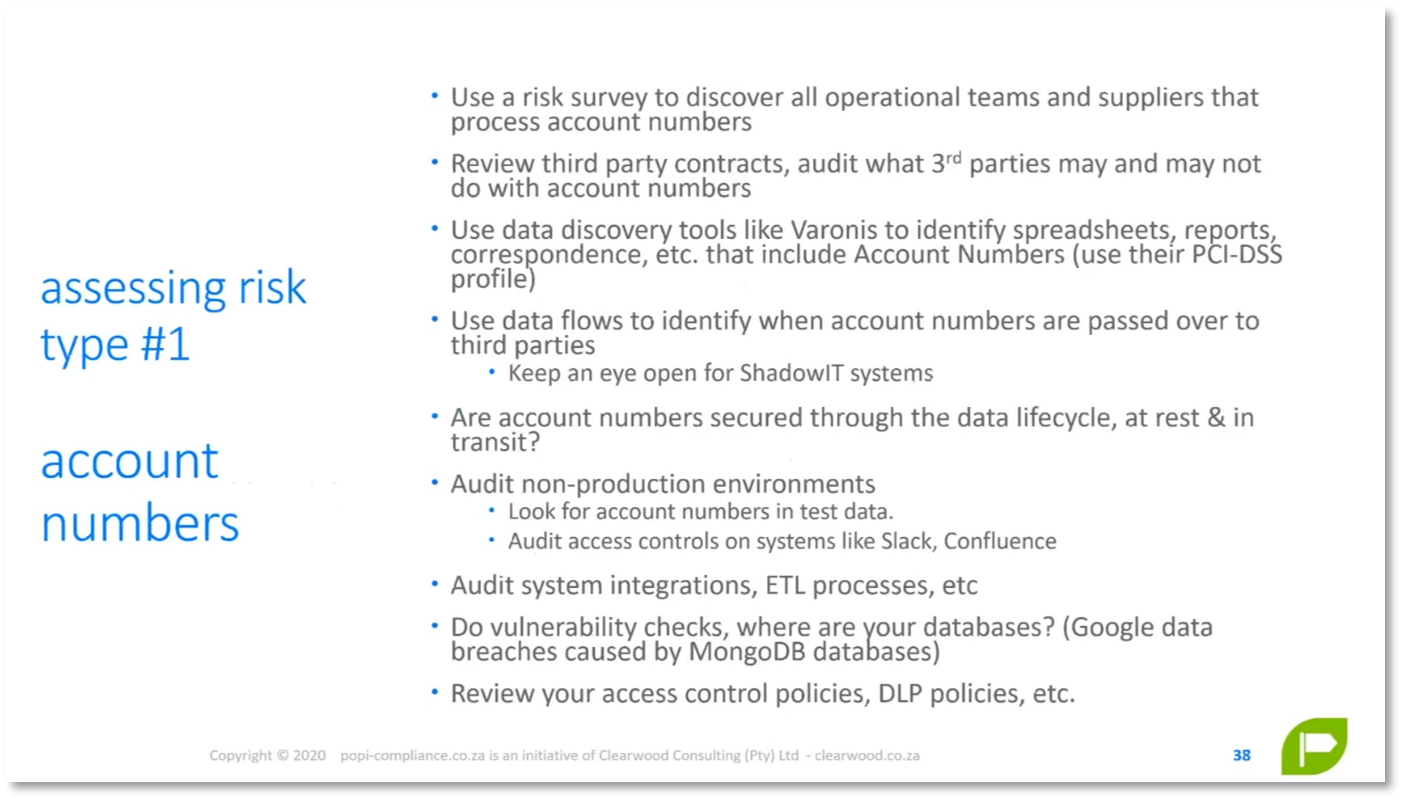

Data Security and Risk Assessment

Caroline Mouton recommends using the Payment Card Industry Data Security Standard (PCI DSS) to protect account numbers, including any number that provides access to funds. A survey can be conducted to identify and classify unauthorised processing, using weighting criteria to prioritise which departments to focus on. IoT systems are mentioned as one place where data lives, but Caroline suggests they need not be the first concern. A Personal Information Impact Assessment (PIIA) can be used to determine the risk to data subjects, while a Privacy Impact Assessment (PIA) should be applied when dealing with special personal information or information about children. Integration methods such as APIs, plugins, extensions, and machine learning should also be understood, because they determine how data is processed.
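One way to picture survey-based prioritisation is a simple weighted score per department. The criteria, weights, and survey answers below are invented for illustration; the webinar does not prescribe specific values.

```python
# Hypothetical criteria and integer weights (higher weight = more important).
WEIGHTS = {
    "special_personal_info": 4,
    "childrens_info": 3,
    "third_party_sharing": 2,
    "volume": 1,
}


def weighted_score(answers):
    """Sum each survey answer (0-5 scale assumed) multiplied by its criterion weight."""
    return sum(WEIGHTS[criterion] * answers[criterion] for criterion in WEIGHTS)


# Illustrative survey results per department.
survey = {
    "HR": {"special_personal_info": 5, "childrens_info": 0,
           "third_party_sharing": 2, "volume": 3},
    "Marketing": {"special_personal_info": 1, "childrens_info": 2,
                  "third_party_sharing": 5, "volume": 4},
    "Finance": {"special_personal_info": 2, "childrens_info": 0,
                "third_party_sharing": 3, "volume": 2},
}

scores = {dept: weighted_score(answers) for dept, answers in survey.items()}
# Departments with the highest score are assessed first.
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Integer weights are used here only to keep the arithmetic exact; any scale works as long as it is applied consistently across departments.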

Figure 3 Assessing Risk: Type 1

Figure 4 Assessing Risk: Type 2

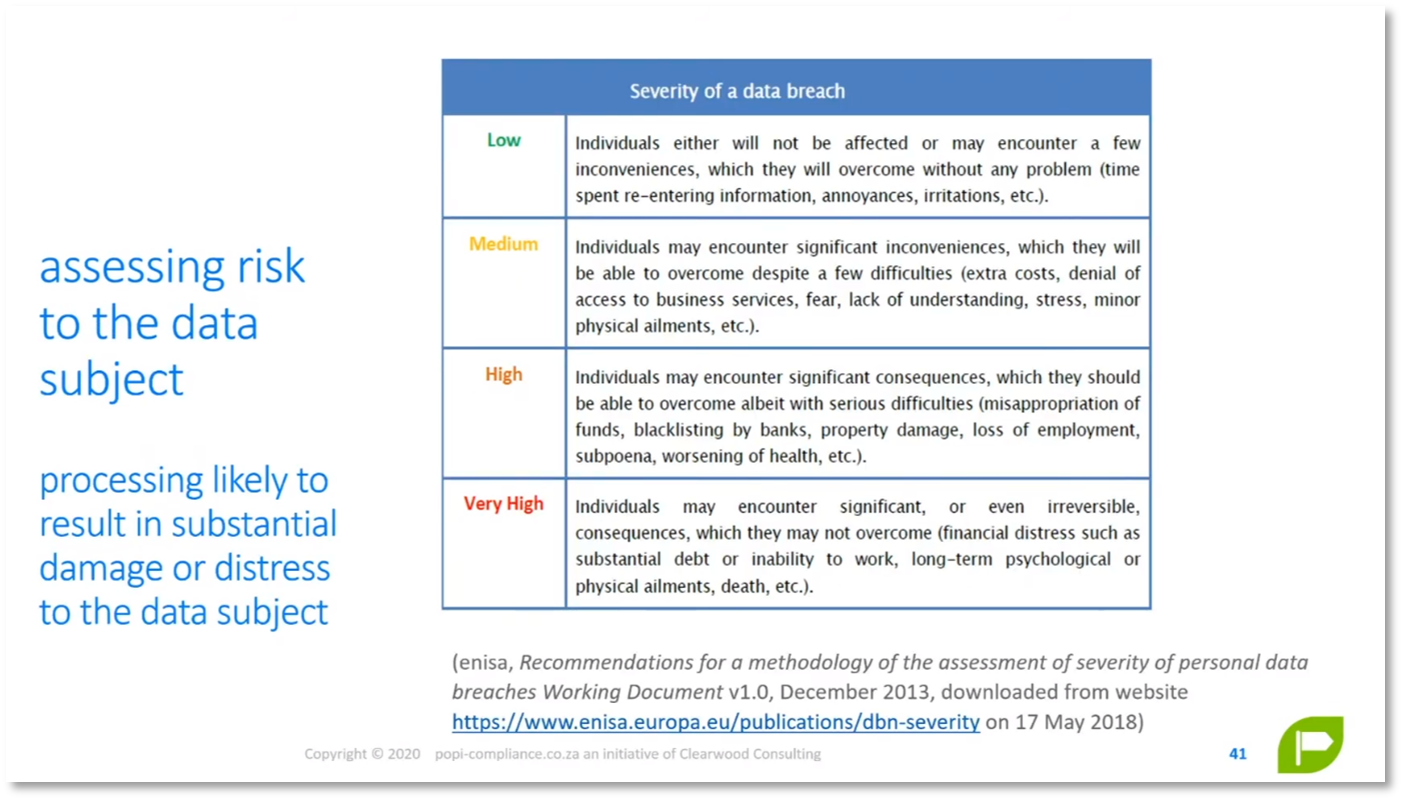

Risky Processing for Type Three

In digital marketing, cookies, pixel tracking, and re-advertising (retargeting) can pose risks at hardware endpoints. Risk surveys and direct marketing campaigns should take pixel tracking into account. Organisations should be mindful of data flows, automated decision-making, and how data breaches are managed. Risk should be defined in terms of both the organisation and the data subject. The data subject can also be a juristic person, not just a natural person. Lastly, the lack of control over emails once they leave the organisation's servers is a concern.

Figure 5 Assessing Risk: Type 3

Figure 6 Assessing Risk to the Data Subject

Risks and Accountability of Cloud-Based Systems

Cloud-based systems such as Office 365 may pose a risk to organisations dealing with sensitive data because the organisation has little control over where data is stored, which can lead to breaches of privacy laws. Cloud solutions may also limit the ability to manage and report on data, especially when processing special personal information or information about children. Strict measures may need to be taken to limit cloud data placement, potentially affecting organisational functionality. Thus, it is crucial to question and carefully consider cloud systems and their potential impacts on data subjects. While emails may pose some risks, the primary concern should be the use of cloud-based systems and the potential exposure of sensitive information. Cloud providers should be held accountable and be transparent about their data processing practices through data processing agreements to ensure compliance with privacy regulations.

Data Privacy Program and Data Classification

A privacy program's main goal is to assign responsibilities, define boundaries, and determine how to handle data, focusing on mitigating risks and prioritising data subjects' safety. Microsoft email offers data privacy and classification tools, including rules and controls to manage personal information. Howard touches on Protégé, a tool he uses to support building a data ontology. The process involves creating taxonomies and hierarchies to classify data and build an asset catalogue.

Figure 7 Penalties & Fines Taxonomy Facets

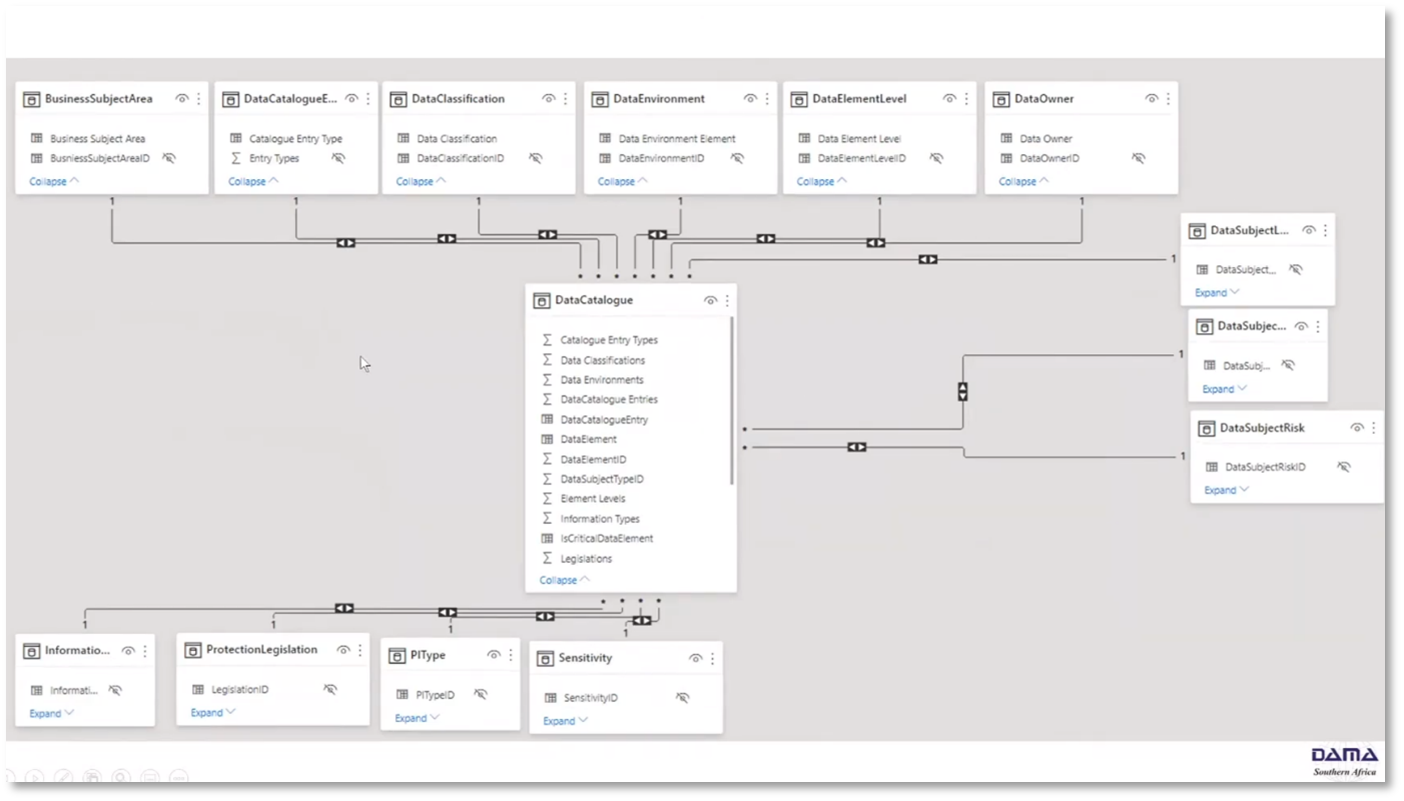

Data Catalogue and Building a Data Model

Building a data catalogue involves categorising data and adding business processes and flows, including manual parts and system applications or integration. To help with this, tools such as Oracle have GDPR compliance modules that allow for data classification across different tables. Reference data elements in the data catalogue serve as a basis for building a data model and extracting information, making it easier to understand and analyse data.

Figure 8 Data Catalogue and Building a Data Model

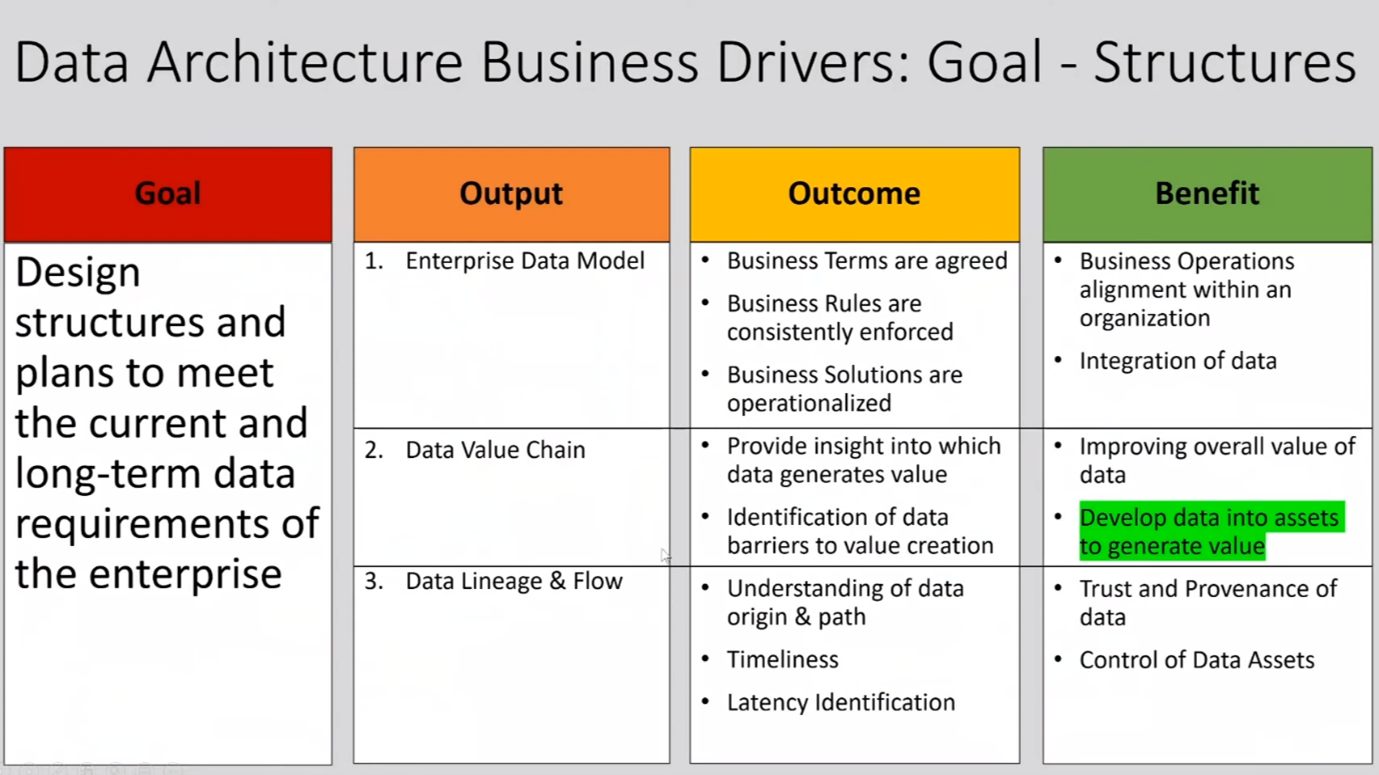

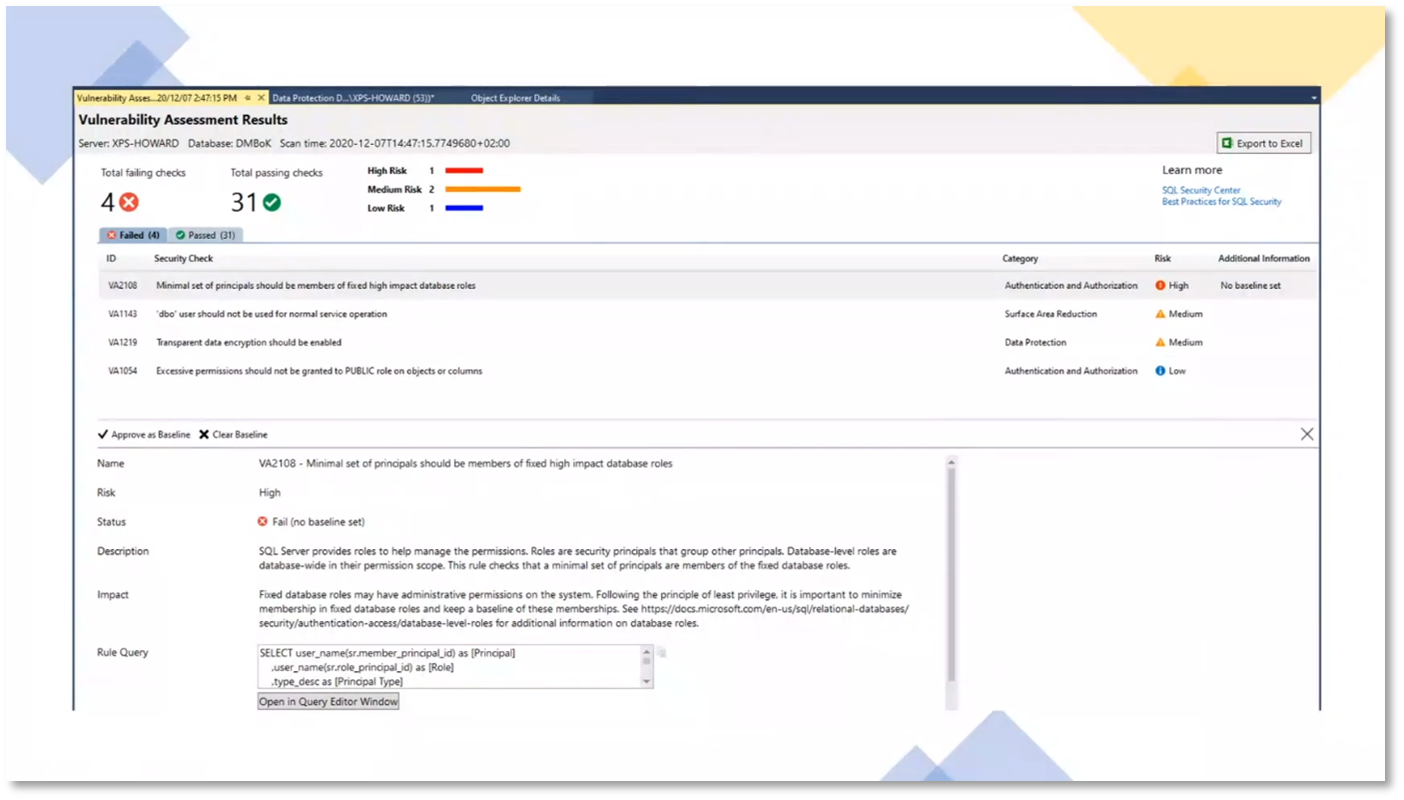

Data Architecture and Data Governance

Howard outlines the key steps in vulnerability checks and in profiling business processes and software assets. These involve building a set of options for areas of vulnerability, operating at a data asset level, collecting data, and understanding data movement and integration across platforms. Responsibility for managing data assets lies with data architecture, supported by a well-defined enterprise data model, a data value chain, and data lineage. Other critical considerations include control of data assets, privacy by design, and centralising accountability. The roles and responsibilities that the DMBOK describes for data architecture must be clearly defined within the organisation to ensure effective management of data assets.

Figure 9 Data Architecture Business Drivers: Goals - Structures

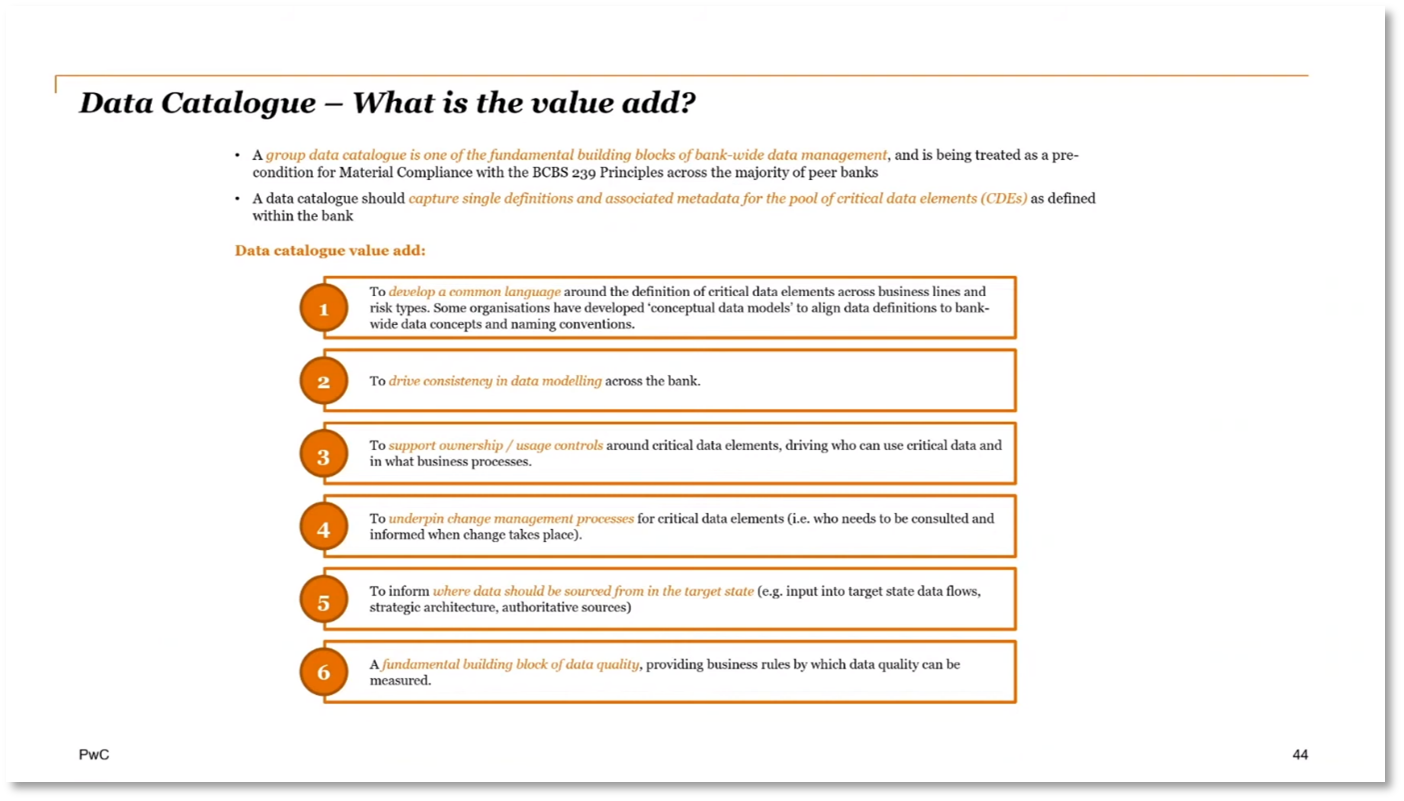

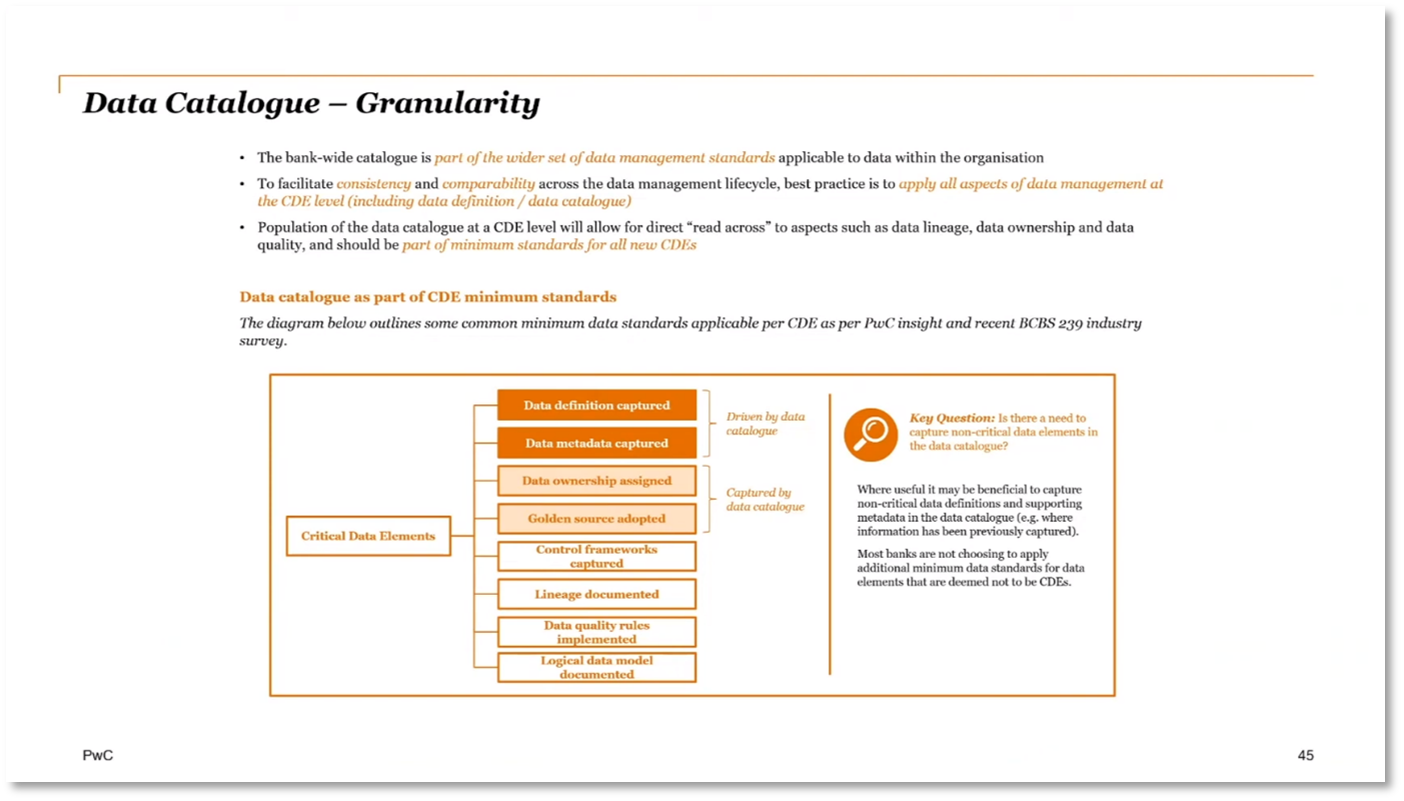

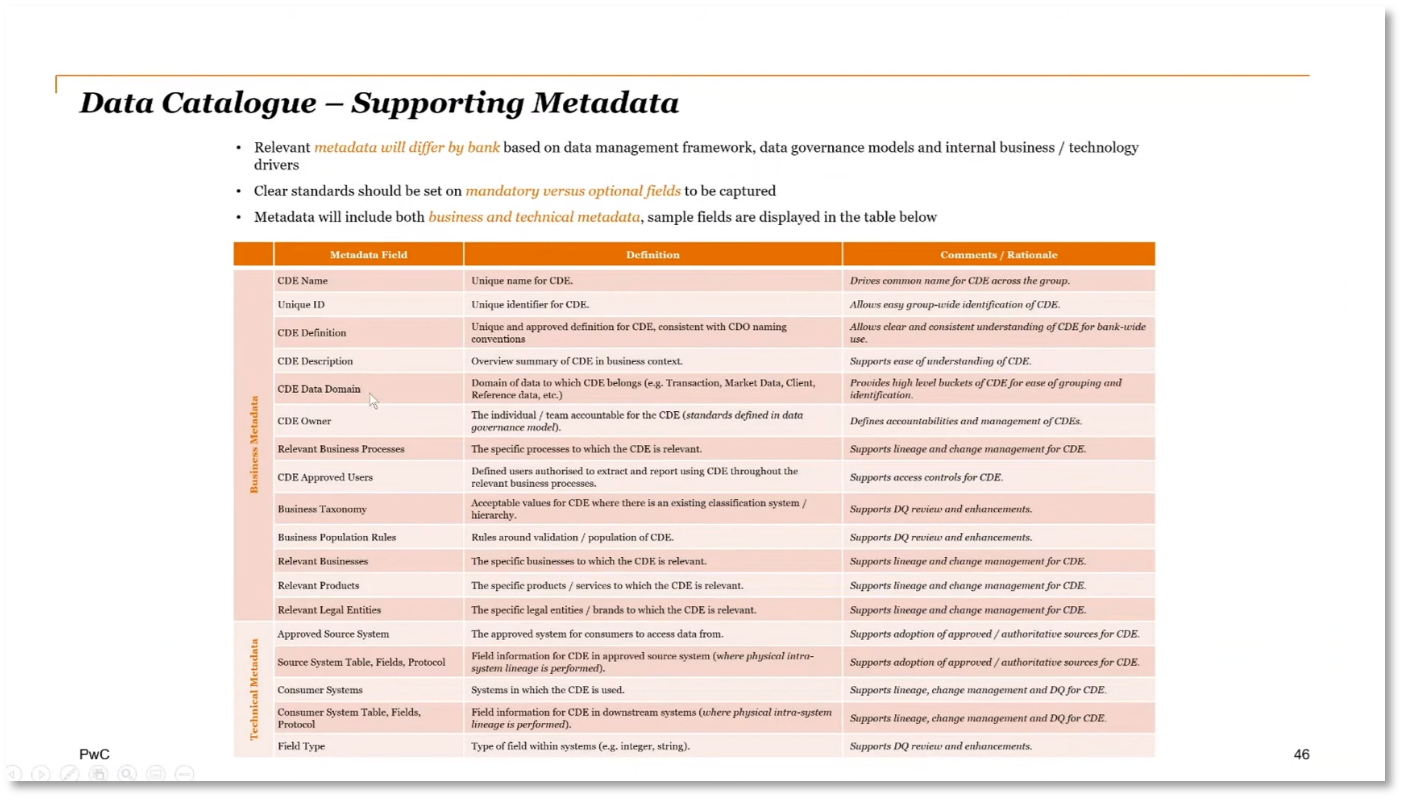

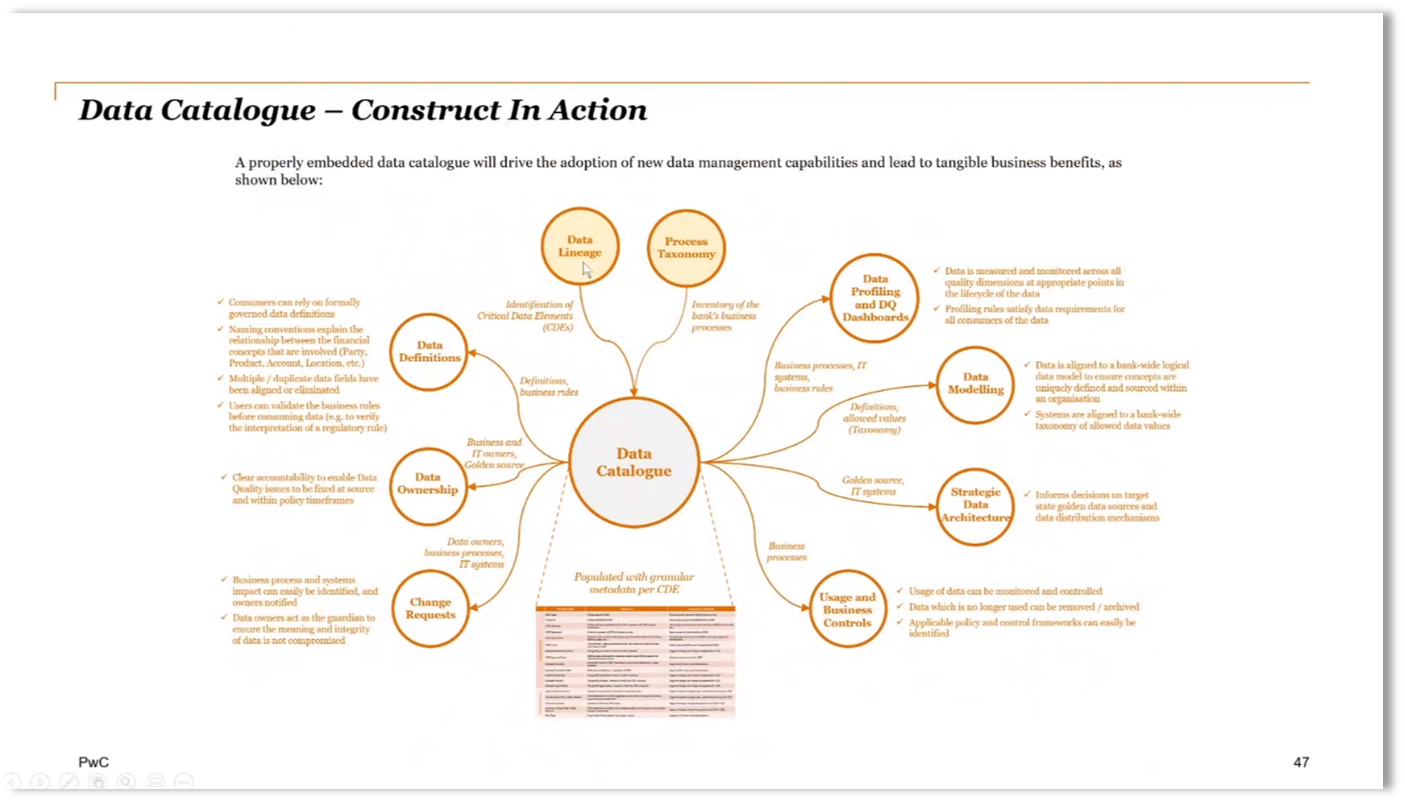

Overview of Data Governance and the Importance of a Data Catalogue

Data governance is essential for maintaining compliance and ensuring proper handling of data. Architects must understand the entire data dictionary, data model, and data lineage and flow. The importance of a data catalogue cannot be overstated in preparing for risk management, identifying exposure, and understanding the risk of data processing. It's crucial to capture metadata such as data definitions, domains, owners, related business processes, taxonomy, population rules, and legal entities within a data catalogue. The definition of critical data elements should be extended to include Personally Identifiable Information (PII) and sensitive data.
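The metadata listed above could be captured in a structure along the following lines. This is a minimal sketch: the field names are assumptions chosen to mirror the list in the text, not the schema of any particular catalogue product.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogueEntry:
    """One data element in a catalogue, with the supporting metadata named in the text."""
    name: str
    definition: str
    domain: str
    owner: str
    business_processes: list = field(default_factory=list)
    taxonomy: str = ""
    population_rule: str = ""
    legal_entity: str = ""
    is_business_critical: bool = False
    is_pii: bool = False
    is_sensitive: bool = False

    @property
    def is_critical(self):
        # Extended definition: PII and sensitive data count as critical data elements.
        return self.is_business_critical or self.is_pii or self.is_sensitive


# Hypothetical example entry.
entry = CatalogueEntry(
    name="customer.email_address",
    definition="Primary contact email for a customer",
    domain="Customer",
    owner="Head of Sales",
    business_processes=["Onboarding", "Direct marketing"],
    legal_entity="Example Trading (Pty) Ltd",
    is_pii=True,
)
print(entry.name, "critical:", entry.is_critical)
```

Treating the critical flag as a derived property means an element becomes critical as soon as it is classified as PII or sensitive, without a separate manual step.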

Figure 10 Data Catalogue - What is the Value Add?

Figure 11 Data Catalogue - Granularity

Figure 12 Data Catalogue - Supporting Metadata

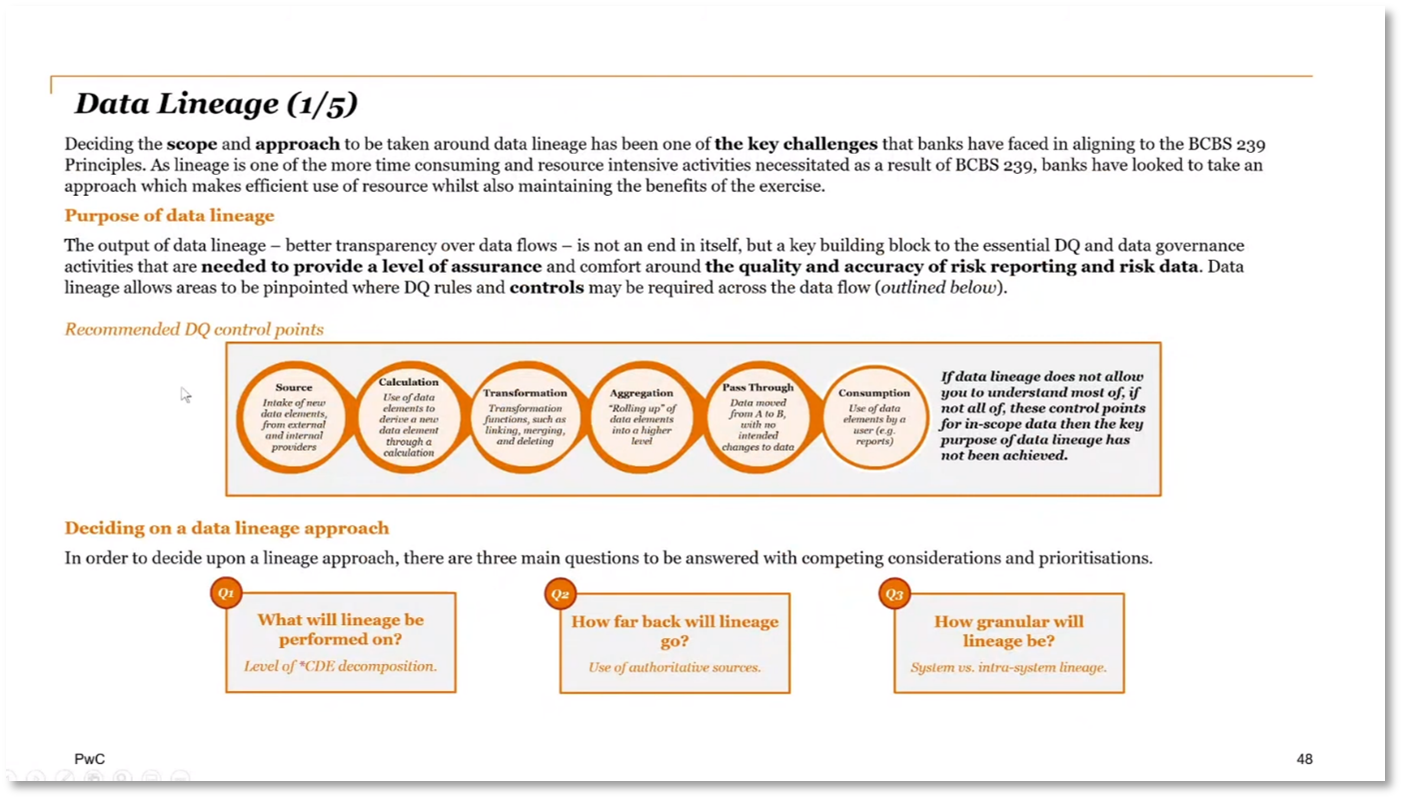

Building a Data Lineage Approach

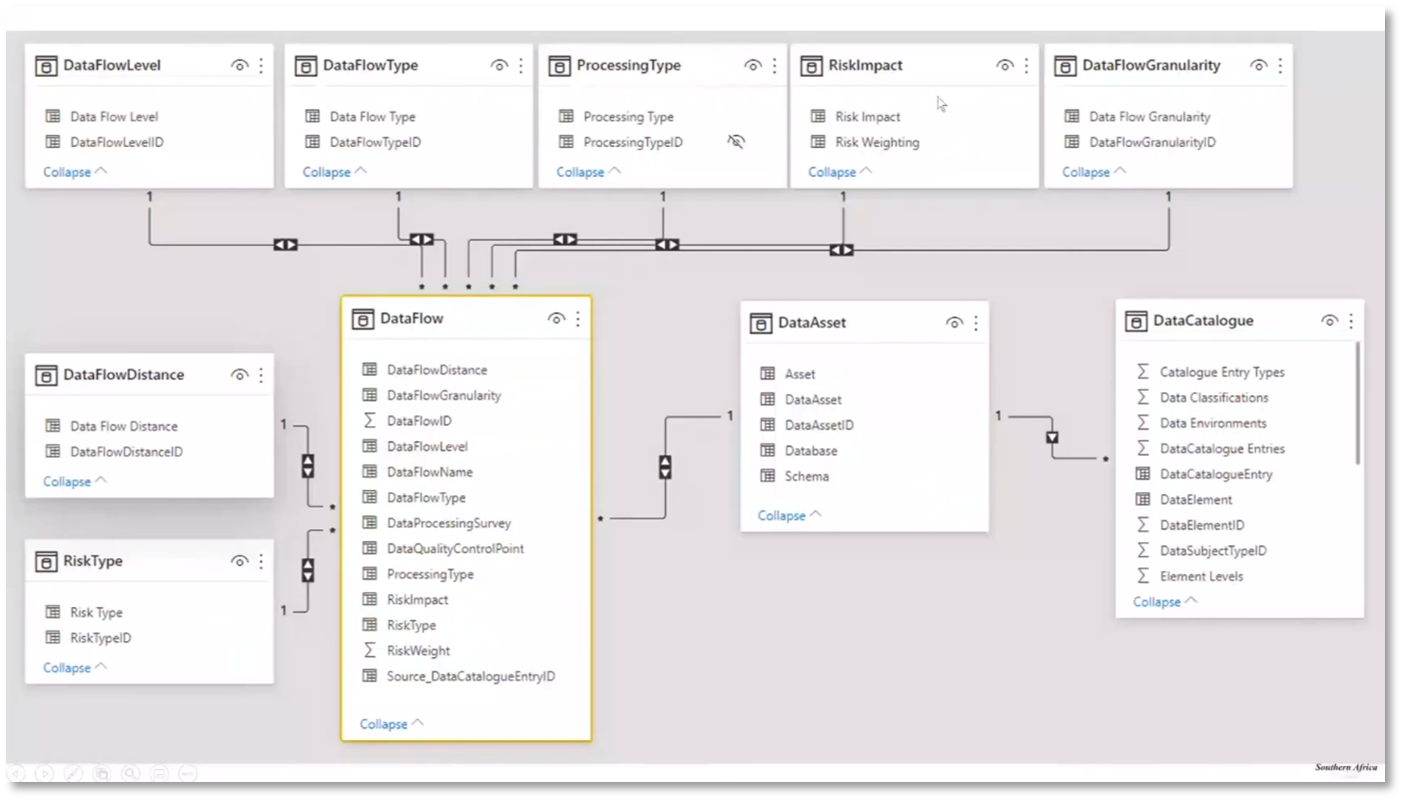

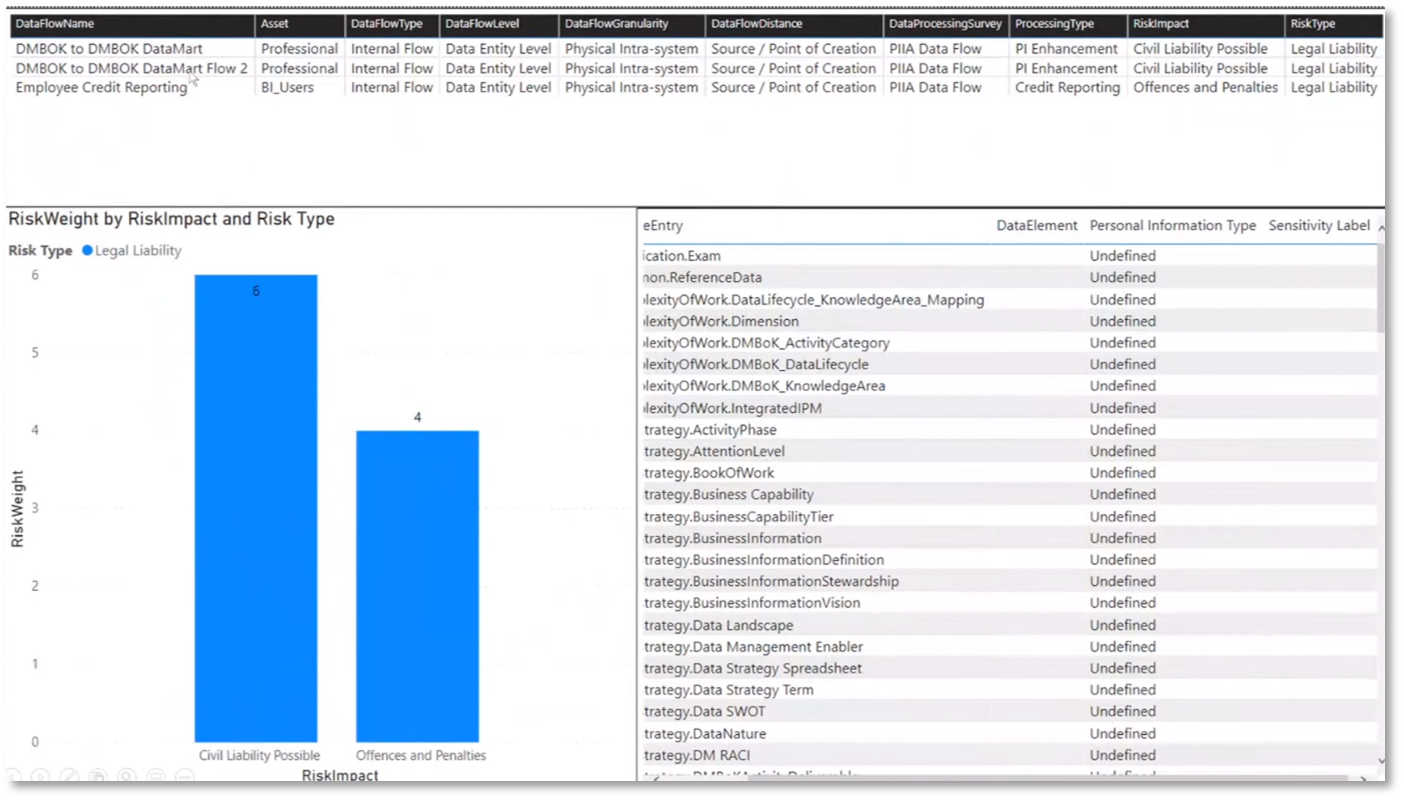

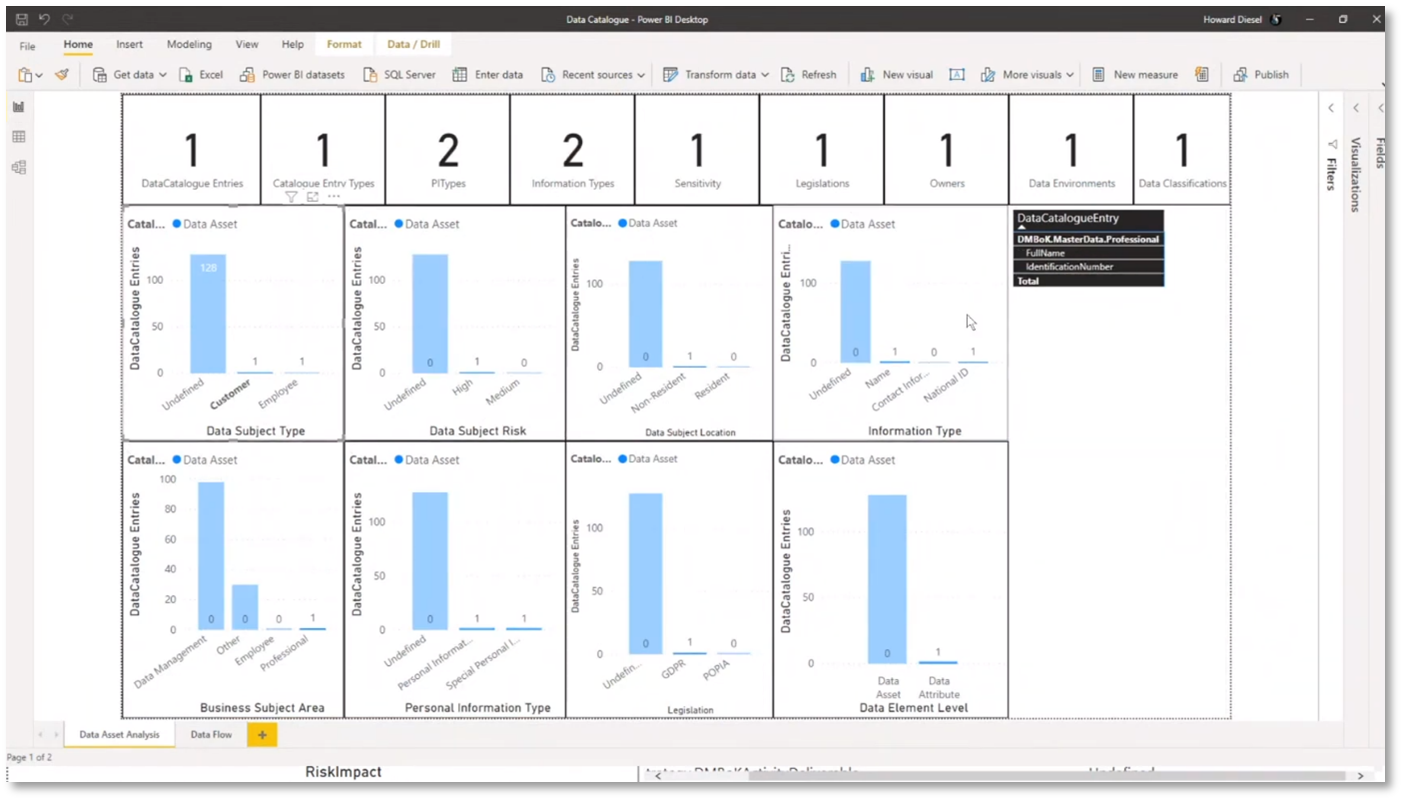

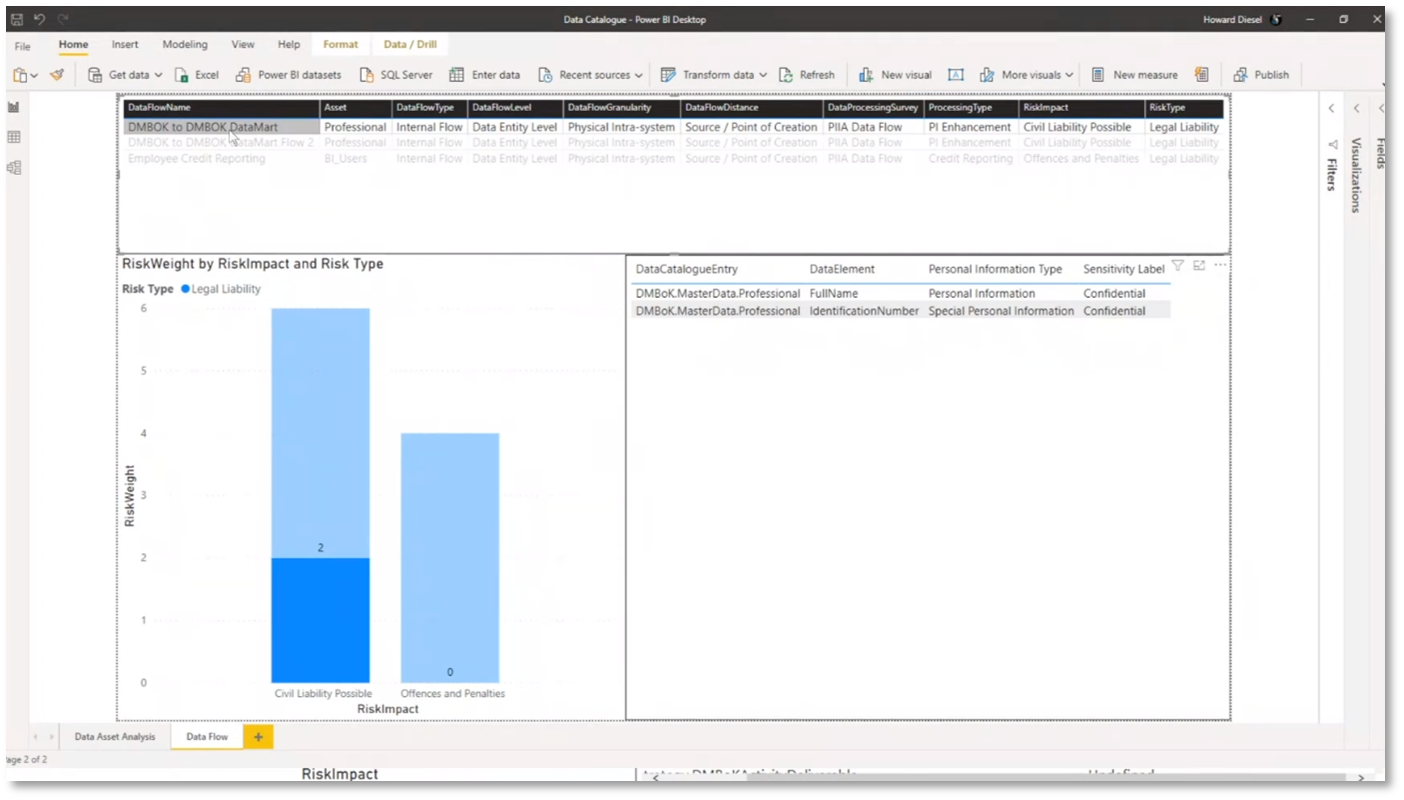

Building a comprehensive data catalogue requires an understanding of data profiling, data quality (DQ) definitions, data models, architecture, business controls, change requests, ownership, and definitions. Data lineage is critical to building a robust data quality control system that tracks data from source to consumption, including processes like transformation, aggregation, and calculation. The level, scope, and granularity of the lineage approach need to be considered, and it can start at a higher level, such as the application level, before moving on to databases and systems. When building a Power BI data model from an Excel definition, it is crucial to capture data assets, their flow level, flow type, processing type, and risk impact, using hierarchies to join these elements.
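Lineage at the application level can be pictured as a small directed graph of flows. The sketch below, with hypothetical asset names and process types, walks that graph to list everything downstream of a given source; it illustrates the idea only and is not the Power BI model described in the webinar.

```python
from collections import deque

# Each flow is (source asset, target asset, process type). All names are hypothetical.
FLOWS = [
    ("crm_app", "staging_db", "extract"),
    ("staging_db", "customer_mart", "transformation"),
    ("customer_mart", "sales_dashboard", "aggregation"),
    ("hr_app", "staging_db", "extract"),
]


def downstream(asset, flows):
    """Breadth-first walk returning every asset that receives data from `asset`."""
    seen = {asset}
    queue = deque([asset])
    order = []
    while queue:
        current = queue.popleft()
        for source, target, _process in flows:
            if source == current and target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


crm_lineage = downstream("crm_app", FLOWS)
print(crm_lineage)
```

The same list of flows could later be refined to database or column level by replacing the asset names with finer-grained identifiers.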

Figure 13 Data Catalogue - Construct in Action

Figure 14 Data Lineage

Figure 15 Data Lineage continued

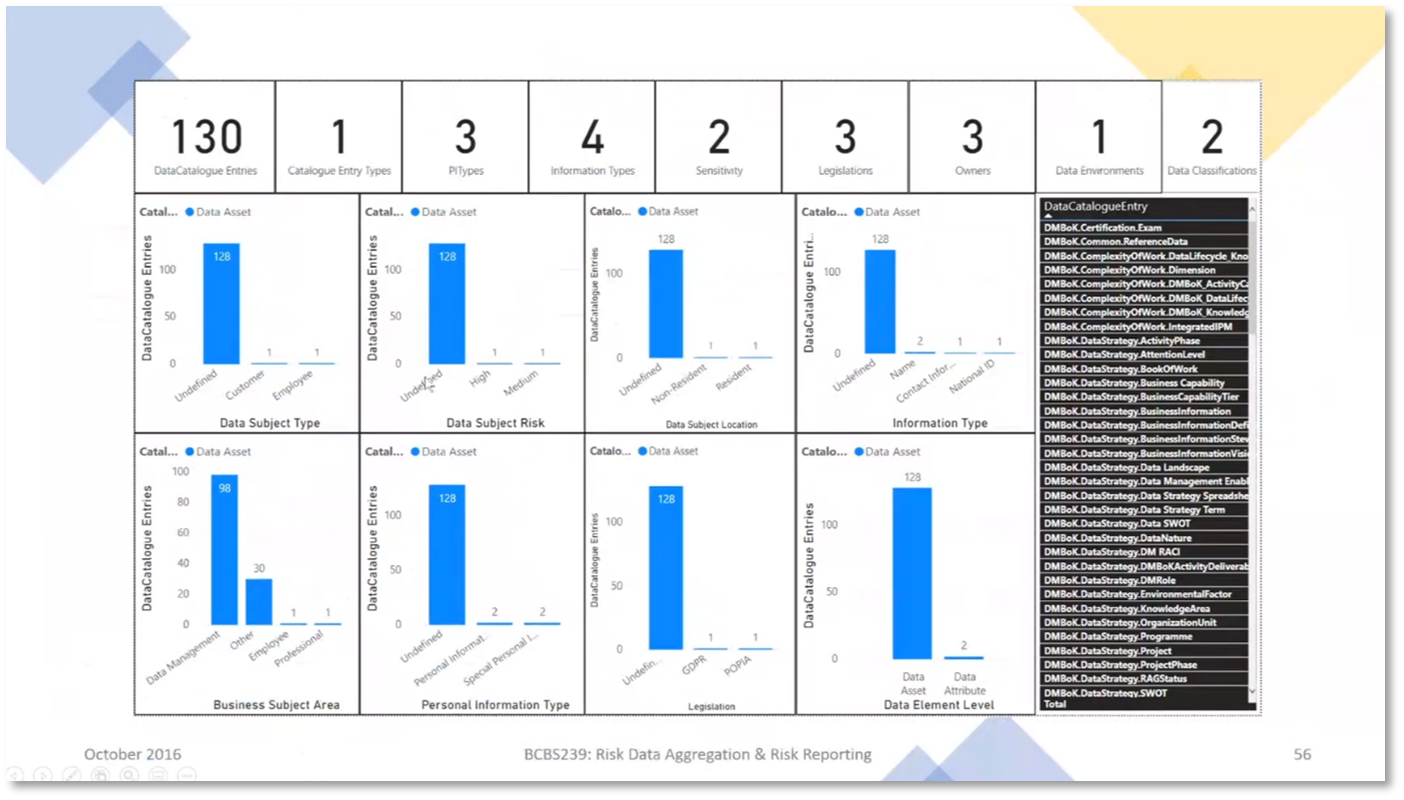

Data Asset Management and Analysis

Howard manages 128 data assets, including customer and employee information, with high and medium levels of risk, that fall under the General Data Protection Regulation (GDPR) and the Protection of Personal Information Act (POPIA). The breakdown of data assets covers the subject area, data catalogue entry type, data classification, environment, element levels, owner, data subject, risk type, personal information type, legislation, information types, and sensitivity. Data flows are reported to show the data assets involved, their impact, and their weight. Howard uses Power BI to create dashboards and analyse customer tables, employee information, GDPR-related data, and personal information classification. The data flows include an ETL process from a DMBOK database to a data mart, demonstrating the movement of professional information and the associated risk impact and weighting.
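The kind of breakdown described here can be approximated with a simple count over catalogue attributes. The five assets below are invented for illustration and do not come from the 128 assets in the webinar.

```python
from collections import Counter

# Hypothetical data assets with a few of the attributes named in the text.
assets = [
    {"name": "customer.email", "subject": "customer", "legislation": "GDPR", "risk": "high"},
    {"name": "customer.segment", "subject": "customer", "legislation": "GDPR", "risk": "medium"},
    {"name": "employee.id_number", "subject": "employee", "legislation": "POPIA", "risk": "high"},
    {"name": "employee.job_title", "subject": "employee", "legislation": "POPIA", "risk": "medium"},
    {"name": "employee.health_note", "subject": "employee", "legislation": "POPIA", "risk": "high"},
]

# Count assets per (legislation, risk level) pair, as a dashboard tile might.
summary = Counter((asset["legislation"], asset["risk"]) for asset in assets)

for (legislation, risk), count in sorted(summary.items()):
    print(f"{legislation:6} {risk:7} {count}")
```

Swapping the key for another attribute, such as data subject or sensitivity, gives the other slices of the breakdown.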

Figure 16 Data Asset Management and Analysis

Figure 17 Risk Data Aggregation & Risk Reporting

Figure 18 Risk Data Aggregation & Risk Reporting continued

Figure 19 Risk Data Aggregation & Risk Reporting continued

Figure 20 Risk Data Aggregation & Risk Reporting continued

Utilising Metadata to Query and Analyse Data Assets

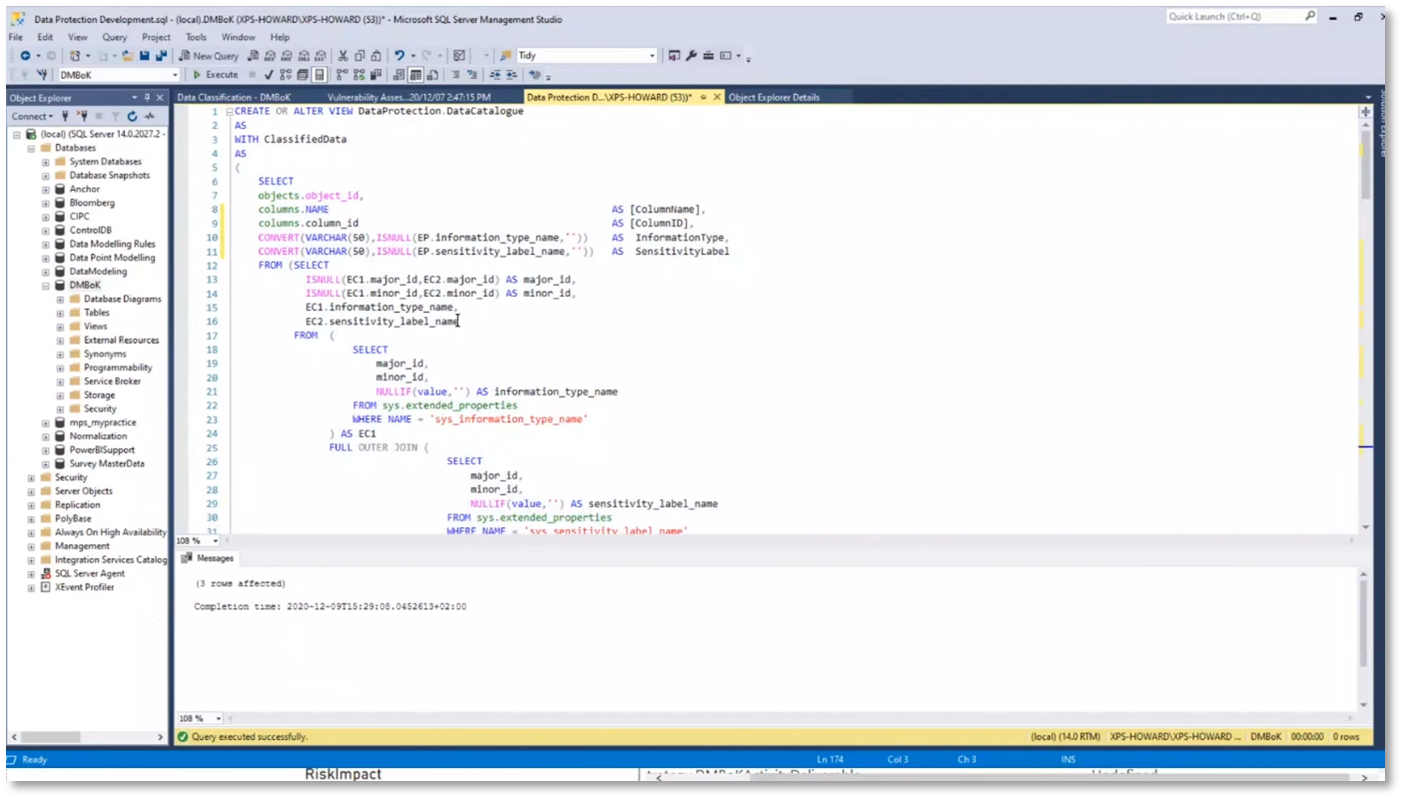

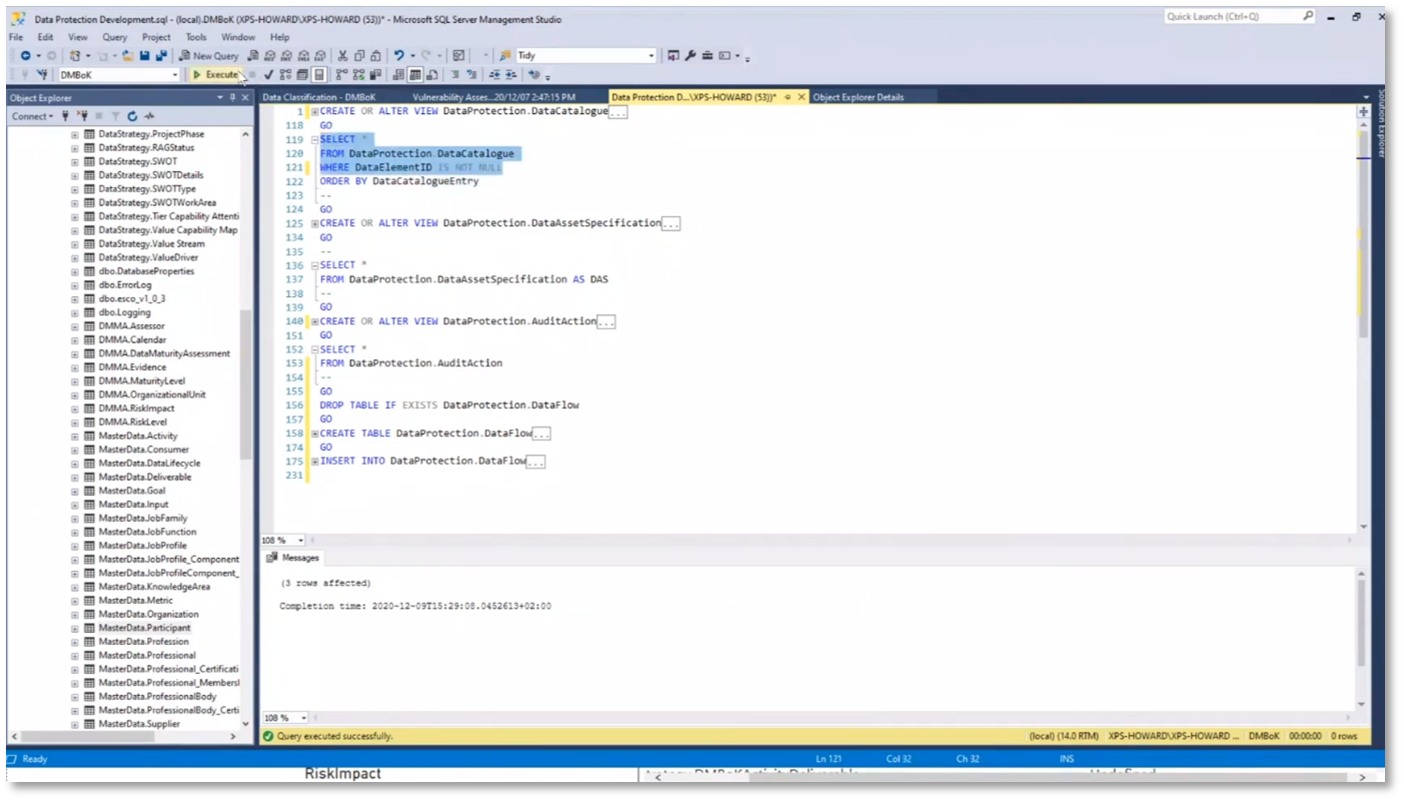

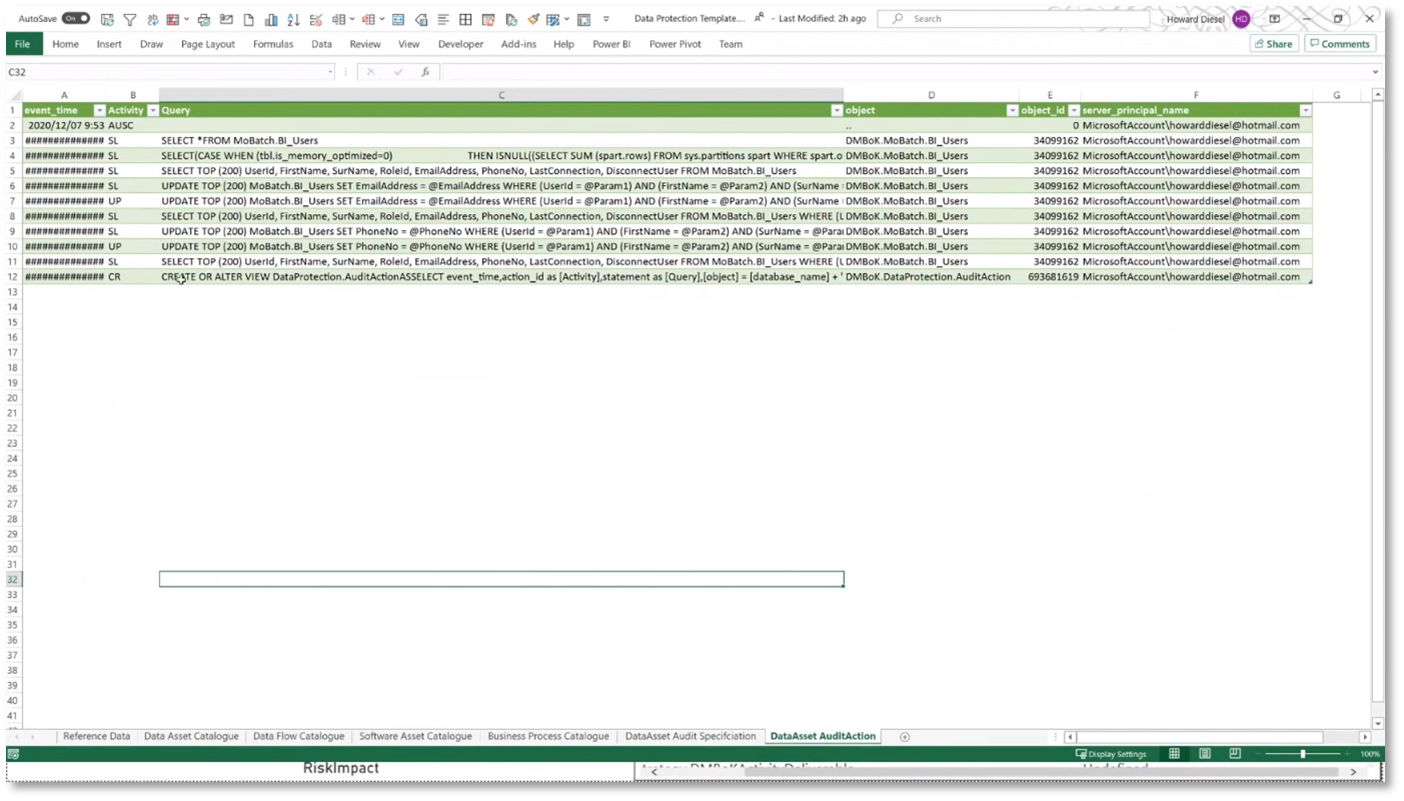

A heat map of data elements and related information can be created by classifying shared Oracle data. This classification allows simple SQL queries to access extended properties added to tables, including metadata such as the classification, database schema, table, asset type, data elements, sensitivity, data environment, and business owners. Storing metadata with data assets is more challenging with tools like Excel, because the metadata has to be kept in a separate database, apart from the data assets it describes. Oracle's tools, by contrast, make it possible to define asset specifications and to access audit actions, such as the update history and user access.
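To show what a simple SQL query over classification metadata might look like, the sketch below uses Python's built-in `sqlite3` module as a stand-in for the database used in the webinar. The `asset_metadata` table, its columns, and its rows are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical metadata table holding one row per classified data element.
conn.execute(
    """CREATE TABLE asset_metadata (
           schema_name TEXT, table_name TEXT, data_element TEXT,
           classification TEXT, sensitivity TEXT,
           environment TEXT, business_owner TEXT)"""
)
rows = [
    ("sales", "customer", "email", "PII", "high", "prod", "Head of Sales"),
    ("sales", "customer", "segment", "Internal", "low", "prod", "Head of Sales"),
    ("hr", "employee", "id_number", "PII", "high", "prod", "HR Director"),
    ("hr", "employee", "salary", "Confidential", "high", "prod", "HR Director"),
]
conn.executemany("INSERT INTO asset_metadata VALUES (?, ?, ?, ?, ?, ?, ?)", rows)

# Count elements per table and sensitivity level: the raw input for a heat map.
heat = conn.execute(
    """SELECT table_name, sensitivity, COUNT(*)
       FROM asset_metadata
       GROUP BY table_name, sensitivity
       ORDER BY table_name, sensitivity"""
).fetchall()

for table_name, sensitivity, count in heat:
    print(table_name, sensitivity, count)
```

The grouped counts can be fed directly into a reporting tool, with tables on one axis and sensitivity levels on the other.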

Figure 21 Utilising Metadata to Query and Analyse Data Assets

Figure 22 Utilising Metadata to Query and Analyse Data Assets Continued

Data Management and Reporting

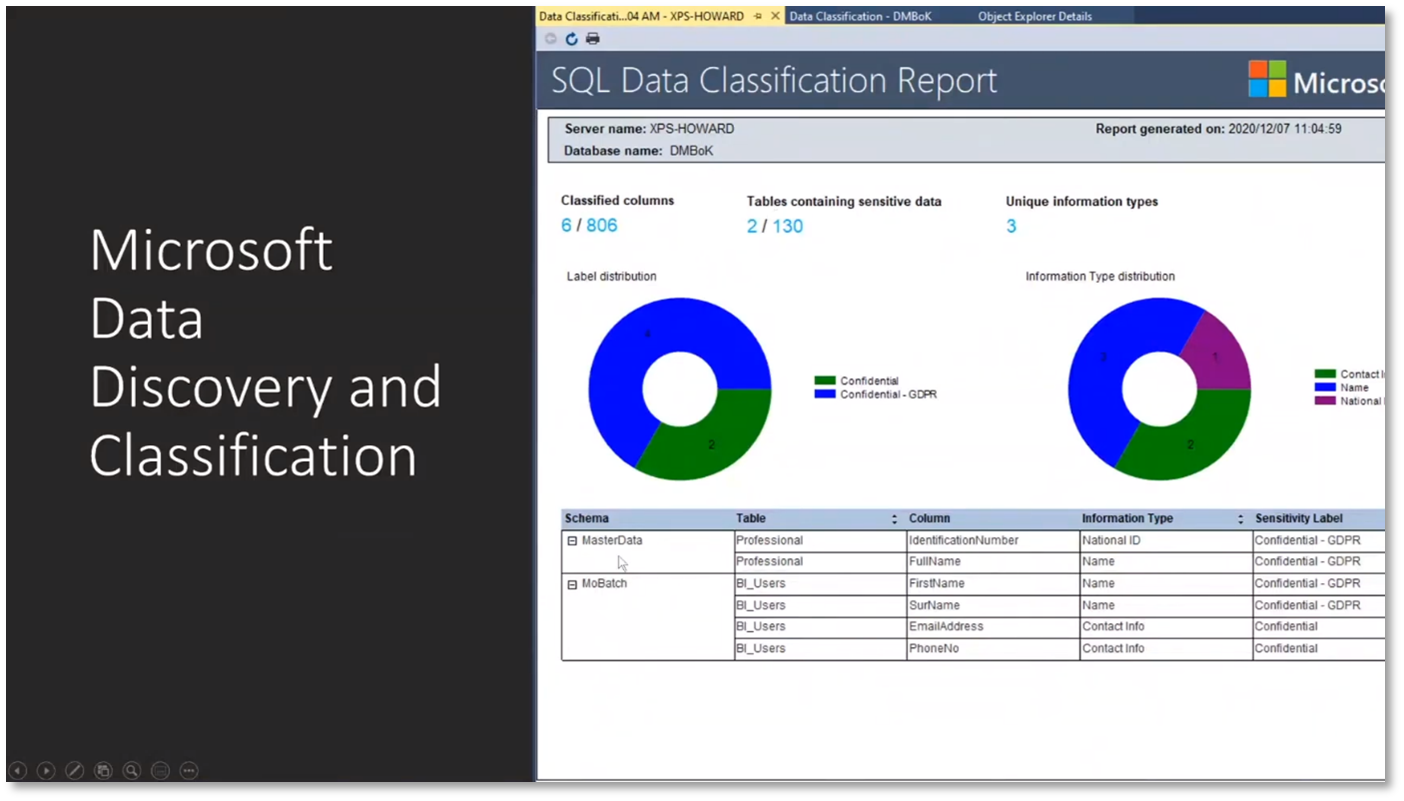

Howard emphasises the importance of data management and reporting for successful event planning. The complexities of collecting, classifying and assessing data using SQL and the significance of presenting data to risk management teams are discussed. Quality fixes are also highlighted as crucial, and Howard expresses enthusiasm for preserving evidence of quality rules for both customer and employee data elements.

Figure 23 Data Management and Reporting

Figure 24 Microsoft Data Discovery and Classification

Figure 25 Vulnerability Assessment Results

Data Lake, Data Source, Data Catalogue, and Data Governance

In the past, the focus was on the big data environment and the data lake. Nowadays, data lakes have shifted to being data sources, and having a data catalogue is essential. Big data and data lake providers offer data catalogues, emphasising the need to collect reference data. The availability of reference data is crucial for assessing the risk weighting of different data assets in the data lake. Publicly available information can be used, but data subjects must be informed, and their rights must be respected. Having a legal justification for using data does not exempt an organisation from respecting the data subject's rights. Digital decision-making requires adherence to data privacy regulations. It is important to be aware of red flags in data governance and to know when to escalate issues. PolyBase in SQL Server allows external files, such as Excel or CSV files, to be mapped into the SQL environment, where metadata can be added to them.

Importance of Data Compliance and Privacy by Design in Automated Decision-Making

Assessing compliance and privacy is important when purchasing a system involving automated decision-making. Caroline notes that privacy by design should be a priority in enterprise data models, and discussions about data privacy must occur during attribute mapping. Companies that understand their data can increase their value. To avoid trouble, companies should adhere to the conditions for lawful processing and minimise data to only what is necessary for current processing. Caroline suggests that using a unique identifier and separating sensitive details can reduce potential exposure. Translating risk into a data structure gives risk and audit teams greater confidence. Data professionals are crucial in implementing and maintaining data compliance and privacy.
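The suggestion to use a unique identifier and separate sensitive details might look something like the following. The record layout and the choice of which fields count as sensitive are assumptions for the example; a real design would also need access controls on the sensitive store.

```python
import uuid


def split_record(record, sensitive_fields):
    """Split one record into a core part and a sensitive part linked by a random identifier."""
    subject_id = str(uuid.uuid4())  # random surrogate key, carries no personal meaning
    core = {"subject_id": subject_id}
    vault = {"subject_id": subject_id}
    for key, value in record.items():
        if key in sensitive_fields:
            vault[key] = value  # kept in a separately controlled store
        else:
            core[key] = value   # used for day-to-day processing
    return core, vault


# Hypothetical record and sensitivity choice.
record = {"name": "A. Example", "email": "a@example.com", "health_condition": "asthma"}
core, vault = split_record(record, {"health_condition"})
print(sorted(core), sorted(vault))
```

Day-to-day processing then works on the core record only, and the sensitive store is joined back through the identifier when there is a lawful need.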

If you want to receive the recording, kindly contact Debbie (social@modelwaresystems.com)
