Unified Metamodel for Data Executives

Executive Summary

This webinar highlights the importance of metadata, understanding FAIR, and developing a CDO self-measurement tool for effective data access and management. Howard Diesel emphasises the need for integration, shared understanding, and trust in data management, and elaborates on change control, effective knowledge management, and the measurement of learning. The webinar also covers the significance of the FAIR data principles, advanced data management practices, and the challenges and principles of open data. Furthermore, it discusses the different types of analytics and decision-making approaches, and strategies for effective business decision-making. Finally, Howard emphasises the importance of comprehensive assessments in understanding organisational progress and decision-making.

Webinar Details:

Title: Unified Metamodel for Data Executives

Date: 05 October 2023

Presenter: Howard Diesel

Meetup Group: Data Executives

Write-up Author: Howard Diesel

Contents

Executive Summary

Webinar Details

CDMP Fundamentals and Certification Levels

Certification and Training Opportunities for CDMP Exams

Summary of Talk on Unified Meta Model and CDO Self-Measurement Kit

Unifying Data for Effective Access and Measurement

The importance of metadata and understanding FAIR

Developing a CDO Self-Measurement Tool

The Importance of Integration, Shared Understanding, and Trust in Data Management

Change Control, Effective Knowledge Management, and Measurement of Learning

Improving Listening Hours for Customers

Decision-Making and Hierarchy in Business

Data Management Principles and Scales

The Importance of FAIR Data Principles

Impressed by Advanced Data Management Practices and the FAIR Framework

Challenges and Principles of Open Data

Reflecting on the Use and Ethical Considerations of Data Sets

Observations on Collaboration and Decision-Making Process

OODA Process and Decision-Making Techniques

Digital Decisioning Strategy and Process

Understanding the Different Types of Analytics and Decision-Making Approaches

Strategies and Methods for Effective Decision-Making

Quick decision-making and strategy alignment in business

Comprehensive View of Program Operation

Different Types of Organizations and Decision-Making

Importance of Data and Maturity Assessments in Decision Making

Importance of Comprehensive Assessments in Understanding Organizational Progress

Importance of Assessment before Project Quoting

CDMP Fundamentals and Certification Levels

The certification process for becoming an Associate has changed over the years. Previously, individuals were required to write all three exams to obtain a certification; now they can become Associates without writing the specialist exams. Because of the additional requirements and associated costs, there is less interest in pursuing the Master level. There are roughly 15 times as many Associates as Practitioners. Individuals who excel in the Fundamentals exam are encouraged to pursue further certifications, and some certification programs offer incentives, such as Calgary's pay-when-you-pass option. However, the option to request a specialist exam after passing the Fundamentals and paying for it is no longer available. There is a concern about the gap between the number of people trained and the number who complete the certification exams. Moreover, having all Associates become Practitioners may diminish the value of the Practitioner level. Finally, CDMP certifications have lower achievement rates than other certifications, raising concerns about the certification levels being attained.

Figure 1 CDMP Certified Professionals

Certification and Training Opportunities for CDMP Exams

Since 2006, close to 2,900 people have been certified by the organisation, primarily in Data Vault modelling. Individuals are encouraged to pursue additional levels and specialist exams. The upcoming Fundamentals training course, starting next week, offers detailed study notes, practice questions, mind maps, and a study group. If exam results are unsatisfactory, individuals can opt for the CDMP DIY product, which provides a refresher course and customisable bundles. Note that all three CDMP exams must be completed within three years, before the certification expires. Specialist training courses include Data Governance and Data Quality, with upcoming dates secured. The organisation is considering future specialist courses in data modelling, analysis, and planning.

Summary of Talk on Unified Meta Model and CDO Self-Measurement Kit

Debbie provided information about upcoming courses in November and promised to share more details. Howard emphasises the limitations of a DMMA (Data Management Maturity Assessment) and the need to assess data quality, business trust, and the integration of capabilities. Ashleigh Faith's work on linking data and knowledge graphs was discussed. Because of the large amount of content to cover in the webinar, Howard could not elaborate further on the Unified Metamodel and its various metadata stores.

Unifying Data for Effective Access and Measurement

Howard outlines the challenges of unifying data from various sources to provide a comprehensive view, and the need for a self-measurement tool for Chief Data Officers (CDOs) tasked with implementing data management offices. He seeks advice on how CDOs can effectively measure and communicate their progress in data management, and shares an example of using statistics and graphs to report progress. Howard also considers new measures, such as data literacy, AI literacy, governance literacy, and the number of staff trained, as indicators of progress for CDOs. Lastly, he recounts setting up a sales leaderboard at a hydraulic supermarket: it was initially met with excitement, but once measurement was implemented, some employees began manipulating sales data.

Figure 2 DM Unified Metamodel Deep-Dive

Figure 3 Data Executive Requirements

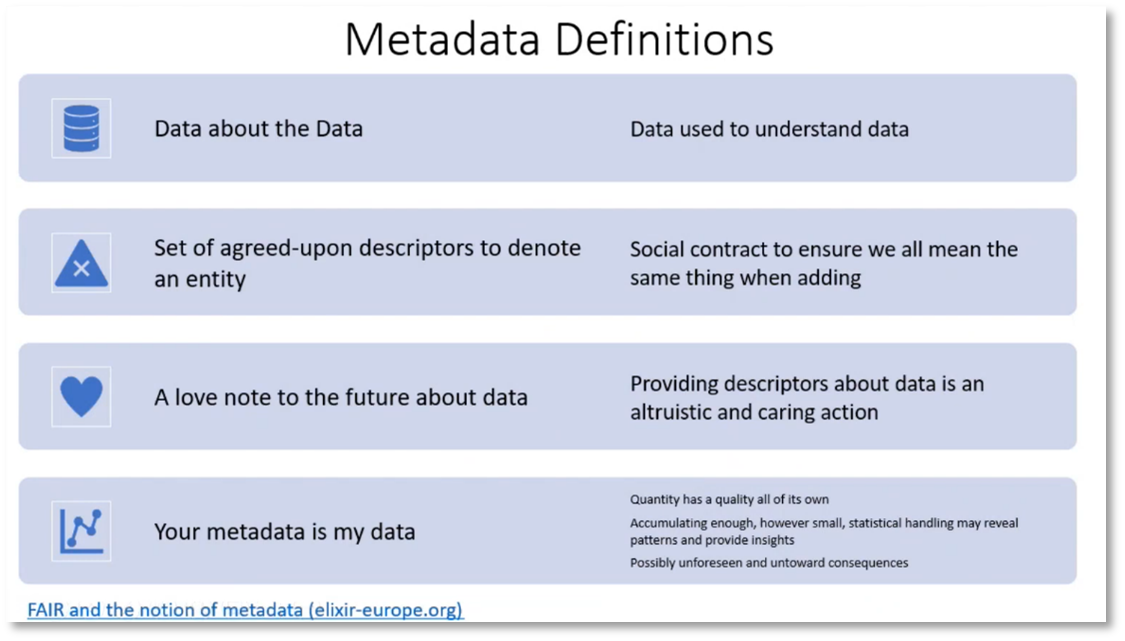

The importance of metadata and understanding FAIR

Howard highlights the challenge of accurately measuring people and stresses the importance of comprehensive data management. He discusses metadata and its roles, including describing entities and serving as a "love note to the future" about the data, and emphasises the increasing value of collecting metadata in the age of generative AI. He introduces the concept of FAIR, which stands for Findable, Accessible, Interoperable, and Reusable, and asks about the audience's understanding of it.

Figure 4 Metadata Definitions

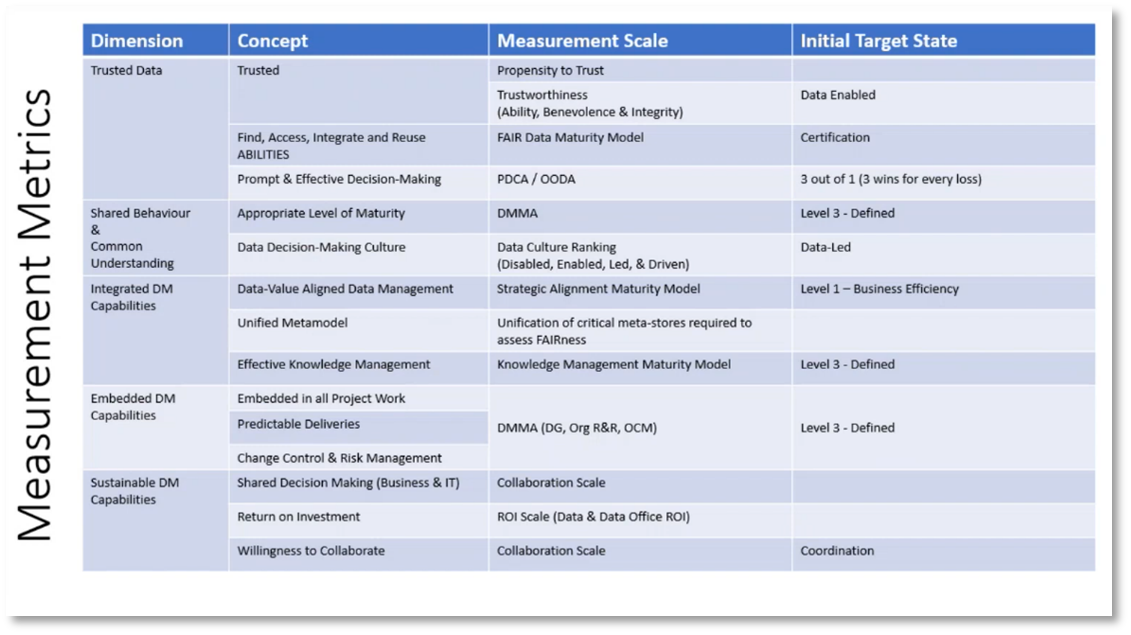

Developing a CDO Self-Measurement Tool

Howard discusses the importance of FAIR in the scientific community and its absence in the business community. He has been tasked with creating a self-measurement tool that a Chief Data Officer can use to track their data management office's progress. Howard identifies sustainable data management capabilities, such as maintaining knowledge management and embedding data management in development processes. He also highlights a common integration issue between the data quality and business intelligence departments in organisations. Additionally, Howard mentions the need for guidance on how the different capabilities in the Data Management Body of Knowledge (DMBOK) can work together.

Figure 5 What does GOOD look like?

The Importance of Integration, Shared Understanding, and Trust in Data Management

Effective data management is crucial for businesses and organisations to ensure the accuracy and reliability of information. However, the lack of an overall system hinders the integration of different components of data management. To overcome this, shared understanding and common behaviour are necessary, which includes understanding data models and business glossaries. Additionally, trust in the data is crucial as decision-making relies on the accuracy and reliability of the provided information. Communication plays a significant role in data management, ensuring that information is effectively shared and easily accessible. Lastly, findability is a crucial aspect of data management, requiring clear and accessible documentation rather than relying on vague references like "it's on Confluence."

Change Control, Effective Knowledge Management, and Measurement of Learning

The webinar covers various aspects of organisational management. It highlights the importance of change control, effective communication, and employee understanding. The Unified Metamodel is a critical aspect of organisational management that allows easy access to information. Maturity assessments have revealed that organisations lack evidence, policies, procedures, and data strategies, which can impact their overall performance. Change management, knowledge management, and collaboration are essential for organisational success, but cultural differences can impact implementation. Learning measurement should go beyond tracking hours to include recognised learning and value delivered. Finally, the "one metric that matters" approach, such as Spotify's listening hours, can provide a meaningful gauge for organisational performance and progress.

Figure 6 Integrated, Embedded and Sustainable DM Capabilities

Figure 7 Measurement scorecard OMTM (The One Metric That Matters): Trustworthiness

Improving Listening Hours for Customers

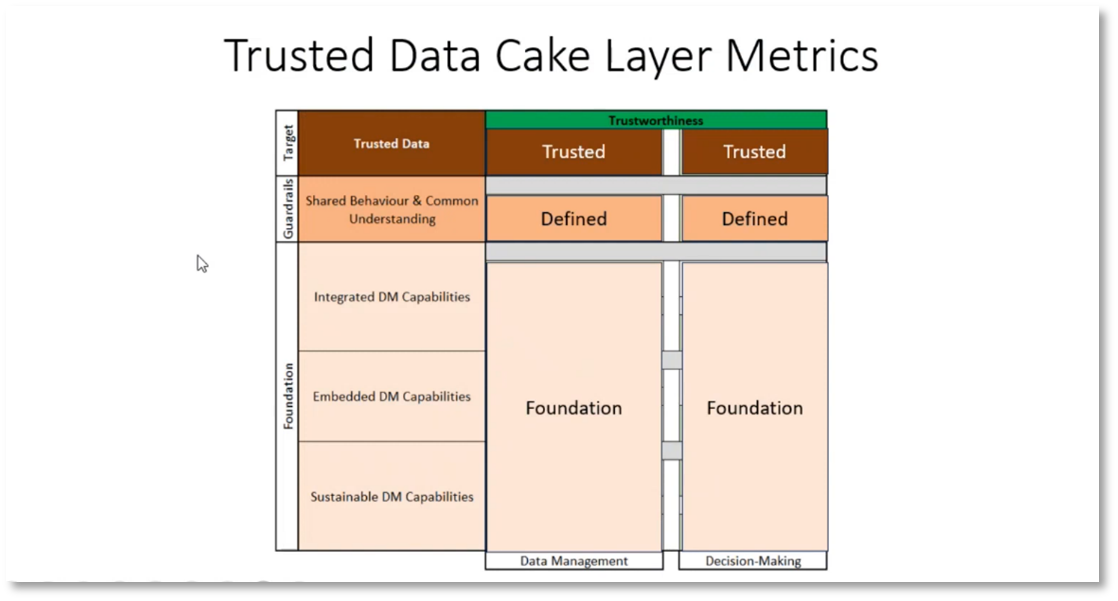

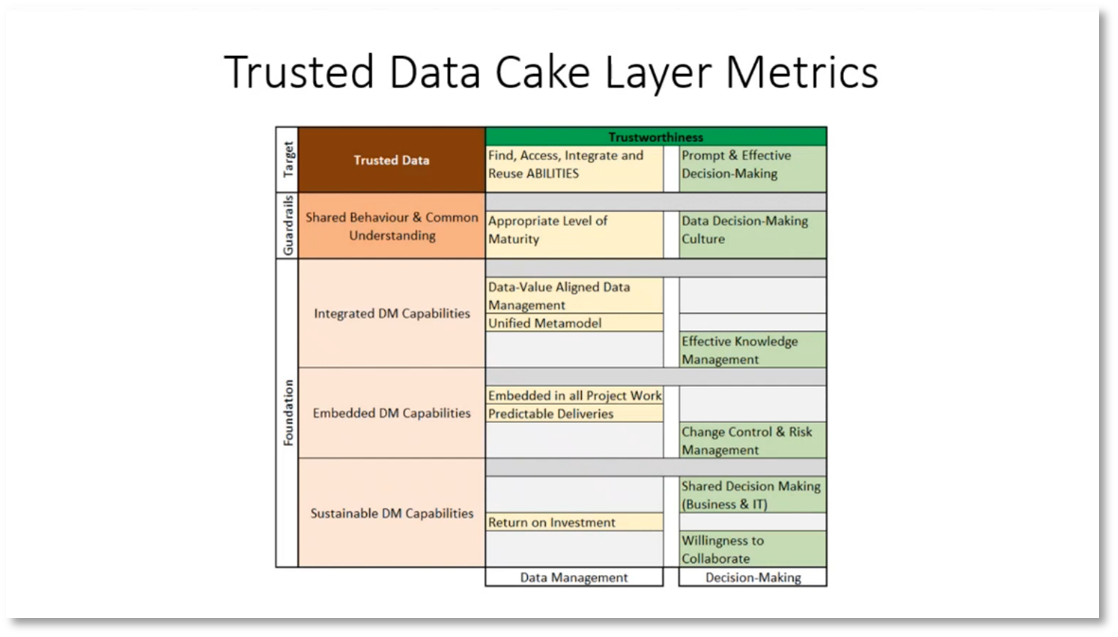

The key aspects of data management and business decision-making culture are highlighted. Howard emphasises the importance of understanding each department's contribution to overall data, building trust in data for making informed decisions, and addressing data trustworthiness. Additionally, he highlights the significance of effective data management for achieving ROI, predictable deliverables, unified and aligned data, and data maturity. Developing a data-driven culture takes time and requires building trust in the data for decision-making. Encouraging people to make data-based decisions is essential for creating a culture of data-driven decision-making in businesses.

Figure 8 Climbing the Ladder

Figure 9 Measurement Metrics

Figure 10 Trusted Data Cake Layer Metrics

Figure 11 Trusted Data Cake Layer Metrics Continued

Decision-Making and Hierarchy in Business

Effective decision-making is crucial in business, and understanding its hierarchy is essential. In this hierarchy, lower-level decisions are easier to make than high-level strategic decisions. Building the hierarchy requires rolling up numbers from the lower levels and analysing the decision-making process. Ratios are used in business decision-making, with the top-level ratio distributed across multiple contributing metrics. However, different areas may not contribute equally to the final metric that matters. There are two domains to consider: culture and change management, and decision-making. Quantifying each decision-making level can be challenging, as subjective opinions must be removed and replaced with scores. Overall, understanding the decision-making process and its hierarchy is crucial for effective business decision-making.
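The roll-up described above can be sketched as a weighted tree of metrics. This is a minimal illustration, not Howard's scoring model: the metric names, weights and ratios are hypothetical, and a weighted mean is assumed as the roll-up rule so that different areas can contribute unequally to the one metric that matters.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Metric:
    """One node in a hypothetical metric hierarchy.

    Leaf metrics hold a measured ratio between 0 and 1; parent metrics are
    the weighted mean of their children, so areas with larger weights
    contribute more to the metric that matters.
    """
    name: str
    weight: float = 1.0
    ratio: Optional[float] = None
    children: List["Metric"] = field(default_factory=list)

    def score(self) -> float:
        if not self.children:
            if self.ratio is None:
                raise ValueError(f"leaf metric {self.name!r} has no measured ratio")
            return self.ratio
        total_weight = sum(child.weight for child in self.children)
        return sum(child.weight * child.score() for child in self.children) / total_weight


# Hypothetical roll-up towards a "trustworthiness" OMTM.
trustworthiness = Metric("Trustworthiness", children=[
    Metric("Data quality rules passing", weight=3, ratio=0.8),
    Metric("Critical elements with glossary terms", weight=1, ratio=0.4),
    Metric("Integration", weight=2, children=[
        Metric("BI reports reconciled to source", ratio=0.9),
        Metric("DQ rules reused by BI", ratio=0.5),
    ]),
])
print(f"{trustworthiness.score():.2f}")  # prints 0.70
```

A weighted mean keeps every level on the same 0 to 1 scale, which makes it easy to compare a department's contribution with the top-level figure; other aggregation rules (for example, taking the minimum) would be equally valid choices.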

Figure 12 Trusted Data Cake Layer

Data Management Principles and Scales

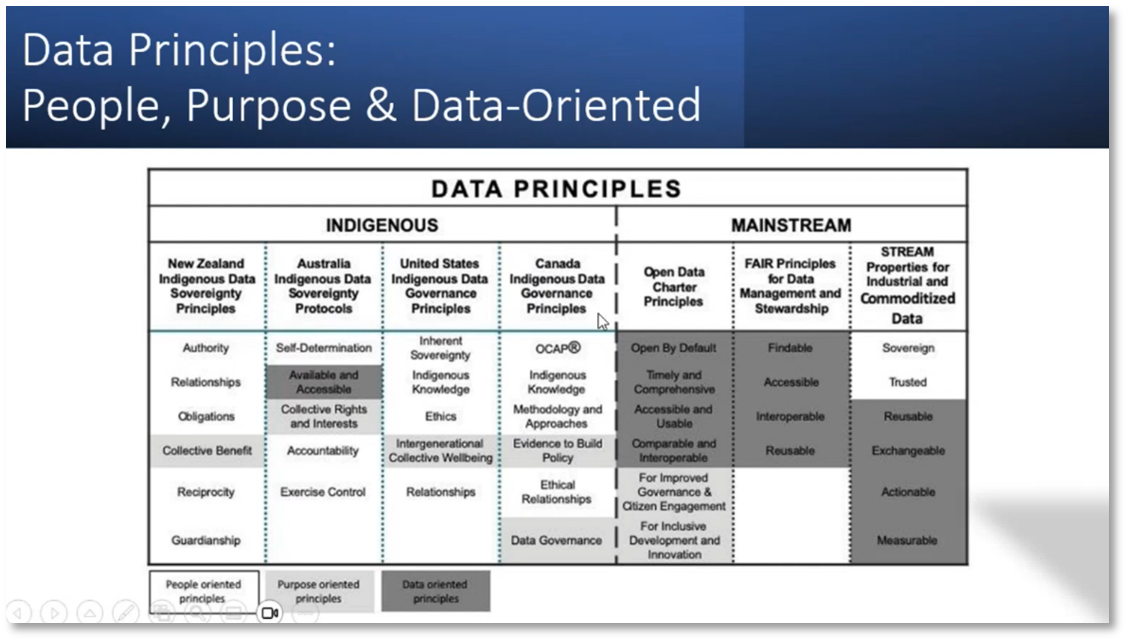

The office assesses employees quantitatively and qualitatively using six scales: trustworthiness, propensity to trust, the FAIR data maturity model, data management, data culture, and the strategic alignment maturity model. The strategic alignment maturity model measures how well strategies align with data and business. The Australians have principles called FAIR and CARE, which focus on data management for data and for people, respectively. The work alongside the DMMA uses six scales: strategic alignment, collaboration, business decision-making, FAIR, change, and data management metrics. Lastly, the Open and FAIR principles focus on purpose and data, respectively.

The Importance of FAIR Data Principles

The FAIR principles apply equally to data and metadata and require them to be associated with detailed provenance. They emphasise the use of a formal, broadly applicable language for knowledge representation, qualified references to other data and metadata, and evaluation of a dataset's capabilities. Together, they ensure that datasets are findable, accessible, interoperable, and reusable. Originally used in academic publishing, these principles have proven effective in correcting mistakes and improving the implementation of measures in various domains, showcasing the power of accessible data and metadata in research and beyond.

Figure 13 FAIR Data Principles

Impressed by Advanced Data Management Practices and the FAIR Framework

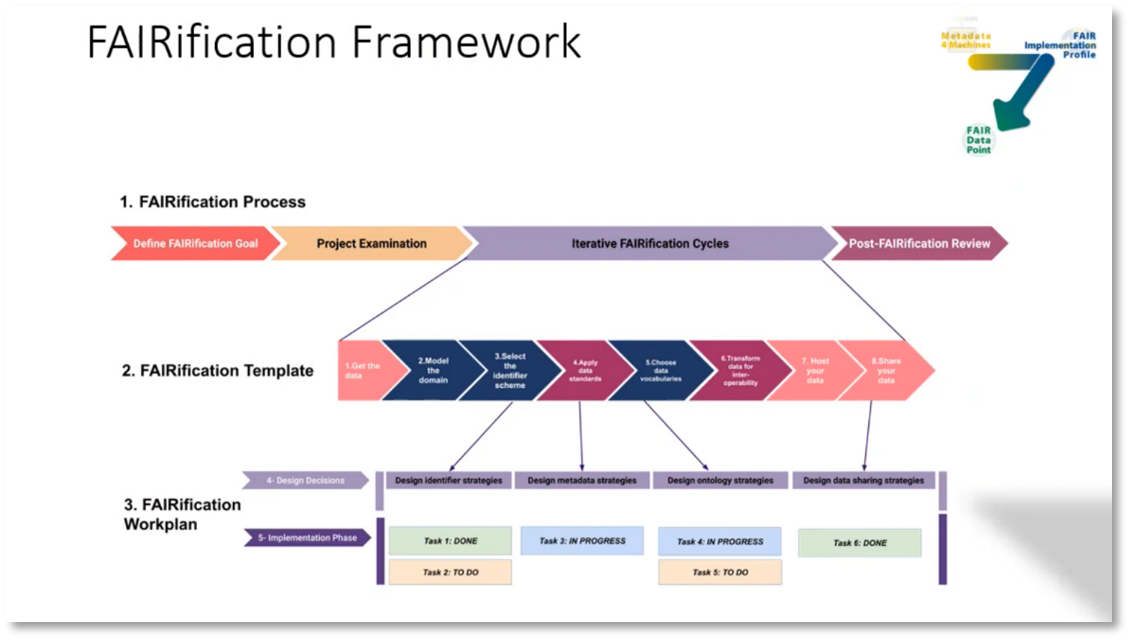

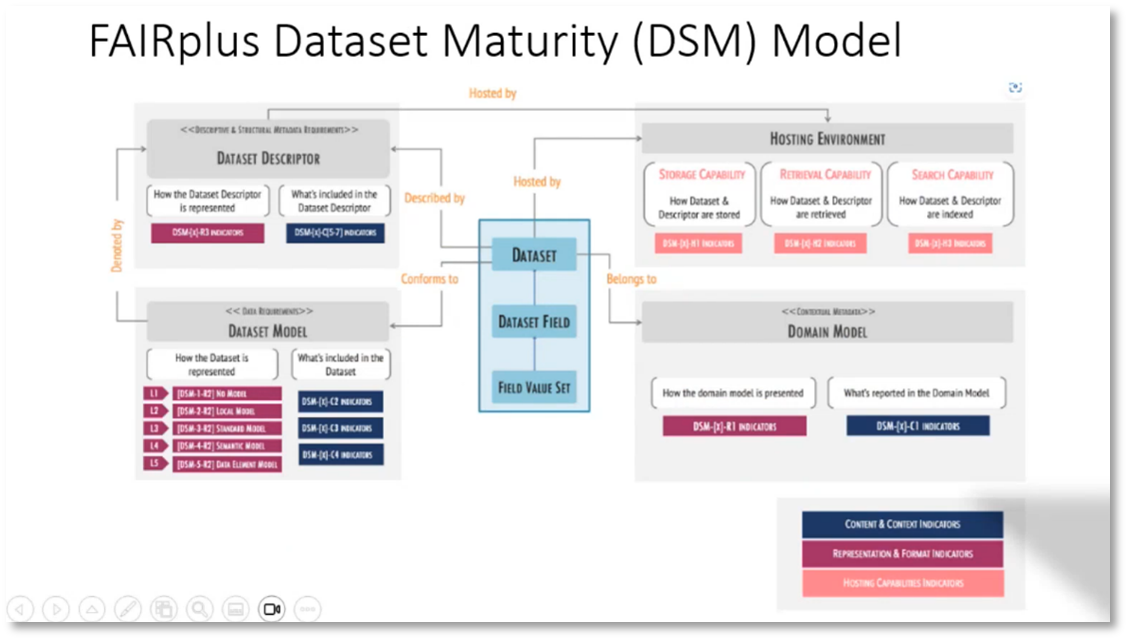

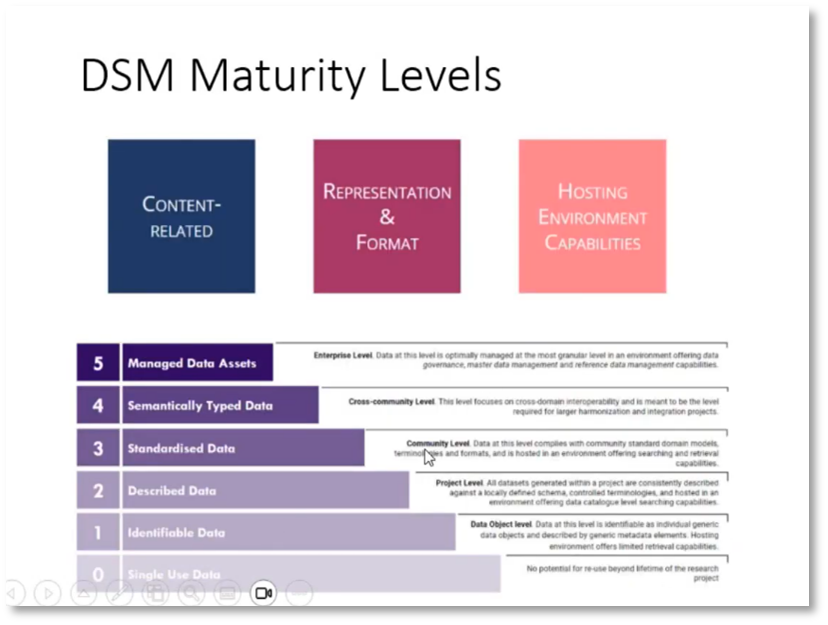

Howard is impressed by the researchers in Dublin for their advanced understanding and measurement of information. He highlights the arduous work required for effective data management, including metadata management. The Dublin researchers have made strides in taxonomies, knowledge graphs, crosswalks, and canonical models. The FAIR framework is introduced, which prioritises data survival and understandability. Universities must now submit publications according to the FAIR model, whose maturity levels include single-use data, identifiable data, described standardised data, and systematically typed data. Finally, there is also a regulation governing the repository used for data management.

Figure 14 FAIRification Framework

Figure 15 FAIRplus Dataset Maturity (DSM) Model

Figure 16 DSM Maturity Levels

Challenges and Principles of Open Data

Storing data in a reliable place is crucial to ensure future access, even if the software used to create it is discontinued. Various formats, including PDF, Excel, CSV, RDF, and linked open data, are discussed as options for expressing and describing data, and expressing data as linked open data is recommended to enable efficient processing. The open data maturity levels include principles addressing Indigenous representation, government-level governance, and the benefits commercial entities gain from open data publishing. FAIR and related principles, oriented towards data, people, and purpose, highlight different aspects of open data.
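As a hedged illustration of describing a dataset as linked data, the sketch below builds a minimal JSON-LD record using the W3C DCAT and Dublin Core Terms vocabularies with only Python's standard library. The dataset, the licence choice and the example.org URLs are hypothetical placeholders, and DCAT is one common vocabulary choice rather than one prescribed in the webinar.

```python
import json


def dcat_record(identifier: str, title: str, licence_url: str, csv_url: str) -> dict:
    """Build a minimal DCAT dataset description as a JSON-LD dictionary."""
    return {
        "@context": {
            "dcat": "http://www.w3.org/ns/dcat#",
            "dct": "http://purl.org/dc/terms/",
        },
        "@id": identifier,               # a persistent identifier supports findability
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:license": {"@id": licence_url},  # an explicit licence supports reuse
        "dcat:distribution": [
            {
                "@type": "dcat:Distribution",
                "dcat:mediaType": {"@id": "https://www.iana.org/assignments/media-types/text/csv"},
                "dcat:downloadURL": {"@id": csv_url},
            }
        ],
    }


# Hypothetical dataset used only for illustration.
record = dcat_record(
    "https://example.org/dataset/customer-churn",
    "Customer churn (monthly)",
    "https://creativecommons.org/licenses/by/4.0/",
    "https://example.org/files/customer-churn.csv",
)
print(json.dumps(record, indent=2))
```

Because the description uses shared vocabularies and resolvable identifiers, the same CSV file can be found and interpreted by other tools without knowing which software produced it.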

Figure 17 FAIR Data CMM

Figure 18 Data Principles: People, Purpose and Data-Oriented

Reflecting on the Use and Ethical Considerations of Data Sets

Managing and using data sets fairly and ethically is crucial. Howard emphasises the importance of ensuring that data sets are findable, accessible, interoperable, and reusable (FAIR) across different formats. An Excel template for assessing the FAIRness of data sets is introduced, which allows them to be ranked by essentiality, priority, importance, and usefulness. The priority ranking helps assess the evidence and determine compliance. Additionally, Howard acknowledges the progress the research community has made in data management and metadata. He also highlights the significance of metadata and knowledge in AI systems and the challenges generative AI faces in understanding and categorising information accurately.
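The priority-based ranking in the FAIR assessment template can be approximated in code. This sketch assumes that each indicator is tagged with a FAIR area and one of the priorities essential, important or useful (the labels used in the RDA FAIR Data Maturity Model), and it assumes a rule, not taken from the webinar's template, that an area is compliant only when all of its essential indicators are met. The function name `fair_assessment` and the sample results are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

PRIORITIES = ("essential", "important", "useful")


def fair_assessment(indicators: Iterable[Tuple[str, str, bool]]) -> Dict[str, dict]:
    """Summarise FAIR indicator results per area ("F", "A", "I" or "R").

    Each indicator is an (area, priority, met) tuple. Returns, per area, the
    fraction of indicators met at each priority and an overall compliance flag.
    """
    # area -> priority -> [number met, number assessed]
    counts = defaultdict(lambda: {p: [0, 0] for p in PRIORITIES})
    for area, priority, met in indicators:
        if priority not in PRIORITIES:
            raise ValueError(f"unknown priority: {priority!r}")
        counts[area][priority][0] += int(met)
        counts[area][priority][1] += 1

    summary = {}
    for area, by_priority in counts.items():
        scores = {p: met / total for p, (met, total) in by_priority.items() if total}
        essential_met, essential_total = by_priority["essential"]
        # Assumed rule: an area is compliant only if every essential indicator is met.
        summary[area] = {
            "scores": scores,
            "compliant": essential_total > 0 and essential_met == essential_total,
        }
    return summary


results = fair_assessment([
    ("F", "essential", True),
    ("F", "essential", True),
    ("F", "useful", False),
    ("A", "essential", False),
    ("A", "important", True),
])
for area in sorted(results):
    print(area, results[area])
```

Keeping the per-priority fractions alongside the pass/fail flag shows where the evidence is weak, not just whether an area passed.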

Figure 19 Open, FAIR & CARE

Figure 20 FAIR Assessment

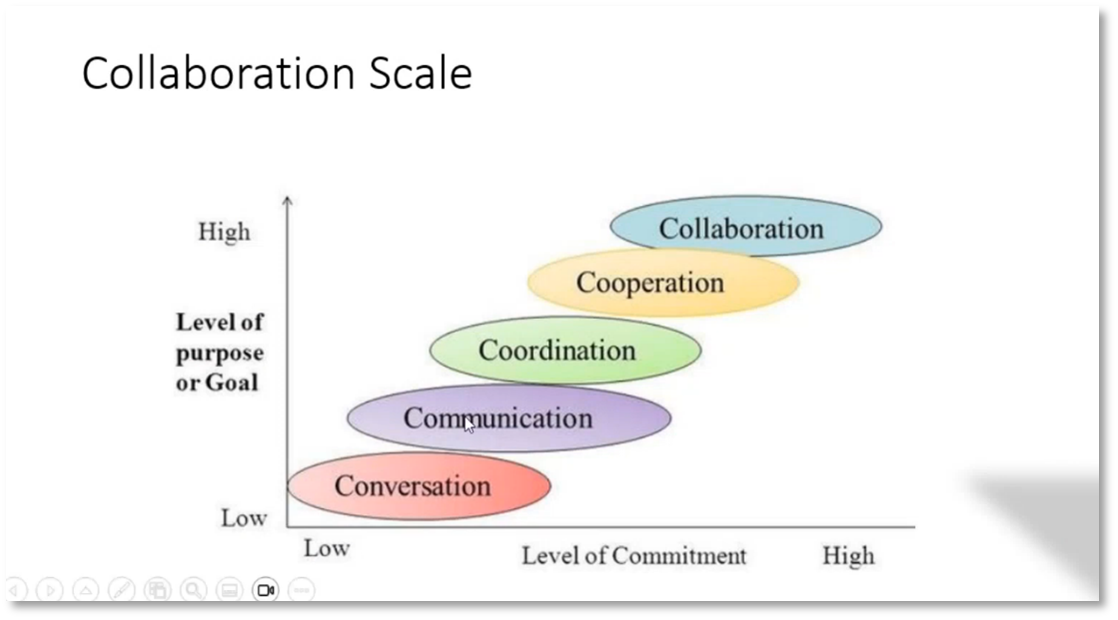

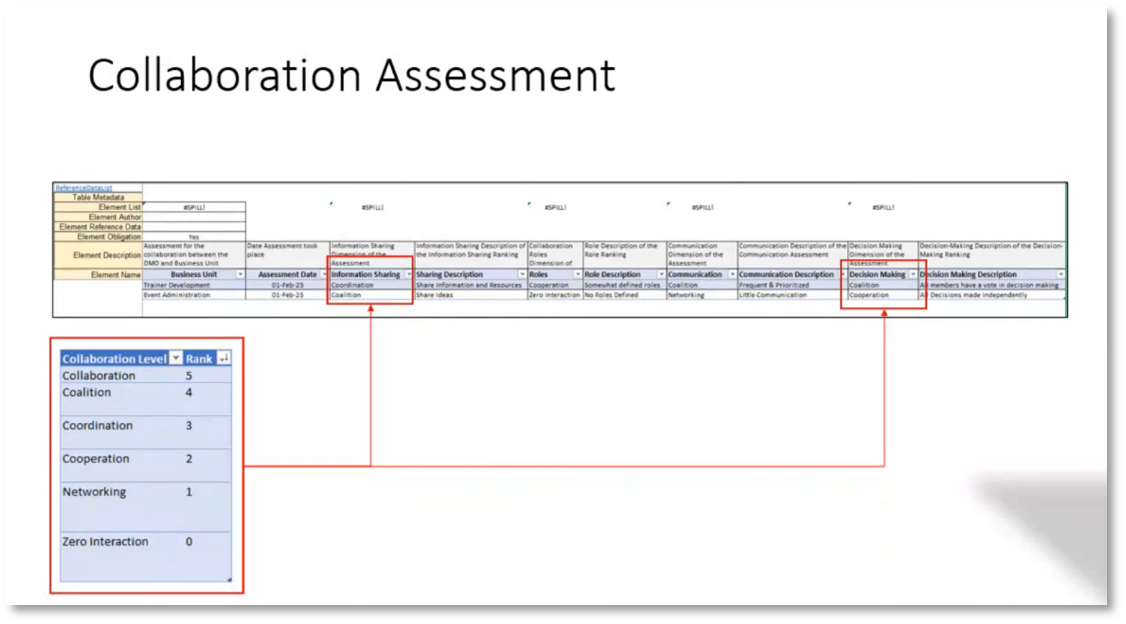

Observations on Collaboration and Decision-Making Process

Howard discusses the importance of shaping information properly so that decisions, even those made by machines, are trustworthy and precise. He introduces a collaboration scale that starts with communication and progresses through coordination and cooperation to collaboration, and he emphasises the significance of measuring collaboration levels. Howard also presents a "network, cooperate, coordinate, share, and understand" process for building collaboration. Decision-making can slow down as collaboration deepens because of the need for consensus, and it becomes even more complex when multiple internal and external parties are involved.
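The collaboration scale can be treated as an ordered ladder in which each level builds on the ones below it. The sketch below assumes, for illustration only, that a pair of departments reaches a level only if there is evidence for that level and for every level beneath it; the enum, the evidence keys and `assess_level` are hypothetical.

```python
from enum import IntEnum
from typing import Dict


class CollaborationLevel(IntEnum):
    """Hypothetical ordering of the collaboration scale, lowest to highest."""
    NONE = 0
    COMMUNICATION = 1
    COORDINATION = 2
    COOPERATION = 3
    COLLABORATION = 4


def assess_level(evidence: Dict[str, bool]) -> CollaborationLevel:
    """Return the highest level for which this level and all lower levels have evidence."""
    reached = CollaborationLevel.NONE
    for level in CollaborationLevel:
        if level is CollaborationLevel.NONE:
            continue
        # The ladder is cumulative: a gap stops the climb even if higher levels show evidence.
        if not evidence.get(level.name.lower(), False):
            break
        reached = level
    return reached


finance_and_bi = {
    "communication": True,
    "coordination": True,
    "cooperation": False,
    "collaboration": True,
}
print(assess_level(finance_and_bi).name)  # prints COORDINATION
```

Using `IntEnum` keeps the levels comparable (for example, `level >= CollaborationLevel.COOPERATION`), which is convenient when reporting progress over time.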

Figure 21 Measuring Collaboration

Figure 22 Collaboration Scale

Figure 23 Scales of Collaboration

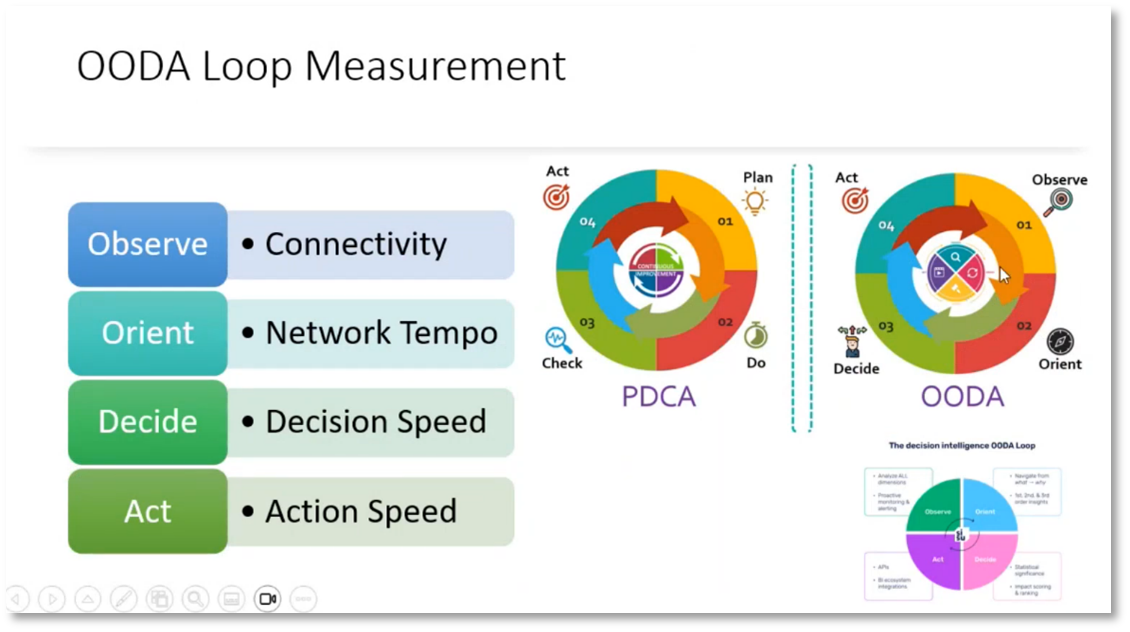

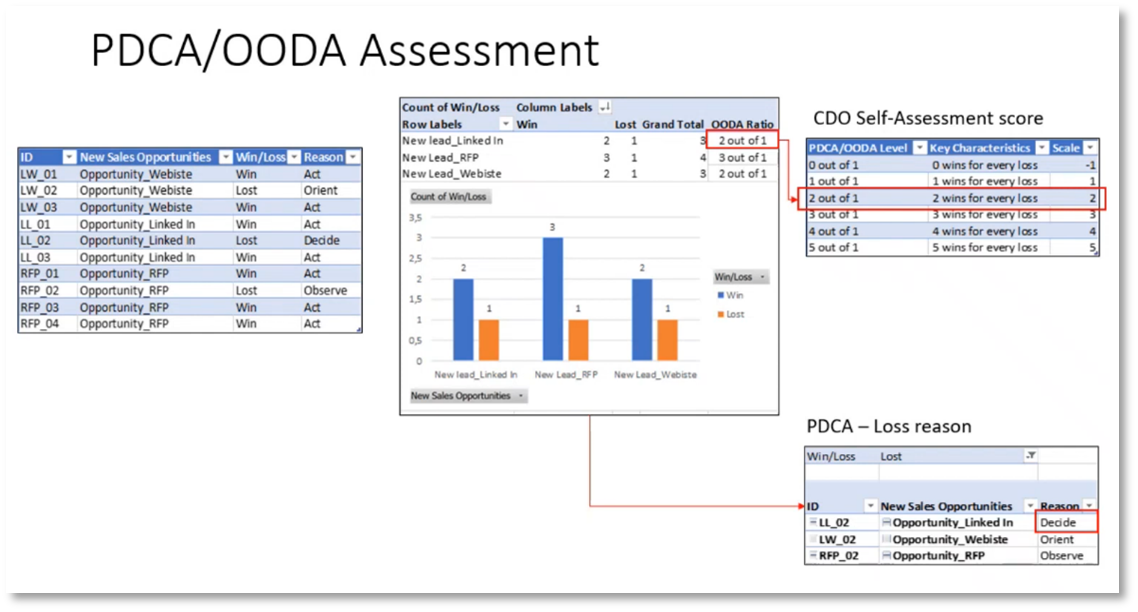

OODA Process and Decision-Making Techniques

The OODA loop, developed by US Air Force fighter pilot and military strategist John Boyd, involves observing, orienting, deciding, and acting. It is used in decision-making, and a root cause analysis can help explain wins and losses. Effective communication with business departments is important, and an assessment has been created to evaluate department collaboration, communication, and role clarity. Depending on the situation, decision-making may require being authoritative or seeking consensus; faster decisions are often preferred, but considering other factors is also crucial for making better decisions.
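A bare-bones OODA loop can be written as a cycle that records each observation, orientation, decision and outcome, so that wins and losses can later be examined in a root cause analysis. The threshold-based orientation rule, the `act` callback and the sample data are hypothetical stand-ins for real business signals.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class CycleRecord:
    observation: float
    orientation: str
    decision: str
    won: bool  # outcome kept for later root cause analysis


def run_ooda(observations: Iterable[float], baseline: float,
             act: Callable[[str], bool]) -> List[CycleRecord]:
    log = []
    for observation in observations:                                   # Observe
        orientation = "above" if observation > baseline else "within"  # Orient against a baseline
        decision = "respond" if orientation == "above" else "hold"     # Decide
        won = act(decision)                                            # Act and capture the result
        log.append(CycleRecord(observation, orientation, decision, won))
    return log


# Hypothetical run: pretend that only "hold" decisions worked out this time.
log = run_ooda([0.2, 0.9, 0.5], baseline=0.6, act=lambda decision: decision == "hold")
losses = [record for record in log if not record.won]
print([(r.observation, r.decision) for r in losses])  # prints [(0.9, 'respond')]
```

The point of the log is that each loss keeps the observation and orientation that led to it, which is the evidence a root cause analysis needs.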

Figure 24 Collaboration Assessment

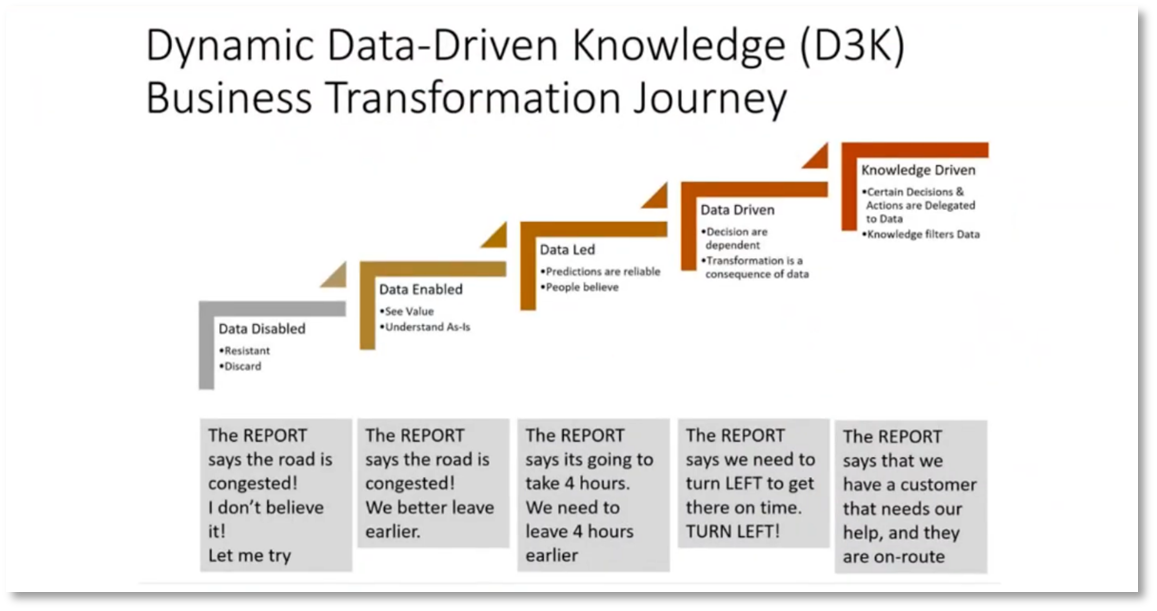

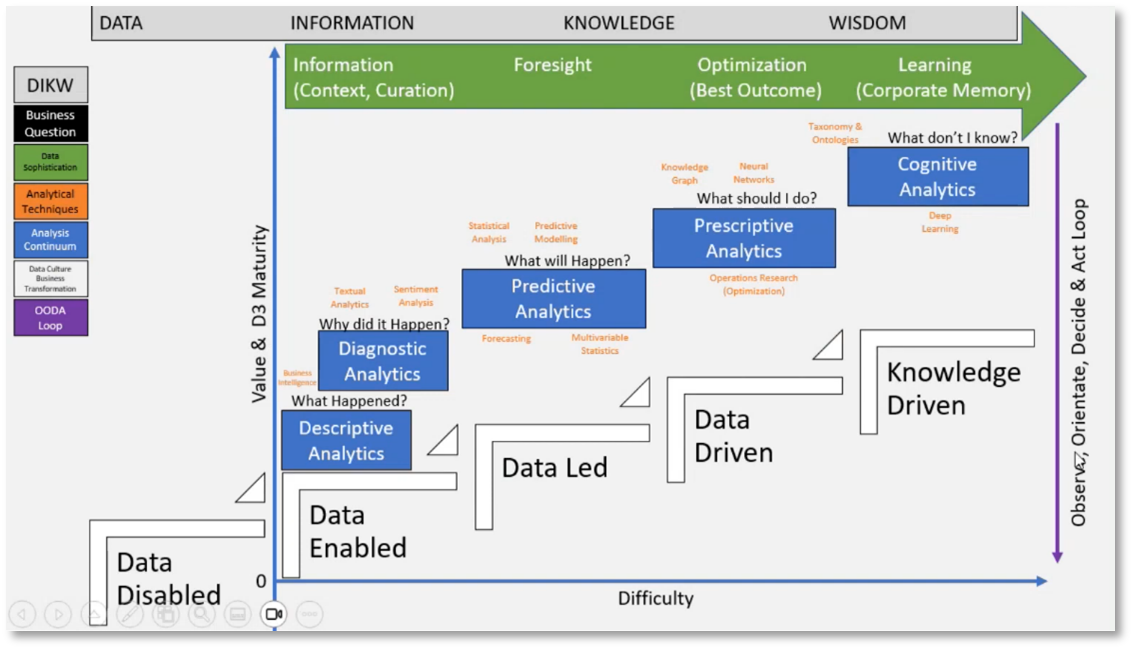

Digital Decisioning Strategy and Process

When implementing digital decisioning, it is crucial to decide carefully which decisions are appropriate for machines to make. To do this, a comprehensive inventory of all decisions must be conducted, and the state of information on those decisions must be assessed. The OODA loop is a helpful methodology for decision-making. Key questions to consider include what happened, why it happened, what will happen, what should be done, and what information is unknown. Index weights can be assigned to prioritise decisions, and different types of analytics can be used to answer these questions. This decision-making process is commonly found in the DMBOK.
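The inventory-and-weighting step can be sketched as scoring each decision against weighted criteria and keeping only those with clear cause and effect as candidates for automation. The criteria, the 1 to 5 scale, the index weights, the clarity cut-off and the sample decisions below are all illustrative assumptions, not values from the webinar.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical criteria and index weights (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "frequency": 0.40,
    "information_quality": 0.35,
    "cause_effect_clarity": 0.25,
}


@dataclass
class Decision:
    name: str
    scores: Dict[str, int]  # criterion -> score on an assumed 1-5 scale

    def index(self) -> float:
        """Weighted index used to prioritise the decision."""
        return sum(weight * self.scores[criterion] for criterion, weight in WEIGHTS.items())


def rank_for_automation(inventory: List[Decision], min_clarity: int = 4) -> List[Decision]:
    """Keep decisions with clear cause and effect, highest index first."""
    candidates = [d for d in inventory if d.scores["cause_effect_clarity"] >= min_clarity]
    return sorted(candidates, key=lambda d: d.index(), reverse=True)


inventory = [
    Decision("Approve routine credit-limit increase",
             {"frequency": 5, "information_quality": 4, "cause_effect_clarity": 5}),
    Decision("Enter a new market",
             {"frequency": 1, "information_quality": 2, "cause_effect_clarity": 2}),
    Decision("Reorder fast-moving stock",
             {"frequency": 4, "information_quality": 5, "cause_effect_clarity": 4}),
]
ranked = rank_for_automation(inventory)
for decision in ranked:
    print(f"{decision.name}: {decision.index():.2f}")
```

Filtering on cause-and-effect clarity before ranking keeps strategic, poorly understood decisions out of the automation queue however often they occur.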

Figure 25 Dynamic Data-Driven Knowledge (D3K)

Figure 26 Digital Decisioning Strategy and Process

Understanding the Different Types of Analytics and Decision-Making Approaches

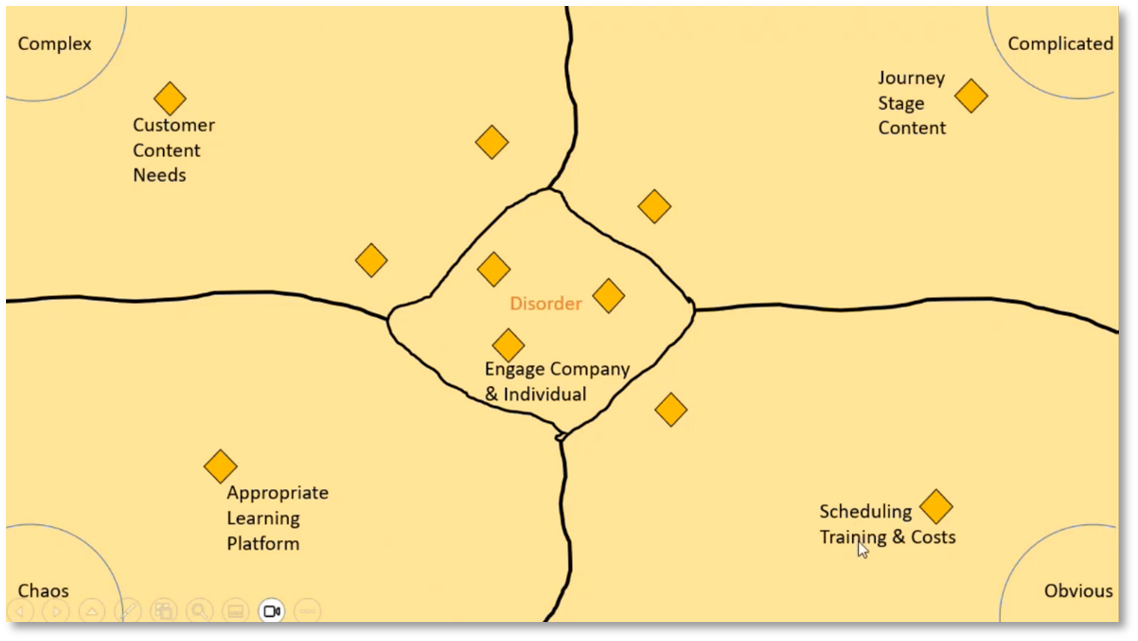

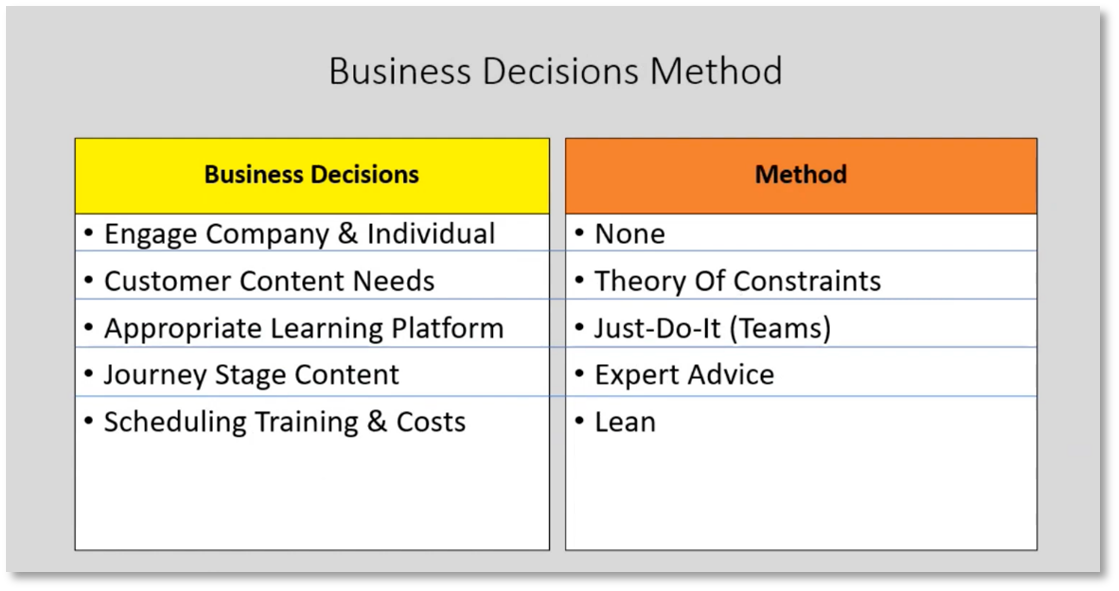

The field of decision-making involves several approaches and tools. Traditional Business Intelligence answers what happened and why, Predictive Analytics predicts likely outcomes, and Prescriptive Analytics suggests actions to achieve desired outcomes. Operational Research focuses on finding the most effective approach, while Blind Spot Analysis looks at what we might miss due to uncertainty. The Cynefin framework measures uncertainty and helps categorise decisions into domains based on how well cause and effect are understood. Digital decision-making is suited to decisions with clear cause-and-effect relationships, but a drift in that understanding may lead to incorrect decisions. Chaos represents emergencies in which data is scarce, and the response is to assign a person or team to make the call. In disorder there is no answer, and the decision should not be made until the situation is better understood. Decisions must be considered based on the level of information and understanding available, as not all decisions can be treated the same way: unknown unknowns, known unknowns, and known knowns each need a different decision-making approach.
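The decision domains described above appear to follow Dave Snowden's Cynefin framework. The sketch maps a hypothetical assessment of cause and effect onto a domain and its usual response pattern and, following the point that digital decisioning suits clear cause-and-effect relationships, flags only the clear domain as suitable for automation. The input labels and function names are assumptions.

```python
from enum import Enum
from typing import Optional


class Domain(Enum):
    """Cynefin domains with their usual response patterns."""
    CLEAR = "sense-categorise-respond"
    COMPLICATED = "sense-analyse-respond"
    COMPLEX = "probe-sense-respond"
    CHAOTIC = "act-sense-respond"
    DISORDER = "break the situation down before deciding"


# Hypothetical labels for how well cause and effect are understood.
_CAUSE_EFFECT_TO_DOMAIN = {
    "obvious": Domain.CLEAR,          # known knowns
    "knowable": Domain.COMPLICATED,   # known unknowns: analysis or expertise needed
    "retrospective": Domain.COMPLEX,  # unknown unknowns: only clear in hindsight
    "none": Domain.CHAOTIC,           # no discernible link: act first, e.g. assign someone to call it
}


def classify(cause_effect: Optional[str]) -> Domain:
    """Map an assessment of cause and effect to a domain; anything unrecognised is disorder."""
    return _CAUSE_EFFECT_TO_DOMAIN.get(cause_effect, Domain.DISORDER)


def suitable_for_digital_decisioning(domain: Domain) -> bool:
    """Assumption: only decisions with clear cause and effect are automated."""
    return domain is Domain.CLEAR


for label in ("obvious", "knowable", "retrospective", "none", None):
    domain = classify(label)
    print(label, domain.name, domain.value, suitable_for_digital_decisioning(domain))
```

Treating anything unrecognised as disorder mirrors the advice not to decide until the situation has been understood well enough to place it in a domain.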

Figure 27 Context Frame: North Star & OMTM Layers

Figure 28 D3K Scale

Figure 29 Knowledge-Driven (Smart) Business Decisions

Figure 30 Understanding the Different Types of Analytics and Decision-Making Approaches

Strategies and Methods for Effective Decision-Making

Effective decision-making involves a range of approaches and considerations. Confirmatory and exploratory analytics are important tools for analysing decisions, and classifying decisions by their nature can help guide the decision-making process. Seeking too many opinions can have a negative impact, particularly when discussing scheduling and training costs. Research indicating that prompt, well-informed decision-making leads to success in top companies demonstrates its importance. However, decision-making can be challenging when data is lacking or team members disagree. Different approaches, such as the theory of constraints and the "just do it" Bron approach, can be useful in different situations. Choosing the appropriate method for each type of decision is important, and it may be necessary to refrain from deciding when there is insufficient information. The "OO" loop involves observing market trends and competitor activities; ongoing observation, decision-making, and adaptation are necessary to respond to market changes.

Figure 31 State of Knowing for Business Decisions

Figure 32 Business Decision Method

Quick decision-making and strategy alignment in business

The Orient step involves identifying competitors' unique strategies and evaluating whether there are gaps in our own approach. Timely decision-making is critical to stay ahead of competitors and avoid being stuck in a cycle of observation and orientation. The OO scale quantifies the number of missed opportunities for every successful decision made. The PDCA (Plan-Do-Check-Act) framework can help analyse the reasons for missed opportunities and improve decision-making. Strategy alignment ensures that business strategies align with overall business objectives. Capability and data life cycle assessments can identify areas for improvement in business processes and strategies, helping to achieve overall business goals.
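The OO scale can be computed from a simple log of opportunities. In this sketch, each opportunity is recorded as a hypothetical (status, reason) pair; the ratio of missed opportunities to successful decisions is returned together with the most common reasons for missing, which could feed the Check step of a PDCA review. The status labels, the `oo_scale` function and the sample log are assumptions for illustration.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def oo_scale(opportunities: Iterable[Tuple[str, str]]) -> Tuple[float, List[Tuple[str, int]]]:
    """Return (missed per successful decision, most common reasons for missing).

    Each opportunity is a (status, reason) pair, where status is one of
    "success", "failure" or "missed" (stuck in observe/orient, never acted on).
    """
    statuses: Counter = Counter()
    missed_reasons: Counter = Counter()
    for status, reason in opportunities:
        statuses[status] += 1
        if status == "missed":
            missed_reasons[reason] += 1
    successes = statuses["success"]
    # With no successes yet, every miss is uncompensated, so report infinity.
    ratio = statuses["missed"] / successes if successes else float("inf")
    return ratio, missed_reasons.most_common()


opportunity_log = [
    ("success", ""),
    ("missed", "waiting for consensus"),
    ("missed", "waiting for consensus"),
    ("missed", "data not available"),
    ("success", ""),
    ("failure", "forecast was wrong"),
]
ratio, reasons = oo_scale(opportunity_log)
print(ratio)    # prints 1.5
print(reasons)  # prints [('waiting for consensus', 2), ('data not available', 1)]
```

Failures are counted but do not enter the ratio, because they were acted on; the scale targets opportunities lost to staying in observation and orientation.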

Figure 33 OODA Loop Measurement

Figure 34 PDCA/OODA Assessment

Comprehensive View of Program Operation

A comprehensive view of a program's operation was emphasised as necessary to assess its effectiveness and progress. Focusing on only one aspect may lead to a biased view, and a technical answer alone may not give a clear understanding of how well a program is performing. The need to consider how well data supports decision-making processes was also acknowledged. Additionally, decision-making speed may differ between individual teams and the organisation as a whole. Therefore, combining various perspectives and approaches in program management is necessary to obtain a well-rounded view.

Different Types of Organizations and Decision-Making

Howard introduces different types of organisations: inventory-oriented, customer-oriented, and product-oriented. He explains that inventory-oriented organisations focus on inventory and operate through centralised decision-making. In contrast, customer-oriented organisations allow ground-level employees to make decisions while interacting with customers, and product-oriented organisations prioritise creating quality products that lead the market. Howard emphasises the importance of decision-making processes and the challenge of eliminating subjectivity from them.

Importance of Data and Maturity Assessments in Decision Making

The importance of data in decision-making and in achieving organisational goals cannot be overstated. Effective analysis requires understanding cause-and-effect relationships and identifying known knowns, unknown knowns, and known unknowns. However, decision-makers often overlook the impact of information quality on their decisions. Tom Redman emphasised embedding data and people culture in the organisation. Howard shares his experience of doing maturity assessments in South Africa. Organisations may be sceptical of assessments, feeling that they are a ploy to extract more money without tangible benefits.

Importance of Comprehensive Assessments in Understanding Organizational Progress

Assessing an organisation's performance requires considering multiple elements rather than relying on a single assessment or maturity check. The goal is to educate CDOs on how to perform their jobs effectively. As with creating a logical data model, it is important to gather people, understand the organisation's current state, and make the assessment process enjoyable. However, organisations often make the mistake of requesting estimates of how long it will take to reach a certain maturity level; progress can vary greatly, and reaching the higher levels may take years.

Importance of Assessment before Project Quoting

Assessment is crucial before providing a quote for a project. It helps to understand the organisation's requirements and identify potential hurdles to effective project delivery. Breaking the assessment process into smaller components, such as those of the DMAA framework (Define, Measure, Analyse, and Act), can help quantify effort and clarify the project's feasibility. To determine a project's success, it is necessary to assess a wide range of aspects rather than relying on a single measure.

If you want to receive the recording, kindly contact Debbie (social@modelwaresystems.com).

Don’t forget to join our exciting LinkedIn and Meetup data communities so you don't miss out!